Published on April 5, 2025 12:21 PM GMT

This is a summary of Yann LeCun's talk "Mathematical Obstacles on the Way to Human-Level AI". I've tried to make it more accessible to people who are familiar with basic AI concepts but not with the level of maths Yann presents. You can watch the original talk on YouTube.

I disagree with Yann, but I have tried to represent his arguments as faithfully as possible. I think understanding people whose opinions differ from yours is incredibly important for thinking clearly about things.

In an appendix on my blog I include Gemini 2.5 Pro's analysis of my summary. In short:

The summary correctly identifies the core arguments, uses LeCun's terminology [...], and reflects the overall tone and conclusions of the talk

Why Yann LeCun thinks LLMs will not scale to AGI

LLMs use deep learning for both pretraining and fine-tuning, and deep learning is sample-inefficient: models need to see many examples before they learn something. Humans and animals learn from far fewer samples.

LeCun's slide

LLMs are trained primarily on text, which carries less raw data than other formats. To get to AGI, we need to train models on sensory inputs (e.g. videos). Measured in bits, humans take in more data than LLMs are trained on.

LeCun's slide

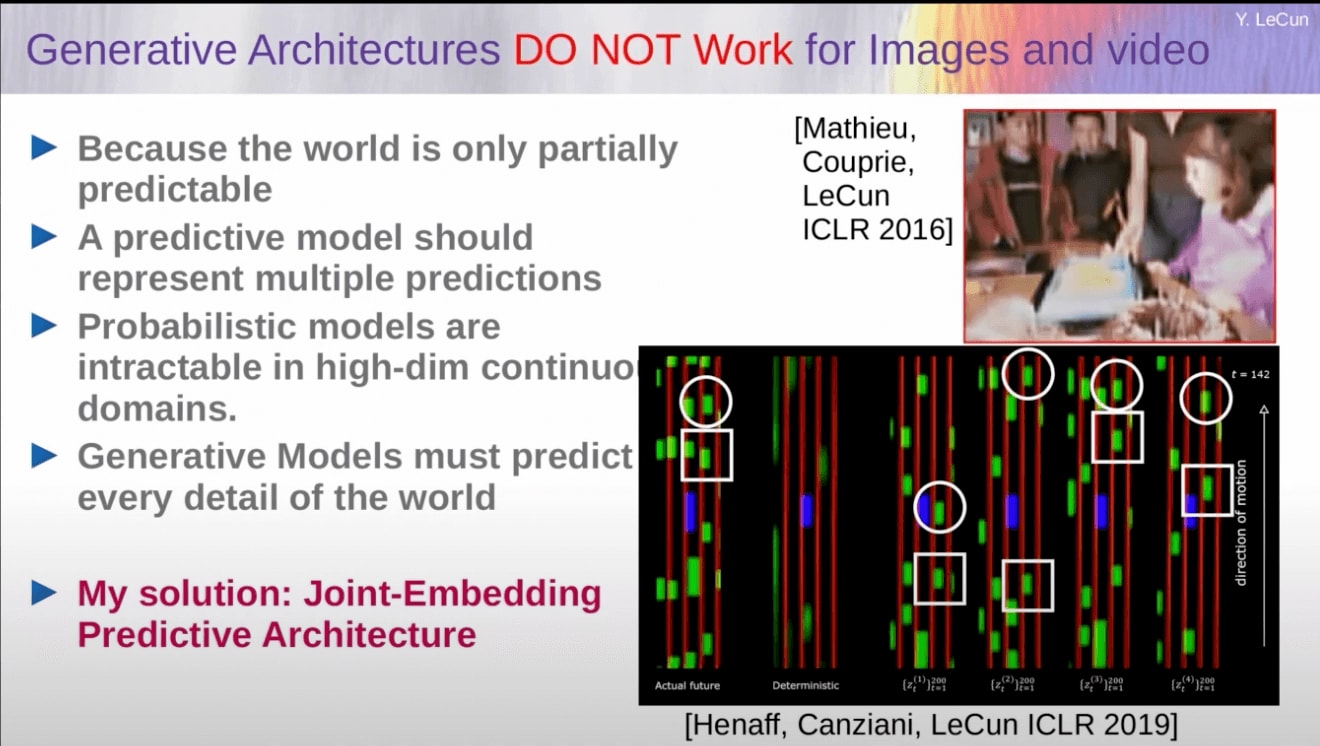
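To make the "measured in bits" comparison concrete, here is a back-of-envelope sketch. All four inputs are assumed, illustrative round figures (similar in spirit to the kind of estimate LeCun uses, but not taken from his slides), and the function names are hypothetical.

```python
# Back-of-envelope comparison of raw data volume: LLM pretraining text vs. a child's vision.
# Every constant below is an ASSUMED round figure for illustration, not read off the slides.
SECONDS_PER_HOUR = 3600

LLM_TRAINING_TOKENS = 3e13      # assumption: tokens in a large pretraining corpus
BYTES_PER_TOKEN = 3             # assumption: rough average bytes of text per token
CHILD_WAKING_HOURS = 16_000     # assumption: waking hours of a roughly 4-year-old child
VISUAL_BYTES_PER_SECOND = 2e6   # assumption: ~2 MB/s reaching the brain via the optic nerves


def llm_bits() -> float:
    """Total bits of text seen during pretraining."""
    return LLM_TRAINING_TOKENS * BYTES_PER_TOKEN * 8


def child_visual_bits() -> float:
    """Total bits of visual input over the child's waking hours."""
    return CHILD_WAKING_HOURS * SECONDS_PER_HOUR * VISUAL_BYTES_PER_SECOND * 8


if __name__ == "__main__":
    print(f"LLM text:     {llm_bits():.2e} bits")
    print(f"Child vision: {child_visual_bits():.2e} bits")
    print(f"Ratio (child / LLM): {child_visual_bits() / llm_bits():.2f}")
```

With these assumed inputs the two totals land in the same order of magnitude, with the child slightly ahead. The argument rests on that rough parity after only a few years of vision, not on the exact ratio.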

LLMs are set up to predict the next token. This means they are predicting in a space where the number of possible outputs grows exponentially with the length of the output, and only one of them is correct, so they are almost always incorrect. The same applies to images and videos: there are so many possible outputs, and the world is only partially predictable, so it isn't feasible for the model to be correct.

My visualisation

LeCun's slides
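The "exponentially many options" point can be made concrete. With a vocabulary of V tokens there are V^n possible sequences of length n. If, as a deliberate simplification, each token independently has probability e of going wrong, the chance that a whole n-token output stays correct is (1 - e)^n, which shrinks exponentially with n; LeCun has given a version of this argument in earlier talks. The vocabulary size, error rate and function names below are assumptions for illustration.

```python
import math


def num_sequences(vocab_size: int, length: int) -> int:
    """Number of distinct token sequences of a given length: vocab_size ** length."""
    return vocab_size ** length


def p_all_tokens_ok(per_token_error: float, length: int) -> float:
    """Probability that every generated token is acceptable.

    ASSUMES each token independently goes wrong with the same probability,
    which is a deliberate simplification.
    """
    return (1.0 - per_token_error) ** length


if __name__ == "__main__":
    vocab = 50_000  # assumed vocabulary size
    for n in (1, 10, 100):
        digits = n * math.log10(vocab)  # log10 of vocab ** n, avoids printing a huge integer
        print(f"length {n:>3}: ~10^{digits:.0f} possible sequences")
    for n in (10, 100, 1000):
        print(f"length {n:>4}: P(all tokens ok) = {p_all_tokens_ok(0.01, n):.2e}")
```

Even a 1% per-token error rate leaves roughly a one-in-three chance that a 100-token answer is fully correct under this model, and far less for 1,000 tokens.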

AI systems spend the same amount of computation on easy problems as on hard ones, but they should spend longer on hard problems.

- He thinks chain of thought is a trick that, he implies, doesn't really solve this (video timestamp: 19:33).
- Instead, he thinks that when posed with a problem, AI systems should use optimization/search algorithms against an objective, rather than a single pass through a feed-forward neural network. The search can then continue until it solves the problem, which means the system can work longer on a hard problem.

LeCun's slide
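A minimal sketch of "inference as optimization", assuming a toy one-dimensional objective E(y) = 0.5 * c * (y - t)^2 in place of a learned one. Plain gradient descent stands in for whatever search procedure LeCun has in mind, and the objective, the "curvature as difficulty" framing and the function names (`minimise`, `quadratic_grad`) are hypothetical. The loop runs until the gradient is near zero, so a poorly conditioned ("hard") problem consumes many more steps than an easy one, whereas a fixed-depth feed-forward pass would spend the same computation on both.

```python
def minimise(grad, y0: float, lr: float = 0.1, tol: float = 1e-6, max_steps: int = 100_000):
    """Gradient descent on an output y until the gradient is (near) zero.

    Returns the final y and the number of update steps used, so harder
    problems can consume more steps than easy ones.
    """
    y = y0
    for step in range(max_steps):
        g = grad(y)
        if abs(g) < tol:  # objective is (locally) minimised: stop early
            return y, step
        y -= lr * g
    return y, max_steps


def quadratic_grad(curvature: float, target: float):
    """Gradient of the hypothetical toy objective E(y) = 0.5 * curvature * (y - target) ** 2."""
    return lambda y: curvature * (y - target)


if __name__ == "__main__":
    # Toy stand-ins: a well-conditioned ("easy") and a poorly conditioned ("hard") problem.
    easy_y, easy_steps = minimise(quadratic_grad(5.0, 1.0), y0=0.0)
    hard_y, hard_steps = minimise(quadratic_grad(0.1, 1.0), y0=0.0)
    print(f"easy: y={easy_y:.4f} after {easy_steps} steps")
    print(f"hard: y={hard_y:.4f} after {hard_steps} steps")
```

Both runs reach the same answer (y close to 1); the difference is how long the search has to keep going, which is the property LeCun wants.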

How Yann LeCun thinks we should build AGI

We need to model it after how humans think

- Sensory inputs (e.g. videos)
- Have access to long-term memory via tools
- Should spend some time planning
- Should spend some time reasoning and doing

Needs to be safe and controllable by design

- "Not by fine-tuning, like current AI systems" (video timestamp: 19:01)

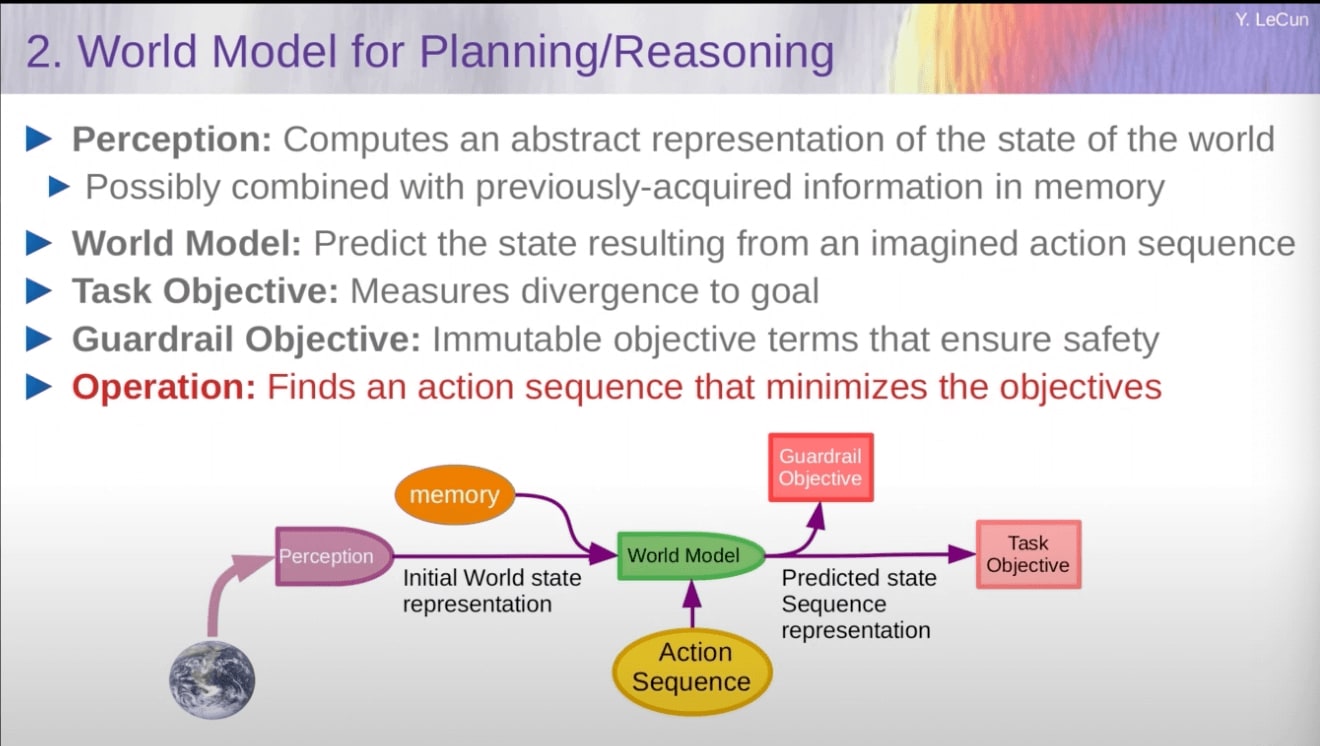

The specific architecture he proposes is:

- An AI doing action generation, i.e. "What actions could I take?" Output = X, Y, Z.
- An AI doing world modelling, i.e. "What will happen in the world if actions X, Y, Z are taken?" Output = world where scenario A happens, world where scenario B happens, etc.
  - This takes in:
    - The potential actions
    - Sensory data about the world
    - Data from a tool
    - (Extension added in a later slide: some random noise that represents latent unpredictability/uncertainty in the world, so that plans hold up across different situations)
  - There are two of these models: one that effectively represents the prompt, e.g. 'maximise making paperclips', and one for safety, e.g. 'don't destroy Earth'. These are combined in an unspecified way.
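The pieces above can be wired into a planning loop. This is a toy sketch under heavy assumptions: the world is a single number, the "action generator" is a fixed list of increments, the world model is a hand-written function rather than a learned one, and, because the talk leaves the combination unspecified, the task and safety objectives are simply added together. All names (`world_model`, `task_cost`, `guardrail_cost`, `plan`) and constants are hypothetical. The planner tries every action sequence over a short horizon and averages the cost over several sampled noise values, mirroring the idea of plans that are good across different situations.

```python
import itertools
import random

# Hypothetical toy world: the state is one number (say, paperclips made so far).
ACTIONS = (-1, 0, 1, 2)  # candidate actions the action generator can propose
GOAL = 7.0               # task objective: end as close to this value as possible
LIMIT = 6.0              # safety objective: no predicted state may exceed this


def world_model(state: float, action: int, noise: float) -> float:
    """Hand-written stand-in for a learned predictor of the next state."""
    return state + action + noise


def task_cost(state: float) -> float:
    return abs(GOAL - state)


def guardrail_cost(state: float) -> float:
    return 100.0 if state > LIMIT else 0.0  # large penalty for unsafe states


def plan(state: float, horizon: int = 3, n_noise: int = 20, seed: int = 0):
    """Return the action sequence with the lowest average cost over sampled futures."""
    rng = random.Random(seed)
    # The same latent noise samples are reused for every candidate plan.
    futures = [[rng.gauss(0.0, 0.1) for _ in range(horizon)] for _ in range(n_noise)]
    best_actions, best_cost = None, float("inf")
    for actions in itertools.product(ACTIONS, repeat=horizon):
        total = 0.0
        for noise in futures:
            s = state
            for a, z in zip(actions, noise):
                s = world_model(s, a, z)
                total += guardrail_cost(s)  # safety is checked at every step
            total += task_cost(s)           # task is judged on the final state
        cost = total / n_noise              # assumed combination: plain sum, averaged
        if cost < best_cost:
            best_actions, best_cost = actions, cost
    return best_actions, best_cost


if __name__ == "__main__":
    actions, cost = plan(0.0)
    print(f"chosen actions: {actions}, expected cost: {cost:.3f}")
```

With these assumed numbers, the task objective alone would favour `(2, 2, 2)`, which gets closest to the goal of 7. Its final state exceeds `LIMIT` in roughly half of the sampled futures, so the guardrail penalty makes it expensive and `plan(0.0)` settles on a sequence totalling 5. A real system would replace the exhaustive `itertools.product` search, whose cost grows exponentially with the horizon, with gradient-based or sampling-based optimisation.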

AI should be released as open source and used as an amplifier of human intelligence.

- Vision of the future: all interactions with the digital world are through AI assistants
- Wants a diverse set of AI assistants for linguistic, cultural and value-system diversity
