Published on April 2, 2025 10:48 AM GMT

Epistemic status: sure of the fundamentals; the informal tone is a choice to publish this take faster. The title is a bit clickbait, but in good faith. I don't think much context is needed here: ghiblification and native image generation were (and still are) a very much all-encompassing phenomenon.

No-no, not in the way you might have thought. Of course it is terrible for artists, it's a spit in the face of Miyazaki, who publicly disavowed image generation way back when.

A lot of people hate it, and image generation in general.

It is good, however, for AGI timelines.

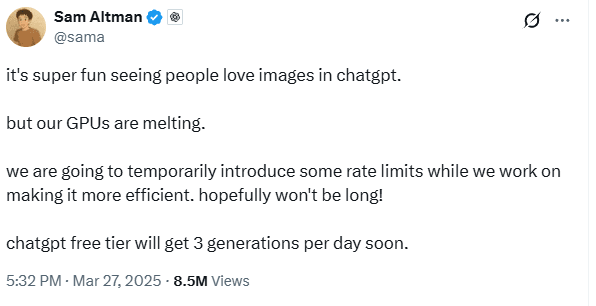

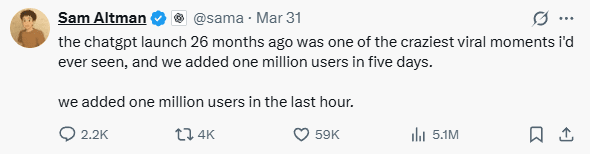

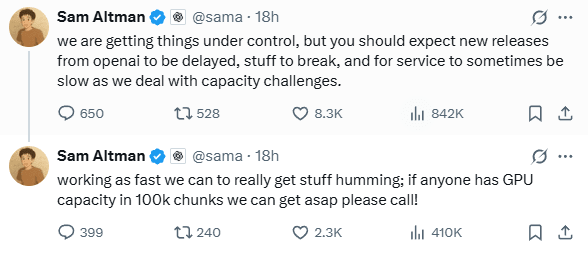

It is good because of this:

And this:

And this:

Why, you might ask? Isn't all this making people and investors "feel the AGI" and pour more money into it? Make more money for OpenAI and lead them to buy more GPUs?

Well, yeah. But...

Resource constraints

The resources are very much tight right now.

It might change, of course, but OpenAI only has so much compute. Where do you think they suddenly found capacity to support all these generations from millions of users?

Mind you, I work in the field: image generation is much more computationally expensive than text generation.

One image can take anywhere from 10 to 120 seconds to generate, depending on the model and the quality you expect.

That means that to serve 1 RPS (request per second) with a model that takes 10 seconds per image, you need 10 GPUs, assuming each GPU works on one image at a time. You have only 9? Too bad: the wait for each new request will keep growing, and it won't stop unless people stop asking for generations.

If you have far fewer GPUs than your RPS requires, your queue for image generation will spike from tens of seconds to hours and days quite fast. Millions of daily users and SOTA quality... Well. Take a guess where they got the GPUs to support all that.

I'd say from the pretraining cluster.
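To make the arithmetic concrete, here is a rough back-of-the-envelope sketch. It assumes each GPU works on exactly one image at a time, requests arrive at a steady rate, and the queue behaves like a simple fluid backlog (no batching, no bursts, nobody giving up). The function names and the numbers are mine, purely illustrative, and say nothing about how any real provider actually serves images.

```python
import math


def gpus_needed(rps: float, seconds_per_image: float) -> int:
    """GPUs required to keep up, assuming one image per GPU at a time."""
    return math.ceil(rps * seconds_per_image)


def queue_wait_seconds(
    rps: float,
    seconds_per_image: float,
    gpus: int,
    elapsed_seconds: float,
) -> float:
    """Approximate queueing delay for a request arriving after `elapsed_seconds`.

    Fluid approximation: the backlog grows at (arrival rate - capacity)
    whenever demand exceeds capacity, and a new request waits until the
    backlog ahead of it has been served.
    """
    capacity = gpus / seconds_per_image  # images per second the fleet can serve
    backlog = max(0.0, (rps - capacity) * elapsed_seconds)
    return backlog / capacity


if __name__ == "__main__":
    print("GPUs needed for 1 RPS at 10 s/image:", gpus_needed(1, 10))
    for hours in (1, 24):
        wait = queue_wait_seconds(1, 10, gpus=9, elapsed_seconds=hours * 3600)
        print(f"9 GPUs, after {hours} h: wait is about {wait:.0f} s")
```

Under these assumptions, being short by a single GPU out of 10 already gives a wait of roughly 400 seconds after one hour and roughly 2.7 hours after a full day of steady traffic. Scale the request rate up and the shortfall with it, and you get to "hours and days" very quickly.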

(Potential) priority shift

Image generation requires different pretraining, different post-training, analytics, people etc. And the same GPUs.

That's a major priority shift for a company (and maybe the field overall), while the compute they have is pretty much fixed and won't change anytime soon.

I mentioned I work in the field. And there's kind of an open secret: text generation? Barely earns money. Most of the revenue (to my knowledge) comes from private deployments for organizations that need to host models within their own infrastructure, rather than from cloud API requests.

Image generation? Well, there are very, very lucrative use cases in B2B. B2C, as you can see, also has a lot of interest.

Seeing how Sam is product-oriented first...

This might buy us some time. At least until GPU production ramps up more.

There are companies, of course, that don't dabble in image generation. Anthropic does not care about it at all, at least for now. I expect them to potentially take the lead in frontier models and hold it for some time. There's China, too, with DeepSeek, which does not have image generation.

To reflect that expectation, I bought 1k shares in this market betting on Anthropic for 10c. I expect that probability to grow substantially in the coming year.

Overall, my timelines have become a few years longer.
