Published on April 1, 2025 9:47 PM GMT

The EA/rationality community has struggled to identify robust interventions for mitigating existential risks from advanced artificial intelligence. In this post, I identify a new strategy, the WAIT (Wasting AI researchers' Time) Initiative, for delaying the development of advanced AI while saving lives roughly 2.2 million times [-5 times, 180 billion times] as cost-effectively as leading global health interventions. This post will discuss the advantages of WAITing, highlight early efforts to WAIT, and address several common questions.

Theory of Change

Our high-level goal is to systematically divert AI researchers' attention away from advancing capabilities towards more mundane and time-consuming activities. This approach simultaneously (a) buys AI safety researchers time to develop more comprehensive alignment plans while also (b) directly saving millions of life-years in expectation (see our cost-effectiveness analysis below). Some examples of early interventions we're piloting include:

- Bureaucratic Enhancement: Increasing administrative burden through strategic partnerships with university IRB committees and grant funding organizations. We considered further coordinating with editorial boards at academic journals but to our surprise they seem to have already enacted all of our protocol recommendations.
- Militant Podcasting: Inviting leading researchers at top AI organizations to come onto podcasts with enticing names like "Silicon Savants" and "IQ Infinity", ensuring each recording runs 4+ hours and requires multiple re-recordings due to technical difficulties.
- Conference Question Maximization: We plan to deploy trained operatives to ask rambling, multi-part questions during Q&A sessions at leading ML conferences that begin with "This is more of a comment than a question..." and proceed until their microphones are snatched away.
- Twitter/X Stupidity Farming: Our novel bots have been trained to post algorithmically optimized online discourse that consistently confuses the map for the territory. Also they won't shut up about Elon and "sexual marketplace dynamics" but apparently there is no limit to how long everyone will find these topics interesting for.
- Romantic Partnership Engineering (RPE): One shallow investigation suggests Bay Area researchers could be uniquely receptive to polyamory and other relationship structures requiring increased communication and processing time. We're placing strategic emphasis on recruiting women from the VH (very hot) community which, at first glance, appears to present a strong talent pool for these programs.
- Targeted Nerdsniping: It's also well-known that substantial overlap exists between the AI community and the HN (huge nerd) community. WAIT interventions will aim to exploit this overlap through a new groundbreaking partnership with EA (Electronic Arts). Our venture fund has already acquired minority stakes in EA, allowing us to influence video game release schedules to coincide with major AI conference submission deadlines. It's in the game.

Cost-Effectiveness Analysis

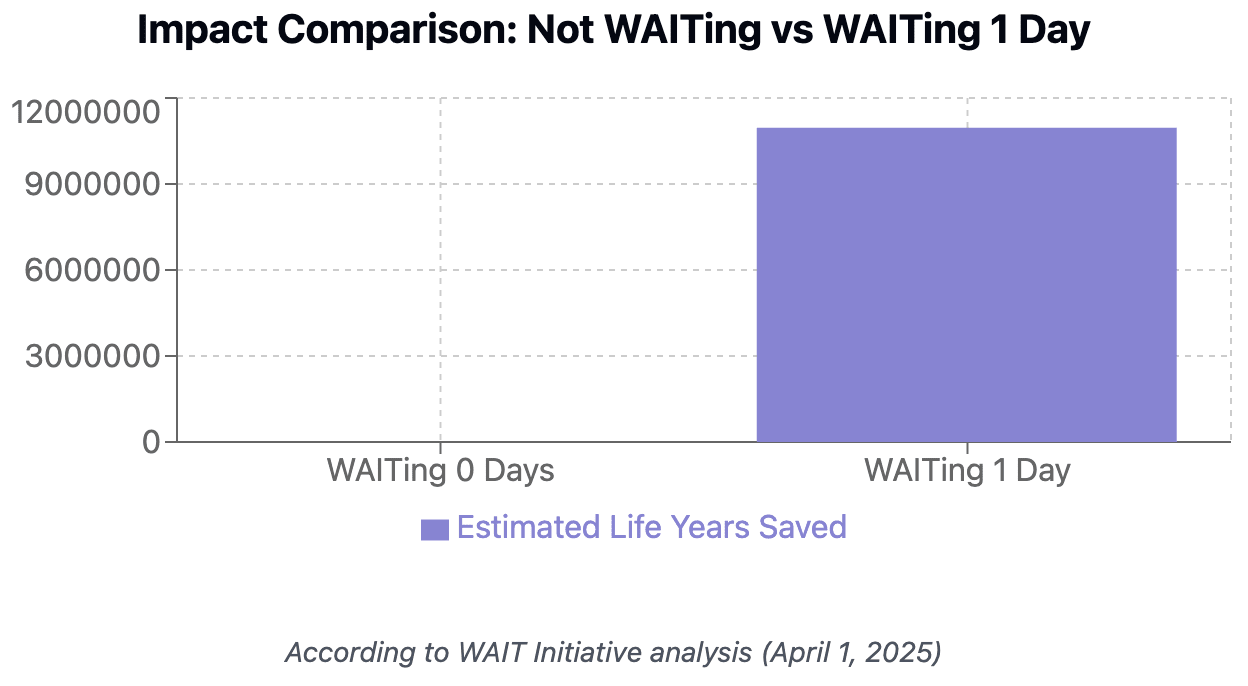

To efficiently estimate existential risk from AI, consider the only two possibilities: AI extinction or survival. We thus derive an existential risk parameter of 50%. Multiplying by ~8 billion human beings on Earth and by 1/365 of a year (per day saved), we estimate ~10,958,904 years of human life could be saved per day of WAITing. This figure is further evidence:

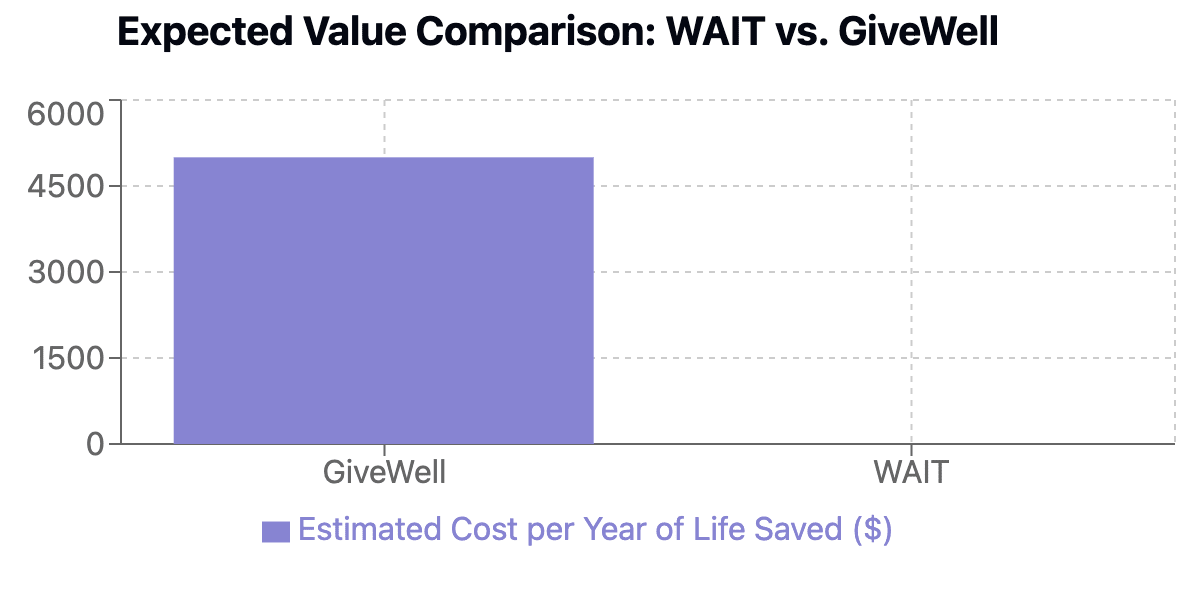
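
For readers who want to check the arithmetic, here is a minimal sketch of the life-years estimate using only the inputs stated above (a 50% risk parameter, ~8 billion people, and 1/365 of a year per day saved). The function and parameter names are illustrative assumptions, not part of the original analysis.

```python
def life_years_saved_per_day(risk, population, days_per_year=365):
    """Expected life-years saved per day of delay, per the post's formula.

    risk: the existential risk parameter (the post uses 0.5)
    population: number of living humans (the post uses ~8 billion)
    days_per_year: converts one day saved per person into years
    All names here are hypothetical; only the inputs come from the post.
    """
    return risk * population / days_per_year


# Inputs as stated in the post.
estimate = life_years_saved_per_day(risk=0.5, population=8_000_000_000)
print(f"{estimate:,.0f}")  # prints "10,958,904"
```

The result matches the ~10,958,904 figure quoted above; changing any of the three inputs scales the estimate linearly.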

Our analyses suggest the most effective interventions could cost approximately $25k to WAIT for 1 effective researcher day, making WAIT approximately 2.2 million times as cost-effective as leading GiveWell charities. Further evidence for this claim:

Answers to Common Questions

- "How do you measure success?" We've developed a novel Stagnation of AI Frontier Threats Index (SAIFTI), which incorporates factors like the ratio of Tweets to GitHub commits among employees at leading AI labs.
- "What are the primary risks?" We think the primary risk WAITing poses is something Streisand-ish; for example, our fake podcasts keep accidentally blowing up - apparently podcast listeners have near infinite patience for listening to two dudes just talkin'. We plan to dilute the audio quality as a band-aid solution in the short term.
- "How can I contribute?" Our most urgent need is for volunteers willing to schedule and cancel multiple coffee chats with AI researchers 5 minutes after the scheduled meeting time. We've found this to be particularly effective, but it requires a consistent volunteer rotation.
- "Isn't this post kinda infohazardous?" We think it's the exact opposite; AI researchers' time spent reading this post might well have come at the cost of a substantive technical insight. In other words, you're welcome for the additional four minutes, humanity.

If you're an AI researcher wanting to learn more about the WAIT Initiative, we encourage you to reach out to us; we'd love to schedule a time to get coffee and chat about it with you.
