Gemini 2.5 Pro

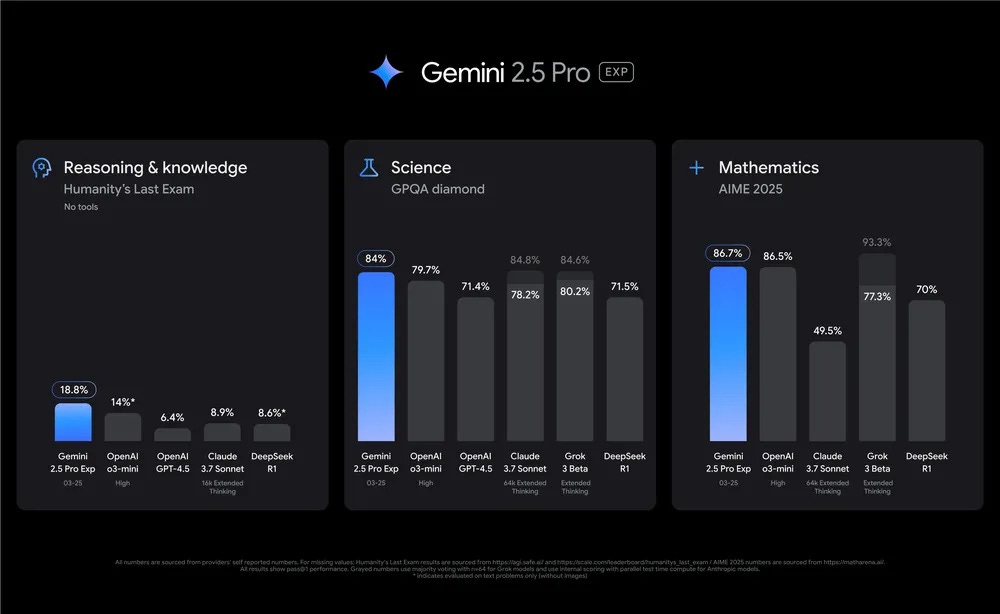

Google dropped Gemini 2.5 Pro, its latest reasoning model, and its benchmark scores are deeply impressive.

What to watch:

Going by the benchmarks, Gemini 2.5 Pro is a GPT-4.5-class model, yet it seems to be garnering far less attention than GPT-4.5 or even Claude 3.7 Sonnet (though early reviews are quite good).

Google's ever-growing array of models, from Gemini Flash to Gemini Thinking Experimental to its Gemma series, likely contributes to that.

There's also the fact that growing benchmark saturation makes it harder to break through with a compelling model alone; increasingly, good product design and UX are what it takes to go viral.

Elsewhere in frontier models:

Anthropic has developed a new analysis tool for understanding how large language models process information and make decisions.

DeepSeek has switched to an MIT license for its latest DeepSeek-V3-0324 release, while Alibaba has released its Qwen2.5-VL-32B model under an Apache 2.0 license.

And OpenAI has announced support for Model Context Protocol, Anthropic's open-source standard for connecting AI models to data sources.

Elsewhere in the FAANG free-for-all:

Microsoft is introducing two new AI reasoning agents, Researcher and Analyst, for Microsoft 365 Copilot, launching in April.

Google has launched real-time video features for Gemini Advanced that can analyze live camera feeds and screen sharing.

Apple is exploring adding cameras to Apple Watches while developing its own AI models for Visual Intelligence.

And interviews reveal Google's intense two-year scramble to compete with OpenAI, including organizational changes and reduced AI safeguards.

Ghiblification

In the aftermath of GPT-4o's new image capabilities, the internet has been swamped with images of people, places, and things in the style of Studio Ghibli.

Between the lines:

For a deep dive on the model and what it might mean for the industry, check out Wednesday's post.

In a break from DALL-E's policy, the new 4o model can generate images of public figures; it's up to the public figures themselves to opt out.

The trend is on its last legs (especially now that the White House has bizarrely joined in), but given how good 4o is at this sort of thing, my guess is we’re going to see plenty more AI-driven fads.

And yet there is also something tragic (if not egregious) about Hayao Miyazaki, the co-founder of Studio Ghibli, taking a strongly anti-AI stance (he once called an AI-generated animation demo "an insult to life itself") and now seeing his signature style unleashed across the web.

Elsewhere in OpenAI:

OpenAI's model behavior lead indicates the company is taking a more nuanced stance on sensitive content, moving away from blanket refusals toward preventing specific harms.

OpenAI announces changes to its leadership structure.

OpenAI enhances ChatGPT's Advanced Voice Mode to be more engaging and concise while reducing interruptions.

And OpenAI partners with MIT to release new research on how ChatGPT use affects users' emotional well-being, including differences by gender.

Buildout bubble

After months of lavish infrastructure spending by US tech giants, Alibaba chairman Joe Tsai warns that they may be overinvesting in AI data centers, potentially creating a bubble as construction outpaces actual demand.

The big picture:

The numbers are hard to ignore: four tech companies alone (Alphabet, Amazon, Meta, and Microsoft) have committed to spending over $300 billion on data center infrastructure this year.

Tsai is questioning whether these hundreds of billions are necessary right now, especially as some projects are being built speculatively without secured customers.

The warning seems prescient, given reports that up to 80% of Chinese data centers are sitting idle and that Microsoft appears to be walking away from new data center projects.

Elsewhere in AI anxiety:

GitLab faces investor lawsuits (again) over allegedly misleading claims about its AI capabilities.

An a16z-backed AI startup called 11x is accused of using fake customer testimonials and manipulating financial data.

Open-source projects report that AI crawlers are overwhelming their infrastructure with bot traffic.

A federal judge allows the NYT's copyright lawsuit against OpenAI to proceed while narrowing some claims.

And Cloudflare introduces AI Labyrinth, a system designed to confuse and drain resources from AI crawlers that ignore crawling restrictions.

Things happen

Perplexity partners with Firmly.ai to enable direct shopping through search results. AI search is more of a UX revamp than a Google replacement. Nvidia debuts G-Assist, a local gaming AI chatbot. Grok comes to Telegram for Premium users. Databricks unveils Test-time Adaptive Optimization for LLMs. China floods the market with low-cost AI services in the wake of DeepSeek. How Microsoft lured away Inflection's staff. Reid Hoffman explains why AI gloomers are wrong. An off-Broadway theater offers AI-powered live translations in 60 languages. Scientists are using AI to speed up drug repurposing. Why Claude still can't beat Pokémon. OpenAI and Meta court India's Reliance for partnerships. Perplexity wants to buy TikTok. AOL's AI produces terrible captions for an attempted-murder story. Chinese AI startups scramble to adapt after DeepSeek's rise. That viral JD Vance audio about Elon Musk is fake. Nvidia nears a deal to acquire GPU server rental startup Lepton AI. Anthropic and Databricks ink a $100M partnership. Synthesia creates a $1M stock pool to pay AI actors. Chinese server maker warns of Nvidia H20 chip shortages. UK MPs doubt public sector AI plans. Meta tests AI-generated Instagram comments. Instagram's "Brainrot" AI is leading the way in monetizing disturbing content. AI companies continue to court Trump's favor. North Korea launches an AI hacking unit. Project Aardvark reimagines AI weather prediction. Most AI value will come from broad automation. Anthropic releases economic insights from Claude 3.7 Sonnet. Google studies brain-LLM connections. Companies lobby Trump to loosen AI chip export rules. Google adds AI vacation planning features. Cleo AI to pay $17M settlement over deceptive practices. Leaked data reveals a possible Chinese AI censorship system. Anthropic wins an early round in a music copyright case. A new "AGI" test stumps AI models. Survey reveals how developers actually use AI. A look at LibGen, the pirate library allegedly used to train AI. Microsoft studies AI's impact on critical thinking.