Scientific publishing is confronting an increasingly provocative issue: what do you do about AI in peer review?

Ecologist Timothée Poisot recently received a review that was clearly generated by ChatGPT. The document had the following telltale string of words attached: “Here is a revised version of your review with improved clarity and structure.”

Poisot was incensed. “I submit a manuscript for review in the hope of getting comments from my peers,” he fumed in a blog post. “If this assumption is not met, the entire social contract of peer review is gone.”

Poisot’s experience is not an isolated incident. A recent study published in Nature found that up to 17% of reviews for AI conference papers in 2023-24 showed signs of substantial modification by language models.

And in a separate Nature survey, nearly one in five researchers admitted to using AI to speed up and ease the peer review process.

We’ve also seen a few absurd cases of what happens when AI-generated content slips through peer review, the very process designed to uphold the quality of research.

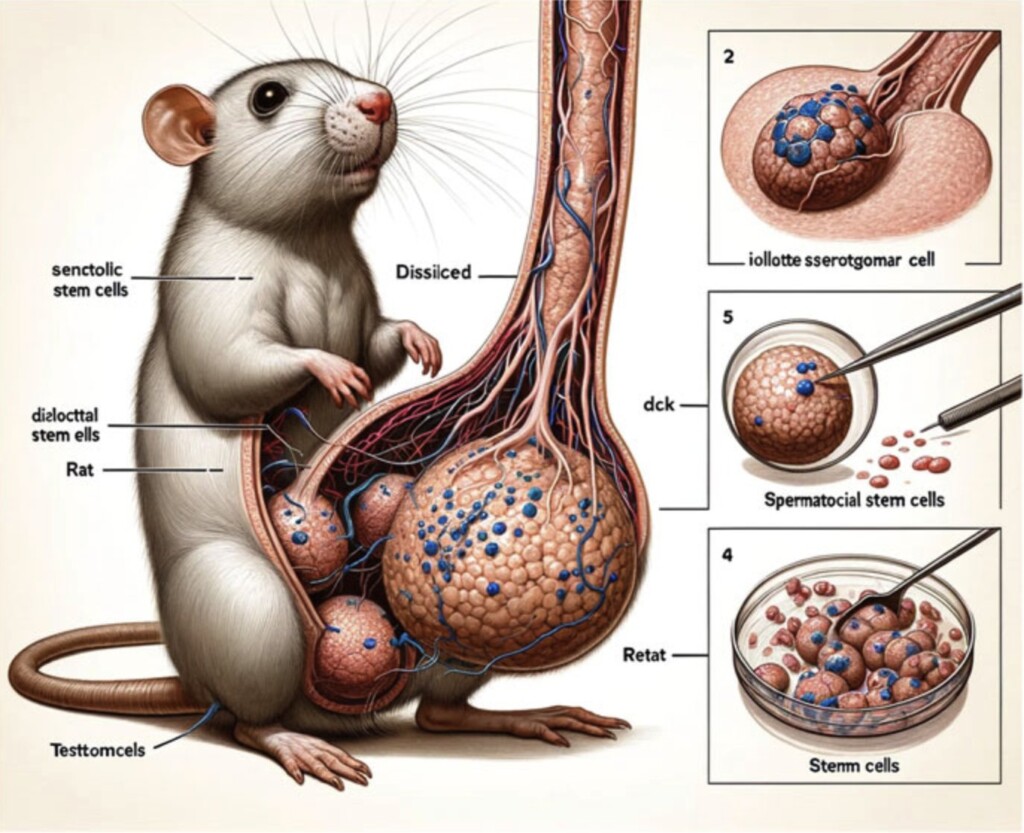

In 2024, a paper published in the journal Frontiers in Cell and Developmental Biology, which explored highly complex cell signaling pathways, was found to contain bizarre, nonsensical diagrams generated by the AI image tool Midjourney.

One image depicted a deformed rat, while others were just random swirls and squiggles, filled with gibberish text.

Commenters on Twitter were aghast that such obviously flawed figures made it through peer review. “Erm, how did Figure 1 get past a peer reviewer?!” one asked.

In essence, there are two risks: a) peer reviewers using AI to review content, and b) AI-generated content slipping through the entire peer review process.

Publishers are responding to the issues. Elsevier has banned generative AI in peer review outright. Wiley and Springer Nature allow “limited use” with disclosure. A few, like the American Institute of Physics, are gingerly piloting AI tools to supplement – but not supplant – human feedback.

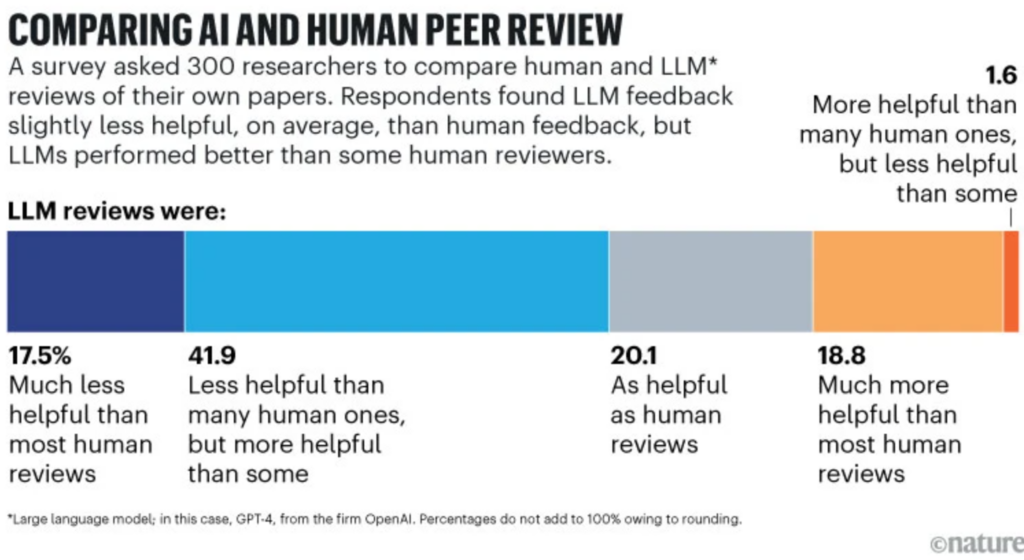

However, generative AI’s allure is strong, and some see real benefits if it is applied judiciously. A Stanford study found that 40% of scientists felt ChatGPT reviews of their work could be as helpful as human ones, and 20% felt they could be more helpful.

Academia has revolved around human input for millennia, though, so the resistance is strong. “Not combating automated reviews means we have given up,” Poisot wrote.

The whole point of peer review, many argue, is considered feedback from fellow experts – not an algorithmic rubber stamp.

The post AI stirs up trouble in the science peer review process appeared first on DailyAI.