Published on March 20, 2025 4:18 PM GMT

Epistemic status: small exploration without previous predictions, results low-stakes and likely correct.

Introduction

As a personal exercise for building research taste and experience in AI safety, and specifically interpretability, I have done four minor projects, all building on previously written code. I did not formulate hypotheses or expectations beforehand; the aim was merely to check the low-hanging fruit for anything interesting. In the end, they did not yield major insights, but I hope they will be of some use and interest to people working in these domains.

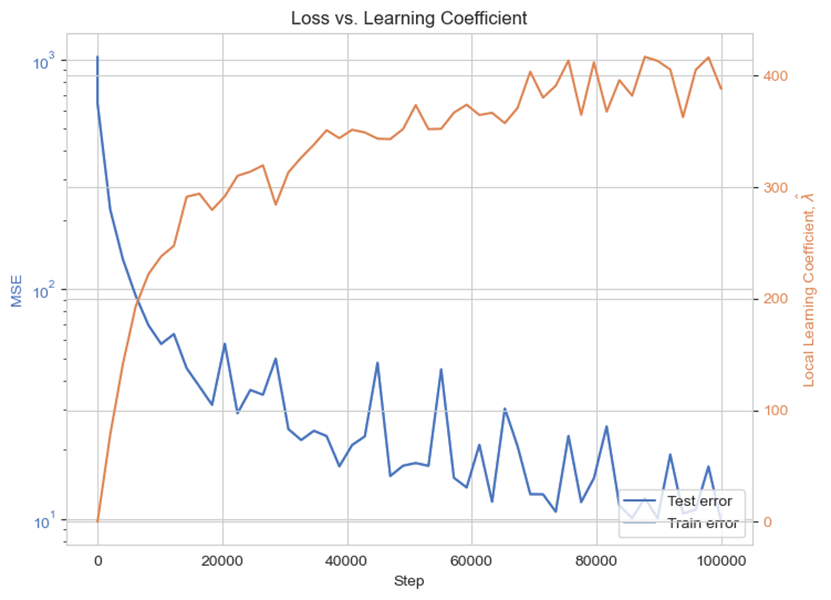

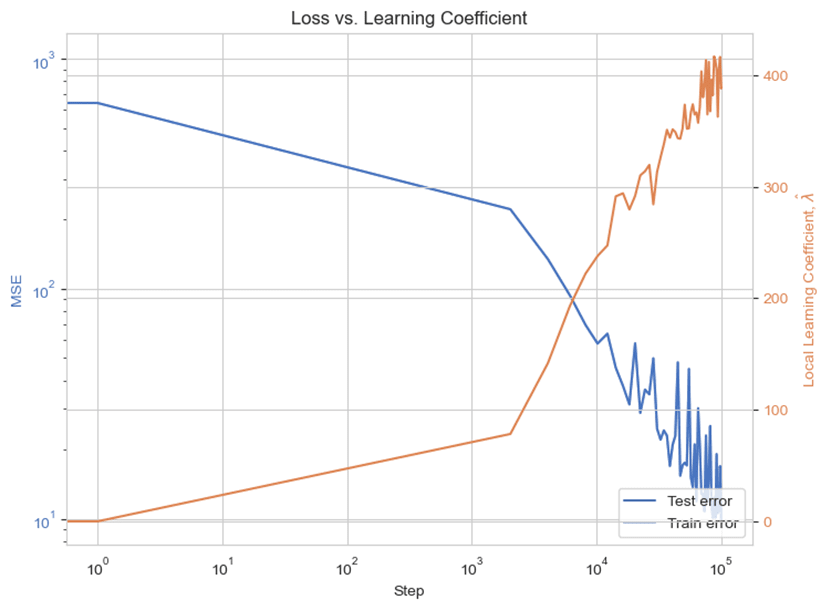

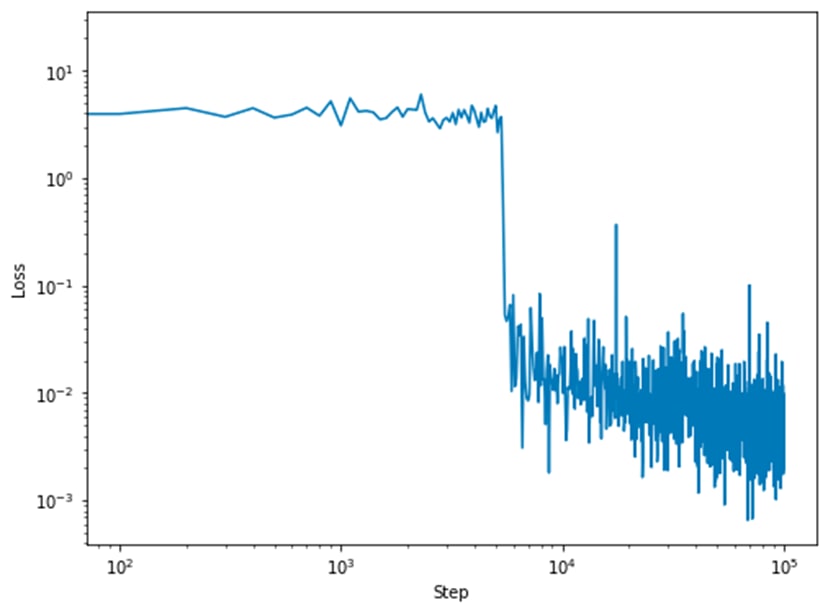

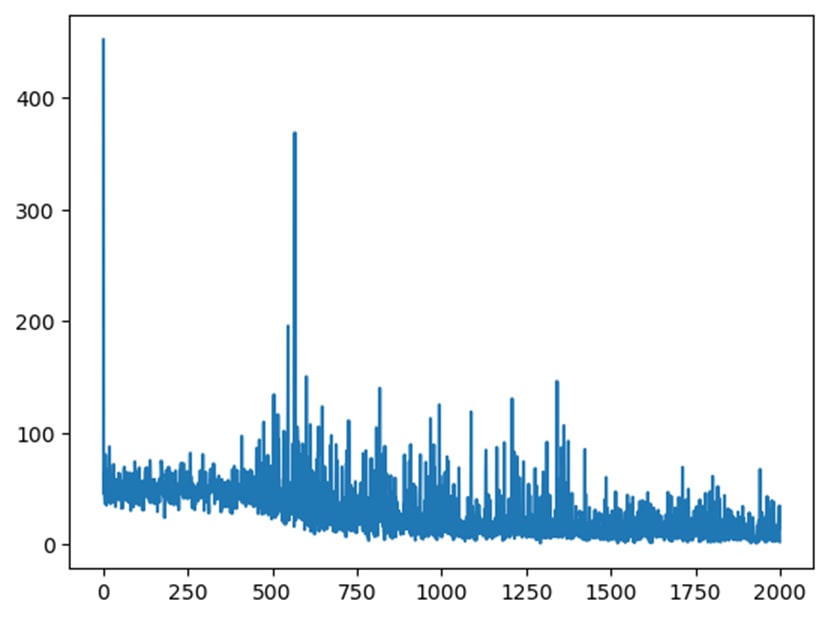

This is the fourth project: checking how the local learning coefficient (LLC) behaves for toy models containing LayerNorm and sharp transitions in the loss landscape (this project from Timaeus).

TL;DR results

LLC behaves as expected. Large drops in loss are mirrored by large spikes in the LLC.

Methods

The basis for these findings is the DLNS notebook of the devinterp library, plus the example code given. Notebooks and graphs can be found here.
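For readers who have not met the quantity being tracked, here is a minimal sketch of the usual LLC estimator, n·β·(E[L(w)] − L(w*)) with β = 1/log(n), where the expectation is taken over samples drawn near w*. This is not the devinterp code and not the setup used in the notebooks: the toy quadratic losses, the function names, and all hyperparameters are assumptions chosen for illustration, and it uses full-gradient Langevin steps rather than minibatch SGLD.

```python
import numpy as np

def estimate_llc(loss_fn, grad_fn, w_star, n=1000, gamma=1.0,
                 step_size=1e-4, num_chains=8, num_steps=5000,
                 burn_in=500, seed=0):
    """Toy LLC estimate: n*beta * (E[L(w)] - L(w_star)), with beta = 1/log(n)."""
    rng = np.random.default_rng(seed)
    beta = 1.0 / np.log(n)
    w_star = np.asarray(w_star, dtype=float)
    w = np.tile(w_star, (num_chains, 1))  # every chain starts at w_star
    sampled_losses = []
    for step in range(num_steps):
        # Gradient of the negative log of the localized, tempered posterior
        # exp(-n*beta*L(w) - gamma/2 * ||w - w_star||^2)
        drift = n * beta * grad_fn(w) + gamma * (w - w_star)
        noise = rng.normal(size=w.shape)
        w = w - 0.5 * step_size * drift + np.sqrt(step_size) * noise
        if step >= burn_in:
            sampled_losses.append(loss_fn(w).mean())
    return n * beta * (np.mean(sampled_losses) - loss_fn(w_star[None, :])[0])

def make_quadratic(active_dims):
    """Hypothetical toy loss: 0.5 * sum of w_i^2 over the first `active_dims` coordinates."""
    def loss_fn(w):
        return 0.5 * np.sum(w[:, :active_dims] ** 2, axis=1)
    def grad_fn(w):
        grad = np.zeros_like(w)
        grad[:, :active_dims] = w[:, :active_dims]
        return grad
    return loss_fn, grad_fn

w_star = np.zeros(4)
llc_two_active = estimate_llc(*make_quadratic(2), w_star)
llc_four_active = estimate_llc(*make_quadratic(4), w_star)
print(f"2 active directions: {llc_two_active:.2f}")
print(f"4 active directions: {llc_four_active:.2f}")
```

For a quadratic loss the LLC is half the number of directions the loss actually constrains, so the two estimates should land roughly at 1 and 2. A higher value means the loss pins down more directions around the minimum, which is the sense in which a rising LLC is read as increasing model complexity.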

Results

There does not seem to be anything peculiar about the progression of the LLC. The sudden drop in loss was mirrored by a sudden rise in LLC, showing a highly compartmentalized loss landscape.

Discussion

This was an attempt to check the validity of the LLC for sharply delineated loss landscapes, and to see whether any strange results appear in the loss landscape of a model with LayerNorm, a feature that the interpretability community dislikes for many reasons. The LLC behaved as expected.
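The reasons are not listed here, but one property that often comes up is that LayerNorm is not a linear map, which complicates analyses that rely on decomposing activations additively. The sketch below is a plain NumPy LayerNorm without learned scale or bias (an assumption for brevity, not the model used in the notebooks). It checks two things: rescaling the input leaves the output almost unchanged, and the output of a sum is not the sum of the outputs.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """LayerNorm over the last axis, without learned scale or bias."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
x = rng.normal(size=8)
y = rng.normal(size=8)

# Rescaling the input barely changes the output (exactly so if eps were 0).
scale_invariant = np.allclose(layer_norm(3.0 * x), layer_norm(x), atol=1e-4)
# LayerNorm of a sum differs from the sum of LayerNorms, so it is not linear.
additive = np.allclose(layer_norm(x + y), layer_norm(x) + layer_norm(y), atol=1e-4)
print("scale invariant:", scale_invariant)
print("additive:", additive)
```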

I must mention that the loss shown on the project page does not correspond one-to-one to the losses I recorded for the given model. This might be a problem on my end, but I doubt it is a large issue.

Conclusion

This confirmation that LLC acts as it is supposed to even in strange loss regimes is encouraging, indicating that it truly reflects fundamental properties of the network training process.

Acknowledgements

I would like to thank the original Timaeus team for starting this research direction, establishing its methods, and writing the relevant code.
