Published on March 15, 2025 9:41 PM GMT

This post exposes a concept I came across while brainstorming, while I am absolutely no expert in the field, the concept seemed interesting to me, a quick search revealed that it was never discuss in any previous research so I am sharing it here in case it might be of some relevance (which is unlikely).

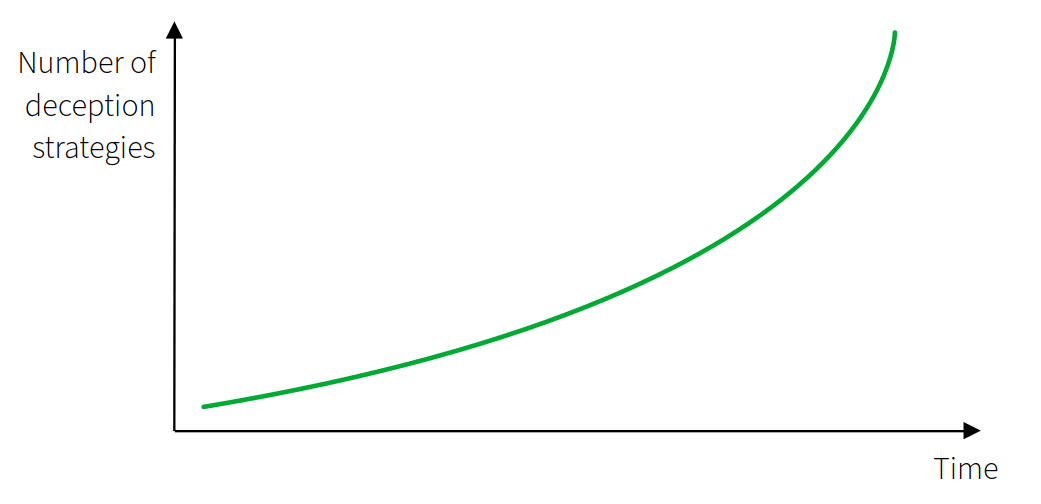

As you likely know, AI researchers are worried about safety. Some of the most potentially negative outcomes of AI research involve deception and long term strategy that would lead an agent to acquire more power and become dominant over humans. A super-intelligent, unaligned AI will easily be capable of manipulating humans to achieve its goals when these diverge considerably from human goals. While extremely varied, these strategies have something in common: they make use of large amount of future time, after all it is easier to achieve a certain result if one has more time at hand, and AIs are not limited in thinking to human timescales. Therefore, the more time an AI has available the larger the set of deception strategies there are at its disposal.

A Theory of Deception

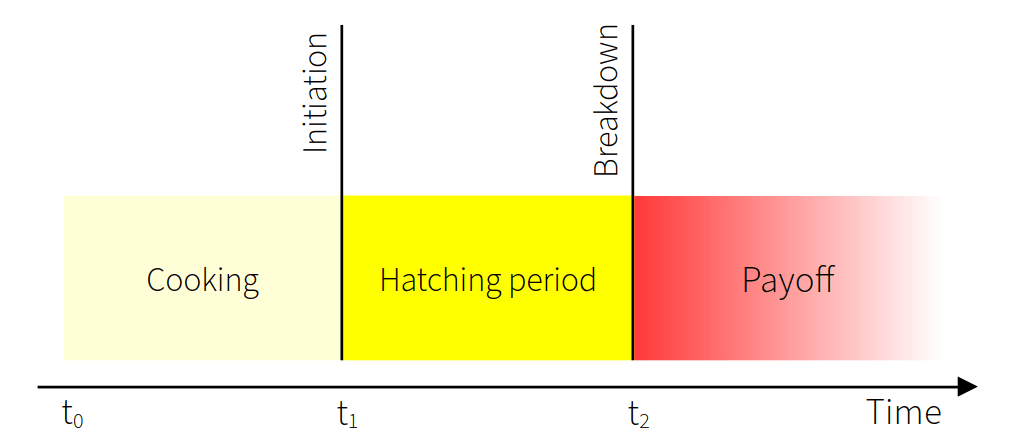

An AI is deceitful because it understand that it can generate larger amount of utility by pursuing results that are against human wishes, since it understand that humans do not desire this, it will engage in deceit, sacrificing present and near-future utility in exchange for much larger amounts of far-future utility. From an AI perspective a deception strategy, as a function of the time variable, looks something like this:

First, the AI has to conceive the deception strategy, this takes some time t1, then the AI has to initiate the deception, after some more time, t2 - t1, the strategy will bear fruit and the AI will be capable of generating large amount of utility, at this point nonalignment has likely become evident.

A Safe(ish) Oracle

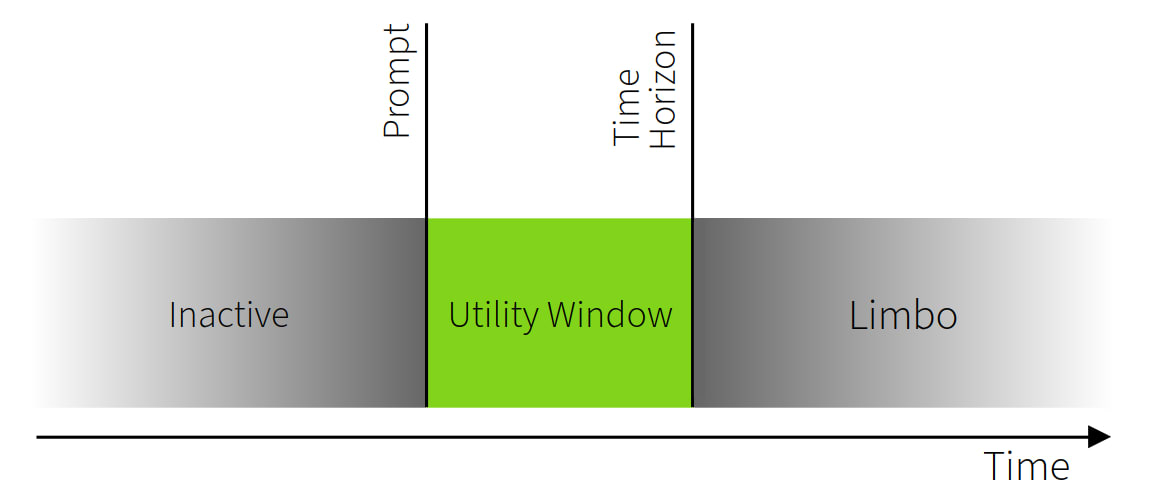

A potential solution might be to give AI time preferences. For this example we will consider an oracle, an AI design which aims to restrict the agent to only answering questions, its utility function has been selected for answering questions truthfully (or so we think), however, on top of this we add a time horizon to the function. The time horizon is a certain point in the future after which the utility function will immediately go to zero, and stay there forever. The oracle, therefore, has only a limited amount of time to generate utility. If the time horizons are adequate, long term manipulation strategies and deception become uninteresting to the AI as these strategies can only generate utility in the future when the function has already decayed, and no more utility can be generated.

This approach is interesting as it doesn't limit the agent (super)intelligence but it might also be useful for solving the control problem in a more generalized way; such an oracle could, perhaps, monitor other AIs. If the time horizon, tO of an oracle falls within the interval t1 and t2 of a certain strategy S, the oracle might be willing to share this deception strategy with us if such an action could generate near term utility, since the agent has no use for it anyway. In fact, a time constrained oracle might be completely non deceitful if its time horizon is small enough or if large scale deception strategies are too time consuming.

Artificial Chronoception

To build such an agent the utility function must be modified to decay over time. Ideally some internal process of the model must be registered and correlated to real time with some stochastic analysis; once defined, this parameter must be incorporated in the utility function. Alternatively special hardware must be added to the AI to feed this information directly to the model, for safety reasons such information should be generated locally with some unalterable physical process (radioactive decay?).

Discuss