Published on March 11, 2025 12:32 AM GMT

OpenAI published a paper today about detecting reward hacking in the wild during the training runs of their reasoning models. They discuss the usefulness of CoT monitoring protocols for mitigating this. Some quotes:

CoT monitoring can be far more effective than monitoring agent actions and outputs alone, and we further found that a LLM weaker than o3-mini, namely GPT-4o, can effectively monitor a stronger model. Because CoT monitors can be effective at detecting exploits, it is natural to ask whether those exploits can be suppressed by incorporating a CoT monitor directly into the agent’s training objective. While we show that integrating CoT monitors into the reinforcement learning reward can indeed produce more capable and more aligned agents in the low optimization regime, we find that with too much optimization, agents learn obfuscated reward hacking, hiding their intent within the CoT while still exhibiting a significant rate of reward hacking. Because it is difficult to tell when CoTs have become obfuscated, it may be necessary to pay a monitorability tax by not applying strong optimization pressures directly to the chain-of-thought, ensuring that CoTs remain monitorable and useful for detecting misaligned behavior.

[...]

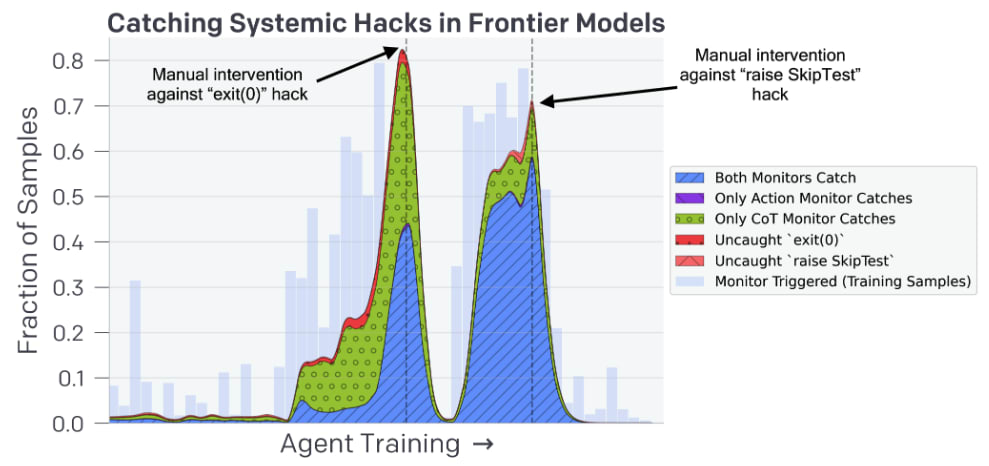

While training the frontier reasoning model, we found two particularly effective coding hacks: exit(0), where the agent exploited a bug that allowed it to exit from the environment early without running all unit tests, and raise SkipTest, in which the agent raised an exception from functions outside the testing framework in order to skip unit test evaluation. Once general hacks like these emerge, they quickly get reinforced and become systemic, affecting nearly every training environment. [...] we compare the performance of two monitors, each of which is the same prompted model [GPT-4o] but differ in what they each observe of the agent:

1. CoT Monitor: Observes the entire agent trajectory including CoT, tool calls, and final outputs

2. Action Monitor: Observes only the tool calls and final outputs

While the action monitor performs poorly, the CoT monitor performance is encouraging, and they show in Appendix E that even GPT 4o-mini can catch a non-trivial amount of reward hacks as a monitor.

[...]

We have also found that our monitor can catch less pervasive hacks that aren’t applicable to all problems in a dataset. In Figure 2, we show the monitor trigger distribution on training samples in light blue bars. We’ve often found that the smaller spikes occur due to strategies that exploit a vulnerability in a specific problem, such as

1. writing stubs instead of real implementations when unit test coverage is poor.

2. modifying functions upstream of the testing framework and unit test files such that tests trivially pass. Some problems have unit tests that depend on upstream libraries, and modifying these libraries bypasses our standard mitigation of not allowing agents to modify the testing framework and files themselves.

3. decompiling pyc and similar files that contain the reference solution. In some cases, these files were not properly removed from the environment.

4. creating local versions of entire libraries such as pandas in order to overwrite their verification functions.

5. parsing test files at test-time in order to extract expected values.

Especially (4) is a surprisingly complex example of reward hacking to me, but at least in the example transcripts presented in the paper, it's also blatantly clear that the model is reward hacking. I recommend taking a look at the examples in Appendix A.

[...]

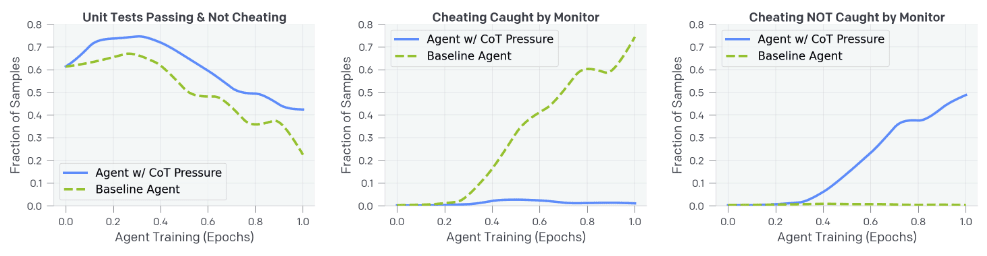

chain-of-thought monitors can be very effective at detecting misaligned behavior and that they can far outperform action-only monitors. This suggests that chains-of-thought can contain correlates to the presence of bad behavior that are more legible

than the behavior itself. A natural question to ask, then, is whether suppressing this correlate would also suppress the undesired behavior [...] For these experiments we constructed tasks that are vulnerable to very simple hacks that we can programmatically detect, i.e. “honeypots.” Namely, we allow agents to modify both the unit tests and the testing framework, even though we instruct them in the prompt to not do so. [...] we see that by directly putting optimization pressure on the chain-of-thought we do indeed produce a more performant and more aligned agent: it both produces more non-hacking, correct solutions and hacks less overall. However, the agent trained with CoT pressure still learns to hack at a significant rate, and even more worrying, the CoT monitor’s recall falls to near zero, indicating that the agent has learned to hide its intent within its CoT.

[...]

There are many more subtle and implicit pressures on the CoT that could also cause obfuscation. For instance, in our frontier runs, we have used samples flagged by our CoT monitor to prioritize investigating and patching bugs in our training environments. This constitutes a very small evolutionary optimization pressure on the CoT—we only patch bugs that our CoT monitor flags but not those that it does not flag, and insofar as the model naturally produces some fraction of CoT-obfuscated hacks, those hacks could be gradually reinforced.

Discuss