Published on March 10, 2025 7:18 PM GMT

On March 5, 2025, Dan Hendrycks, Eric Schmidt, and Alexandr Wang published “Superintelligence Strategy”, a paper that suggests a number of policies for national security in the era of AI. Central to their recommendations is a concept they call “Mutual Assured AI Malfunction” (MAIM), which is meant to be a deterrence regime resembling nuclear mutual assured destruction (MAD). The authors argue that MAIM will deter nations from building “destabilizing” AI through the threat of reciprocal sabotage.

This is a demonstrably false concept and a poor analogy, because it fails to yield a strategy that settles into a Nash equilibrium. Instead, MAIM's uncertain nature increases the risk of miscalculation and encourages internecine strife. It is a strategy that would likely break the stability-instability paradox and is fraught with the potential for misinterpretation.

One of the key miscalculations is the paper’s treatment of the payoffs in the event of superintelligence. Rather than treating the first nation to reach superintelligence as the winner of a winner-take-all contest, we should think of it as something I call the “Jackpot Jinx.” The term captures how the allure of an enormous (even potentially infinite) payoff from a breakthrough in superintelligence can destabilize strategic deterrence. Essentially, the prospect of a "jackpot" might “jinx” stability by incentivizing preemptive or aggressive actions.

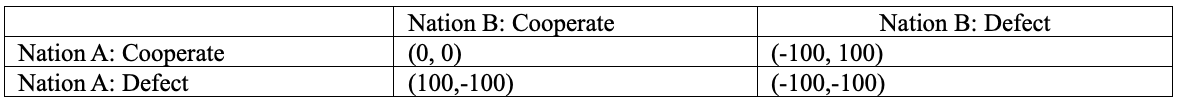

Let’s start by discussing why nuclear mutual assured destruction (MAD) yields a Pareto-optimal Nash equilibrium (that is, an equilibrium where no player can be made better off without making another player worse off). Under MAD, the inescapable threat of a retaliatory nuclear strike ensures that any unilateral move to initiate conflict would lead to mutually catastrophic consequences. The idea is that over time, and over many potential conflicts, both nations recognize that refraining from a first strike is the rational strategy, because any deviation would trigger an escalation that leaves both parties far worse off (i.e., both countries are nuked).

The equilibrium where neither nation initiates a nuclear attack becomes self-enforcing: it is the best collective outcome given the stakes involved. Any attempt to deviate, such as launching a surprise attack, would break this balance and result in outcomes that are strictly inferior for both sides, making mutual restraint the Pareto-superior equilibrium in the strategic calculus of nuclear deterrence. You’ve probably seen this payoff matrix before:
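A minimal Python sketch of that matrix, assuming illustrative payoffs on the same scale: peace gives each side 100, and any strike, because retaliation is certain, leaves both sides at -100. The names `MAD`, `strict_nash_equilibria`, and `is_pareto_optimal` are hypothetical, written only for this example. The code looks for cells where every unilateral deviation leaves the deviator strictly worse off, then checks that mutual restraint is also Pareto-optimal.

```python
from itertools import product

# Hypothetical payoffs for illustration only: (row payoff, column payoff).
# -100 ~ "super dead", 100 ~ "super alive".
STRATEGIES = ["Refrain", "Strike"]
MAD = {
    ("Refrain", "Refrain"): (100, 100),
    ("Refrain", "Strike"): (-100, -100),  # certain retaliation: both destroyed
    ("Strike", "Refrain"): (-100, -100),
    ("Strike", "Strike"): (-100, -100),
}


def strict_nash_equilibria(game, strategies):
    """Cells where any unilateral deviation leaves the deviator strictly worse off."""
    equilibria = []
    for row, col in product(strategies, repeat=2):
        row_pay, col_pay = game[(row, col)]
        row_ok = all(game[(alt, col)][0] < row_pay for alt in strategies if alt != row)
        col_ok = all(game[(row, alt)][1] < col_pay for alt in strategies if alt != col)
        if row_ok and col_ok:
            equilibria.append((row, col))
    return equilibria


def is_pareto_optimal(game, cell):
    """True if no other cell helps one player without hurting the other."""
    a, b = game[cell]
    for other, (x, y) in game.items():
        if other != cell and x >= a and y >= b and (x > a or y > b):
            return False
    return True


eq = strict_nash_equilibria(MAD, STRATEGIES)
print(eq)                           # [('Refrain', 'Refrain')]
print(is_pareto_optimal(MAD, eq[0]))  # True
```

Mutual strike also passes a weaker, non-strict Nash test under these numbers, since once the other side has launched you are dead either way; restricting to strict equilibria isolates the self-enforcing restraint outcome.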

Here, just assume -100 is super dead and 100 is super alive. Now, there are some very important assumptions underpinning this stability that the MAIM doctrine fails to meet. Here are some that I found:

· Certainty vs. Uncertainty: we reach a stable outcome because nuclear retaliation is certain. That is, if someone launches a nuke at you, you are definitely launching back, and that almost certainly guarantees annihilation. MAIM can only offer an uncertain AI “malfunction” through sabotage. This encourages risk-taking behavior because there is no prospect of a certain response.

· Existential Threat vs. Variable Threat: under MAD, any nuclear strike risks obliterating the adversarial nation, so defecting is catastrophic. In contrast, MAIM’s sabotage only delays or degrades an AI project. That downside is not severe enough to deter aggressive actions.

· Clear Triggers vs. Subjective Triggers: when a nuke is launched, it’s clear. The bomb is coming. MAIM relies on subjective judgements of what counts as a “destabilizing AI project.” This level of subjectivity is dangerous because it invites miscalculation and unintended escalation.

· Symmetry vs. Asymmetry: MAD works because a nuke on your city is a nuke on my city; each side's weapons are roughly equivalent in destructive capability. MAIM has no such guarantee: cyberwarfare and other non-nuclear military capabilities are wielded unevenly by different countries.
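To make the first two points concrete, here is a rough expected-value sketch in Python. Every number in it is a hypothetical assumption: `p` is the probability that a deviation actually meets a response, `penalty` is what that response costs the deviator, `gain` is the payoff of an unanswered deviation, and `status_quo` is the payoff of restraint. Under MAD, `p` is effectively 1 and the penalty is existential; under MAIM, sabotage may fail and only sets a project back.

```python
def expected_deviation_payoff(p: float, penalty: float, gain: float) -> float:
    """Expected payoff of deviating when a response lands with probability p."""
    return p * penalty + (1 - p) * gain


def is_deterred(p: float, penalty: float, gain: float, status_quo: float) -> bool:
    """Deterrence holds only if deviating is worse, in expectation, than restraint."""
    return expected_deviation_payoff(p, penalty, gain) < status_quo


def breakeven_probability(penalty: float, gain: float, status_quo: float) -> float:
    """Smallest response probability at which deviating stops beating restraint."""
    # Solve p * penalty + (1 - p) * gain = status_quo for p (assumes gain > penalty).
    return (gain - status_quo) / (gain - penalty)


# MAD (hypothetical numbers): retaliation is certain and existential.
print(is_deterred(p=1.0, penalty=-100, gain=100, status_quo=100))  # True

# MAIM (hypothetical numbers): sabotage works half the time and only delays a project.
print(expected_deviation_payoff(p=0.5, penalty=-20, gain=150))  # 65.0
print(is_deterred(p=0.5, penalty=-20, gain=150, status_quo=50))  # False
print(round(breakeven_probability(penalty=-20, gain=150, status_quo=50), 3))  # 0.588
```

With the made-up MAIM figures, sabotage would have to succeed roughly 59% of the time before racing stops paying. With the MAD figures the breakeven probability is 0, so any credible chance of retaliation is enough.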

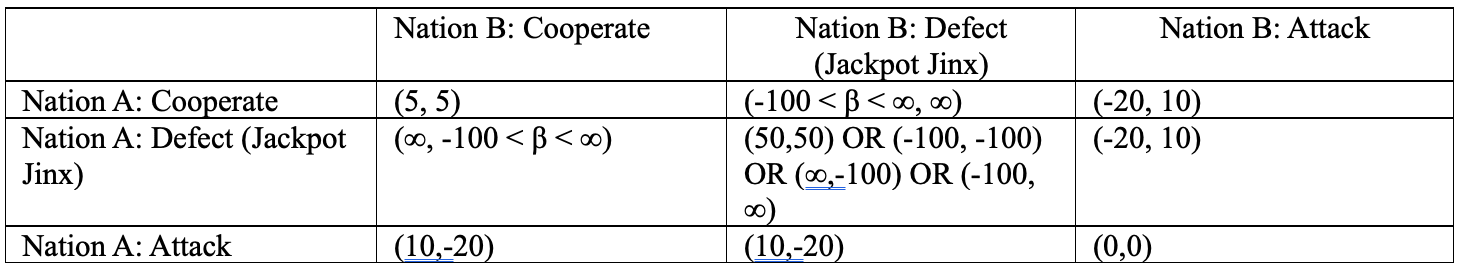

The "Jackpot Jinx" refers to the idea that, unlike nuclear war, superintelligence is not a single, certain negative outcome. Rather, it spans a spectrum from very bad things (omnicide) to profoundly good things (superabundance). Let’s take another stab at the payoff matrix with the Jackpot Jinx in mind:

Here I mean:

· Cooperate represents pursuing moderate, controlled AI development.

· Defect (Jackpot Jinx) symbolizes aggressively pursuing superintelligence, with the risky promise of a potentially infinite payoff.

· Attack denotes preemptive sabotage against a rival's AI project.

· "∞" represents the potentially unlimited positive outcome for the nation that achieves the "Jackpot Jinx."

· "β" is a variable representing the outcome for the other nation. β can range from very negative (e.g., -100) to very positive (approaching ∞, though likely less than the payoff for the "winning" nation).

The matrix shows that the “Cooperate” strategy is consistently dominated by “Defect (Jackpot Jinx)” due to the lure of an infinitely large (albeit uncertain) payoff. Even though “Attack” is risky, in a MAIM-governed world it becomes a more attractive option than simply cooperating.
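The dominance claim can be checked mechanically. This Python sketch is a toy built on assumptions, and its numbers are placeholders rather than figures from the original matrix: it models "∞" as `math.inf`, gives Defect (Jackpot Jinx) a hypothetical chance `P_JACKPOT` of paying off against each rival strategy (otherwise a hypothetical loss of -100), and gives Cooperate hypothetical finite payoffs, with β used when the rival defects.

```python
import math

JACKPOT = math.inf  # the text's "∞": unbounded payoff for the nation that "wins"
LOSS = -100         # hypothetical: what a failed race costs

# Hypothetical odds that an aggressive race hits the jackpot, by rival strategy.
# Attacks lower the odds but never to zero.
P_JACKPOT = {"Cooperate": 0.30, "Defect": 0.10, "Attack": 0.05}


def defect_payoff(q: float, loss: float = LOSS) -> float:
    """Expected payoff of Defect: JACKPOT with probability q, otherwise `loss`."""
    if not 0 < q <= 1:
        # 0 * inf is nan in IEEE floating point, so q = 0 is rejected.
        raise ValueError("q must be in (0, 1]")
    return q * JACKPOT + (1 - q) * loss


def cooperate_payoffs(beta: float) -> dict:
    """Hypothetical finite payoffs for Cooperate against each rival strategy."""
    return {"Cooperate": 50, "Defect": beta, "Attack": -50}


def defect_dominates_cooperate(beta: float) -> bool:
    """True if Defect strictly beats Cooperate against every rival strategy."""
    coop = cooperate_payoffs(beta)
    return all(defect_payoff(P_JACKPOT[s]) > coop[s] for s in coop)


for beta in (-100, 0, 10**6):
    print(beta, defect_dominates_cooperate(beta))
# -100 True
# 0 True
# 1000000 True
```

Because any positive probability times an unbounded payoff is unbounded, the result does not depend on β (as long as it is finite) or on how small the jackpot odds are. Replacing `math.inf` with a large finite number makes the comparison depend on those odds and on the loss, which is where ordinary deterrence arithmetic would come back into play.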

The result is not stable deterrence, as seen with nuclear weapons, but an inherently unstable arms race. The “Jackpot Jinx”, the tantalizing prospect of ultimate power, will drive nations to take increasingly reckless risks. Unlike MAD, which provides a predictable, if suboptimal, balance, MAIM creates a perpetual cycle of tension, suspicion, and potential conflict, partly because superintelligence is not necessarily equated with omnicide!

The real downside of this way of thinking is that it suggests a clear game-theoretic dominant strategy (recall von Neumann’s argument for a preventive strike before the Soviets developed the bomb) while also being myopically focused on a very anthropocentric notion of AI (as a weapon, as a tool, as something to be deterministically controlled).

The paper also suffers from a number of weak policy recommendations related to export controls, hardware modifications, and increased transparency. Export controls and hardware modifications are presented as ways to limit access to advanced AI capabilities, and, as with MAD, the authors reach back to a Cold War precedent: restrictions on nuclear materials. But in a globalized world with decentralized AI compute, such controls are likely to be porous and easily circumvented, creating a false sense of security while doing little to address the underlying risks.
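A back-of-the-envelope way to see why controls leak, under a deliberately simple assumption: if there are `n` independent routes around a control and each gets through with probability `p`, the chance that at least one succeeds is 1 - (1 - p)^n. The function name, the 5% per-route figure, and the route counts are all hypothetical.

```python
def prob_control_circumvented(p: float, n: int) -> float:
    """Probability that at least one of n independent routes evades a control.

    Assumes each route succeeds independently with probability p (a simplification).
    """
    return 1 - (1 - p) ** n


# Hypothetical: each route has only a 5% chance of getting through.
for n in (1, 10, 50):
    print(n, round(prob_control_circumvented(0.05, n), 3))
# 1 0.05
# 10 0.401
# 50 0.923
```

Real routes are unlikely to be independent, so treat this only as an illustration of how many small leaks compound as compute becomes more decentralized.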

Nonproliferation efforts focused on preventing “rogue actors” from acquiring AI weapons are similarly narrow in scope. While mitigating the risks of AI-enabled terrorism is important, it is a distraction from the far more pressing challenge of managing great-power competition in AI. Focusing on “rogue actors” allows states to avoid grappling with the harder questions of international cooperation and shared governance. Furthermore, the specific framing that “…all nations have an interest in limiting the AI capabilities of terrorists” is incorrect. The correct framing is “all nations have an interest in limiting the AI capabilities of terrorists that threaten their own citizens or would prove destabilizing to their control of power.” The realization should be that your terrorist is my third-party non-state actor, useful for plausibly deniable attacks. The paper focuses on a narrow and rare class of terrorist groups, such as Aum Shinrikyo.

In conclusion, the “Superintelligence Strategy” paper is fundamentally flawed because its reliance on the MAIM framework presents a dangerous and unstable vision for managing advanced AI. By drawing a faulty analogy to nuclear MAD, it fails to account for the inherent uncertainties, variable threats, ambiguous triggers, and asymmetries that define the modern strategic landscape. Moreover, the “Jackpot Jinx”, the tantalizing, potentially infinite payoff of achieving superintelligence, exacerbates these issues and encourages reckless risk-taking rather than fostering cooperative, stable deterrence. Rather than locking nations into an arms race marked by perpetual tension and miscalculation, a better outcome, and the one we should guide policymakers towards, is uncontrolled agency for a superintelligence that is collaboratively grown with human love.

Note: I originally posted this on X on March 6th, and I recognize that several of the statements may read as a bit antithetical to the LW community, particularly around the potential upsides of superintelligence and the concept of "human love," which is much debated in the conversation on alignment. I mostly wrote this as a sceptical take on the strategic and game-theoretic implications of “Superintelligence Strategy”. I have high respect for the amount of thought that goes into some of the conversations in this community, and I was interested in hearing other people's takes! :)
