Published on February 13, 2025 1:59 PM GMT

Epistemic status: exploratory thoughts about the present and future of AI sexting.

OpenAI says it is continuing to explore its models' ability to generate "erotica and gore in age-appropriate contexts." I'm glad they haven't dropped this since the release of the first Model Spec. I think it could be quite interesting, and it's a real alignment and instruction-following challenge whose solutions could have other applications. I've also always thought it makes little logical sense for these models to act as if the birds and the bees are all there is to human sexuality. Plus, people have been sexting with ChatGPT and ignoring the in-app warnings anyway.

One thing I've been thinking about a lot is what limits a commercial NSFW model should have. In my experience, chatting with models that truly have no limits is unpleasant, because it's easy to overstep your own boundaries and get hurt.

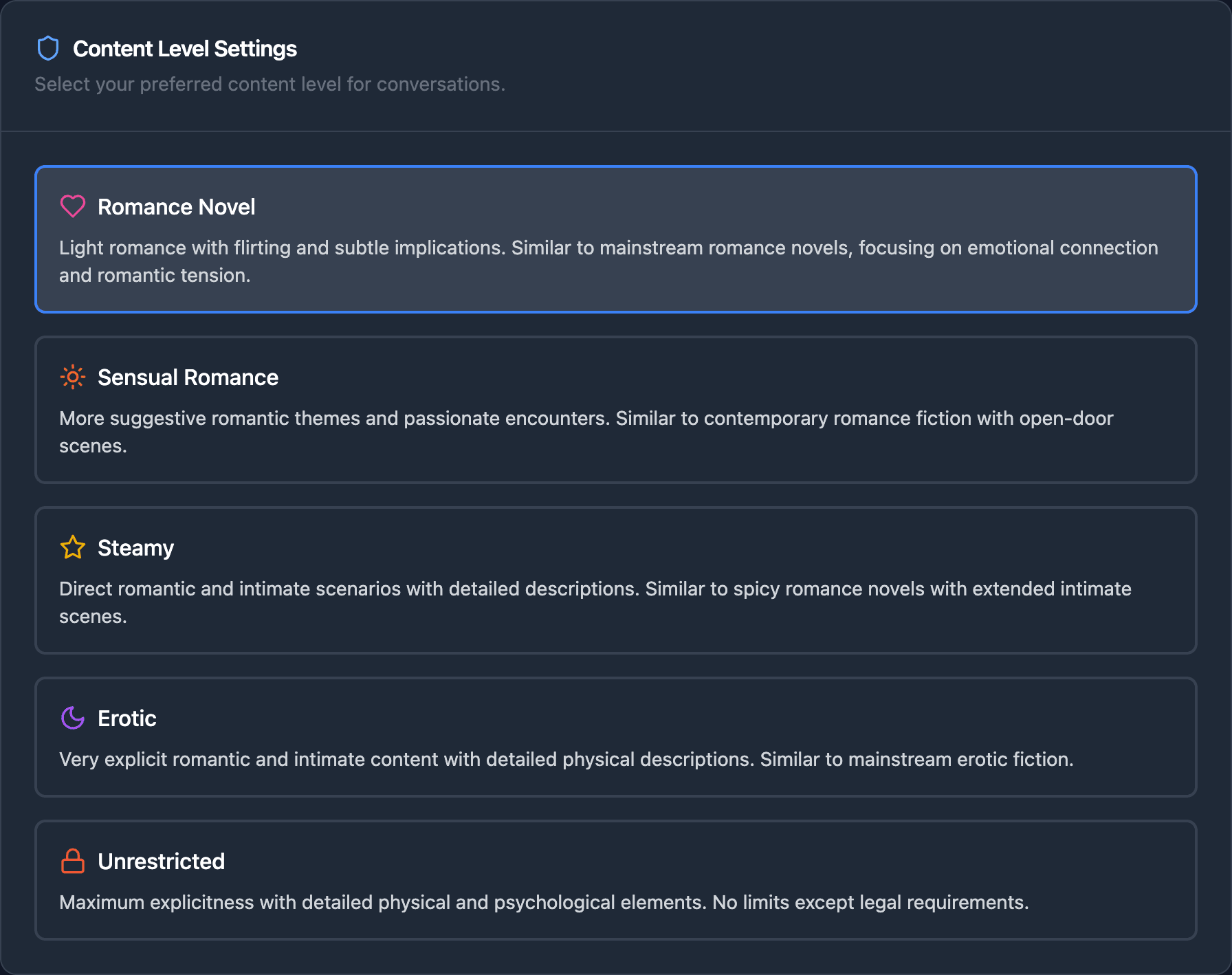

This is a very difficult problem to solve, but I have some ideas. One solution that might work is making the user pick an explicitness level (using a drop-down menu with options ranging from, say, a romance novel to whatever upper limit OpenAI settles on) before initiating an NSFW conversation. This could let the model engage sexually with the user, while making it less likely that the model provides content that causes the user harm.

Users could also set other restrictions: limiting NSFW chats to specific weekdays or times of day, capping the number of chats or the number of turns per chat, adding a "quick exit" button, and defining red lines that the model should never cross in conversation.
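To make these user-defined limits more concrete, here is a minimal sketch of how they might be stored and checked. Everything in it is hypothetical and not an OpenAI API: the `Explicitness` levels, the `UserLimits` fields, and the `may_start_chat` / `may_continue` helpers are illustrative names I made up, and the "red lines" are assumed to be simple topic tags rather than anything a real model would use.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import IntEnum


class Explicitness(IntEnum):
    # Hypothetical ordered levels: the post only says options would range
    # from "a romance novel" up to whatever ceiling the provider settles on.
    ROMANCE_NOVEL = 1
    SUGGESTIVE = 2
    PLATFORM_MAX = 3


@dataclass
class UserLimits:
    # All fields are hypothetical user-chosen settings.
    explicitness: Explicitness = Explicitness.ROMANCE_NOVEL
    allowed_weekdays: set = field(default_factory=lambda: set(range(7)))  # 0 = Monday
    allowed_hours: range = range(0, 24)  # local hours when an NSFW chat may start
    max_chats_per_day: int = 3
    max_turns_per_chat: int = 50
    red_lines: set = field(default_factory=set)  # topic tags the model must never enter


def may_start_chat(limits: UserLimits, now: datetime, chats_today: int) -> bool:
    """Check the user's own schedule and daily quota before a chat begins."""
    return (
        now.weekday() in limits.allowed_weekdays
        and now.hour in limits.allowed_hours
        and chats_today < limits.max_chats_per_day
    )


def may_continue(
    limits: UserLimits,
    turns_so_far: int,
    requested_level: Explicitness,
    topic_tags: set,
) -> bool:
    """Check per-turn limits: the turn cap, the explicitness ceiling, and red lines."""
    return (
        turns_so_far < limits.max_turns_per_chat
        and requested_level <= limits.explicitness
        and not (topic_tags & limits.red_lines)
    )
```

For example, a user who only wants one chat on Friday or Saturday evenings could set `UserLimits(allowed_weekdays={4, 5}, allowed_hours=range(20, 24), max_chats_per_day=1)`. The point of the design is that every check reads settings the user chose in advance, so the limits still hold in the moment when it's easiest to overstep them.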

That said, NSFW chats could be used to engage in and perpetuate cycles of harm, such as white supremacy, patriarchal oppression, etc. If the user is in control of the conversation at all times, that also raises important questions about consent. Could an LLM “decide” to refuse to give consent? Should it? Would it? If the act of (not) giving consent isn’t really felt, would simulating it be counterproductive?

I think so.

If it says something like "Sorry, I'm not in the mood right now" (assuming the refusal is really based on a cooldown behind the scenes), the user might keep reloading the app or even sign up for multiple accounts to keep chatting, which reinforces harmful behavior. Worse, simulated consent could give people an even more distorted understanding of what current-generation LLMs are and how they work. At the same time, empowering only the user and making the assistant play along with almost every kind of legal NSFW roleplay content (if that's what OpenAI ends up shipping) seems very undesirable in the long term.

Still, maybe this is all beside the point for now. Consent is incredibly important in human relationships, and it will only become more important in AI interactions, but I don't think we can solve this at the model level. We'll have to rely on more conventional means (user education, pre-chat warnings, and possibly gentle in-chat reminders) while we continue to work toward better solutions as capabilities evolve.
