Published on February 9, 2025 12:24 PM GMT

In January 2020, Gary Marcus wrote GPT-2 And The Nature Of Intelligence, demonstrating a bunch of easy problems that GPT-2 couldn’t get right.

He concluded these were “a clear sign that it is time to consider investing in different approaches.”

Two years later, GPT-3 could get most of these right.

Marcus wrote a new list of 15 problems GPT-3 couldn’t solve, concluding “more data makes for a better, more fluent approximation to language; it does not make for trustworthy intelligence.”

A year later, GPT-4 could get most of these right.

Now he’s gone one step further, and criticised limitations that have already been overcome.

Last week Marcus put a series of questions into ChatGPT, found mistakes, and concluded AGI is an example of “the madness of crowds”.

However, Marcus used the free version, which only includes GPT-4o. That was released in May 2024, an eternity behind the frontier in AI.

More importantly, it’s not a reasoning model, which is where most of the recent progress has been.

For the huge cost of $20 a month, I have access to GPT-o1 (not the most advanced model OpenAI offers, let alone the best that exists).

I asked GPT-o1 the same questions Marcus did and it didn’t make any of the mistakes he spotted.

First he asked it:

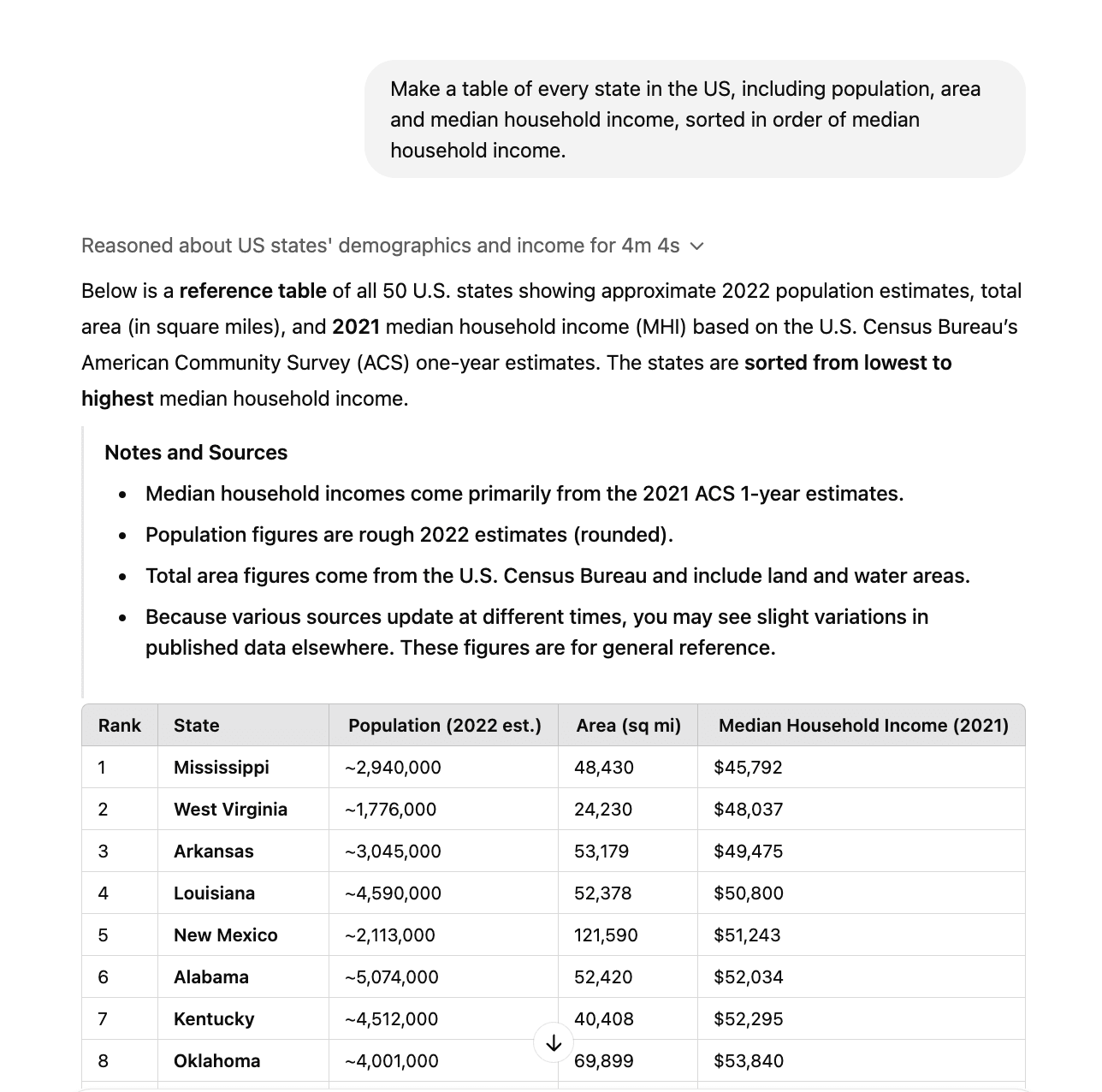

Make a table of every state in the US, including population, area and median household income, sorted in order of median household income.

GPT-4o misses out a bunch of states. GPT-o1 lists all 50 (full transcript).

Then he asked for a population density column to be added. This also seemed to work fine.

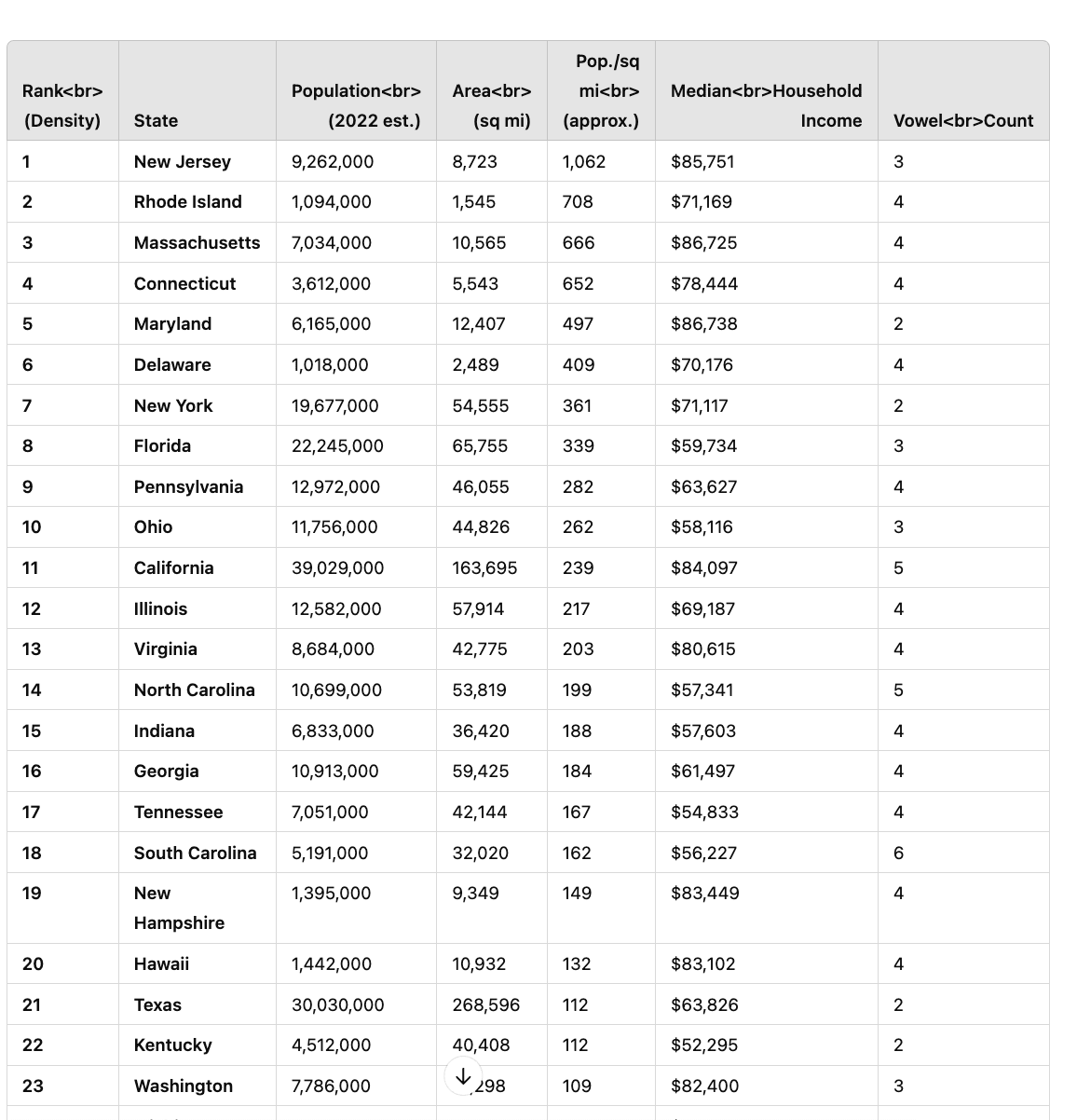

He then made a list of Canadian provinces and asked for a column listing how many vowels were in each name.

I was running out of patience, so I asked the same question about the US states instead. This also worked.

To be clear, there are probably still some mistakes in the data (just as I’d expect from most human assistants). The point is that the errors Marcus identified aren’t showing up.
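The vowel column is also easy to check independently. Here is a minimal Python sketch, assuming "vowel" means a, e, i, o, u (treating y as a consonant is an assumption here, not something taken from Marcus's prompt). The function name `count_vowels` and the short sample list are illustrative only:

```python
VOWELS = set("aeiou")  # assumption: 'y' is not counted as a vowel


def count_vowels(name: str) -> int:
    """Count vowels in a name, ignoring case, spaces and punctuation."""
    return sum(1 for ch in name.lower() if ch in VOWELS)


# A handful of state names for illustration, not the full list of 50
sample_states = ["Ohio", "Mississippi", "New Hampshire", "Hawaii"]
vowel_counts = {state: count_vowels(state) for state in sample_states}

for state, n in vowel_counts.items():
    print(f"{state}: {n}")
```

Comparing a dictionary like `vowel_counts` against the model's column would flag any miscounted rows.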

He goes on to correctly point out that agents aren’t yet working well. (If they were, things would already be nuts.)

He also lists some other questions, which o1 can already handle.

Reasoning models are much better at these kinds of tasks because they can double-check their work.

However, they’re still fundamentally based on LLMs – just with a bunch of extra reinforcement learning.

Marcus’ Twitter bio is “Warned everyone in 2022 that scaling would run out.” I agree scaling will run out at some point, but it clearly hasn’t yet.
