Disclaimer: The details in this post have been derived from Google Blogs and Research Papers. All credit for the technical details goes to the Google engineering team. The links to the original articles are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Cloud Spanner is a revolutionary database system developed by Google that uniquely combines the strengths of traditional relational databases with the scalability typically associated with NoSQL systems.

Designed to handle massive workloads across multiple regions, Cloud Spanner provides a globally distributed, strongly consistent, and highly available platform for data management. Its standout feature is its ability to offer SQL-based queries and relational database structures while achieving horizontal scalability. This makes it suitable for modern, high-demand applications.

Here are some features of Cloud Spanner:

A multi-version database that uses synchronous replication to ensure data durability and availability even in the case of regional failures.

Use of TrueTime, a technology that integrates GPS and atomic clocks to provide a globally consistent timeline.

Spanner simplifies data management by offering a familiar SQL interface for queries while handling the complexities of distributed data processing under the hood.

Spanner partitions its data into contiguous key ranges, called splits, which are dynamically resharded to balance the load and optimize performance.

Overall, Google Spanner is a powerful solution for enterprises that need a database capable of handling global-scale operations while maintaining the robustness and reliability of traditional relational systems.

In this article, we’ll learn about Google Cloud Spanner's architecture and how it supports the various capabilities that make it a compelling database option.

The Architecture of Cloud Spanner

The architecture of Cloud Spanner is designed to support its role as a globally distributed, highly consistent, and scalable database.

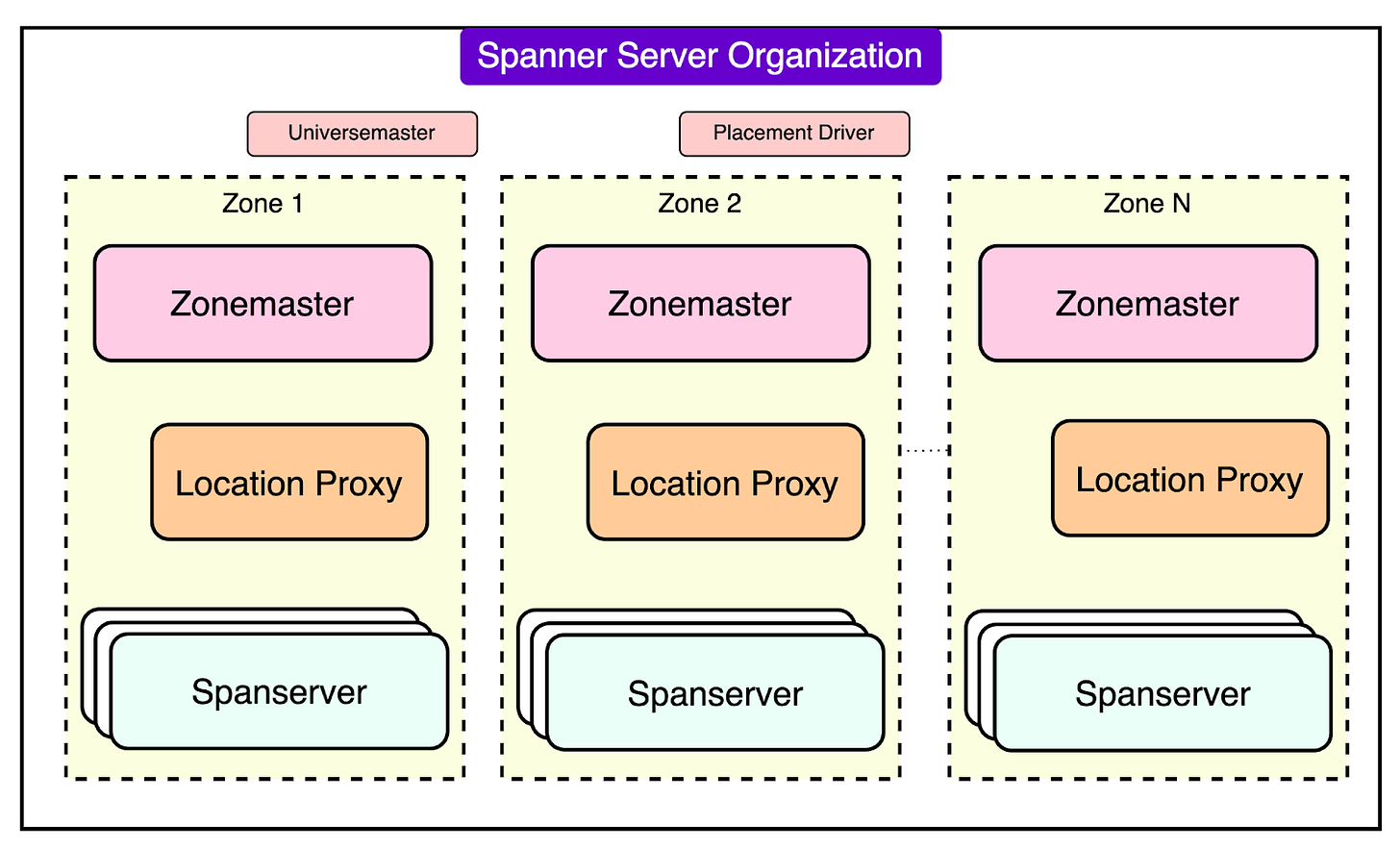

At the highest level, Spanner is organized into what is called a universe, a logical entity that spans multiple physical or logical locations known as zones.

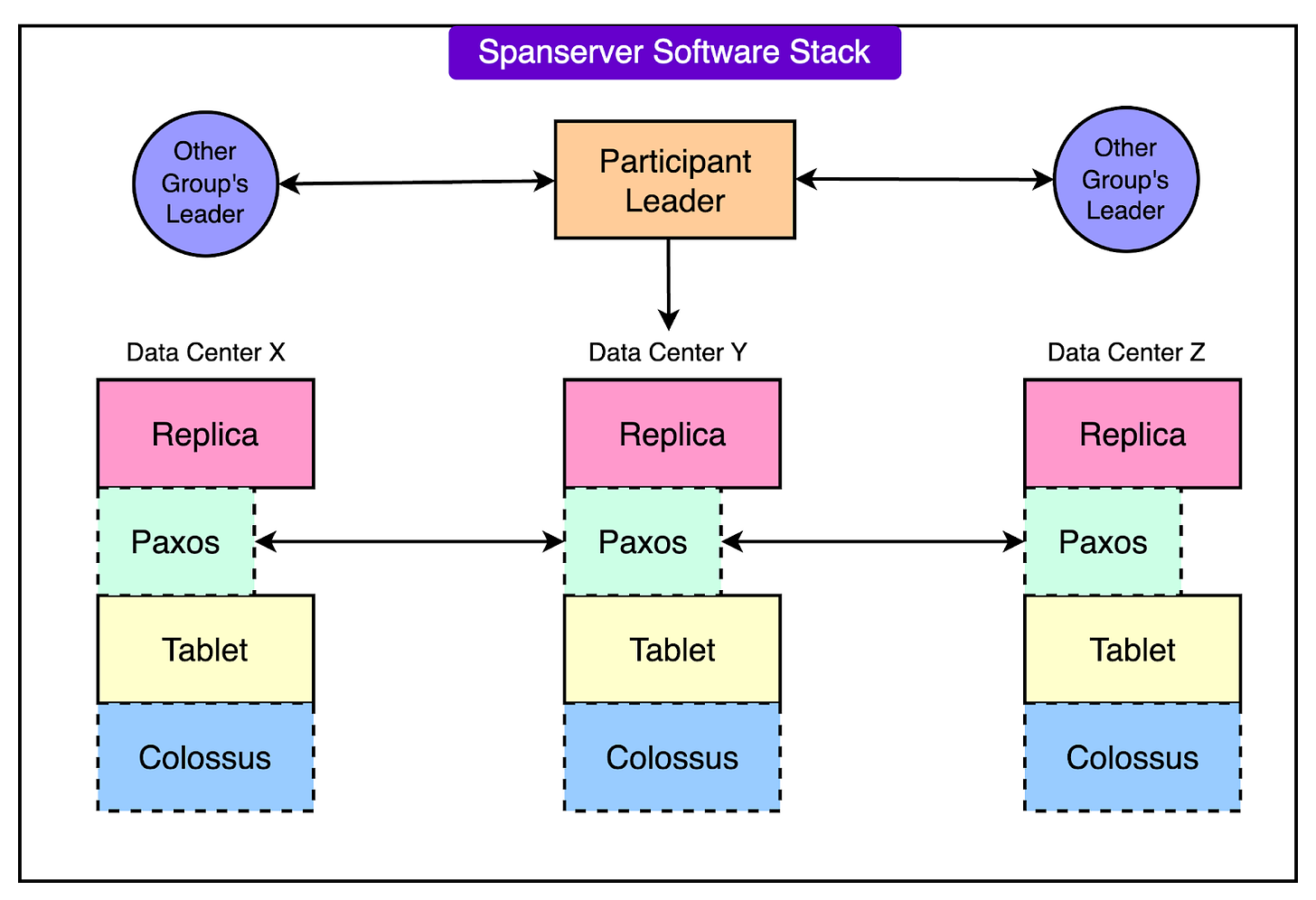

Each zone operates semi-independently and contains spanservers. These are specialized servers that handle data storage and transactional operations. Spanservers are built on concepts from Bigtable, Google’s earlier distributed storage system, and include enhancements to support complex transactional needs and multi-versioned data.

Some of the key architectural components of Spanner are as follows:

1 - Data Sharding and Tablets

Cloud Spanner manages data by breaking it into smaller chunks called tablets, distributed across multiple spanservers.

Each tablet holds data as key-value pairs, with a timestamp for versioning. This structure allows Spanner to act as a multi-version database where old versions of data can be accessed if needed.

Tablets are stored on Colossus, Google’s distributed file system. Colossus provides fault-tolerant and high-performance storage, enabling Spanner to scale storage independently of compute resources.
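To make the multi-version idea concrete, here is a minimal in-memory sketch of a key-value map that keeps a timestamp with every version. The class name `MultiVersionStore`, its methods, and the integer timestamps are assumptions made for illustration; this is not Spanner's actual tablet format.

```python
import bisect


class MultiVersionStore:
    """Toy multi-version map: every key keeps a list of timestamped versions."""

    def __init__(self):
        # key -> (sorted list of timestamps, parallel list of values)
        self._versions = {}

    def write(self, key, timestamp, value):
        timestamps, values = self._versions.setdefault(key, ([], []))
        # Insert the new version at its sorted position by timestamp.
        idx = bisect.bisect_right(timestamps, timestamp)
        timestamps.insert(idx, timestamp)
        values.insert(idx, value)

    def read(self, key, at_timestamp):
        """Return the newest value written at or before at_timestamp, else None."""
        timestamps, values = self._versions.get(key, ([], []))
        idx = bisect.bisect_right(timestamps, at_timestamp)
        if idx == 0:
            return None
        return values[idx - 1]
```

Because old versions are never overwritten, a read at an earlier timestamp still sees the value that was current at that time.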

2 - Dynamic Partitioning

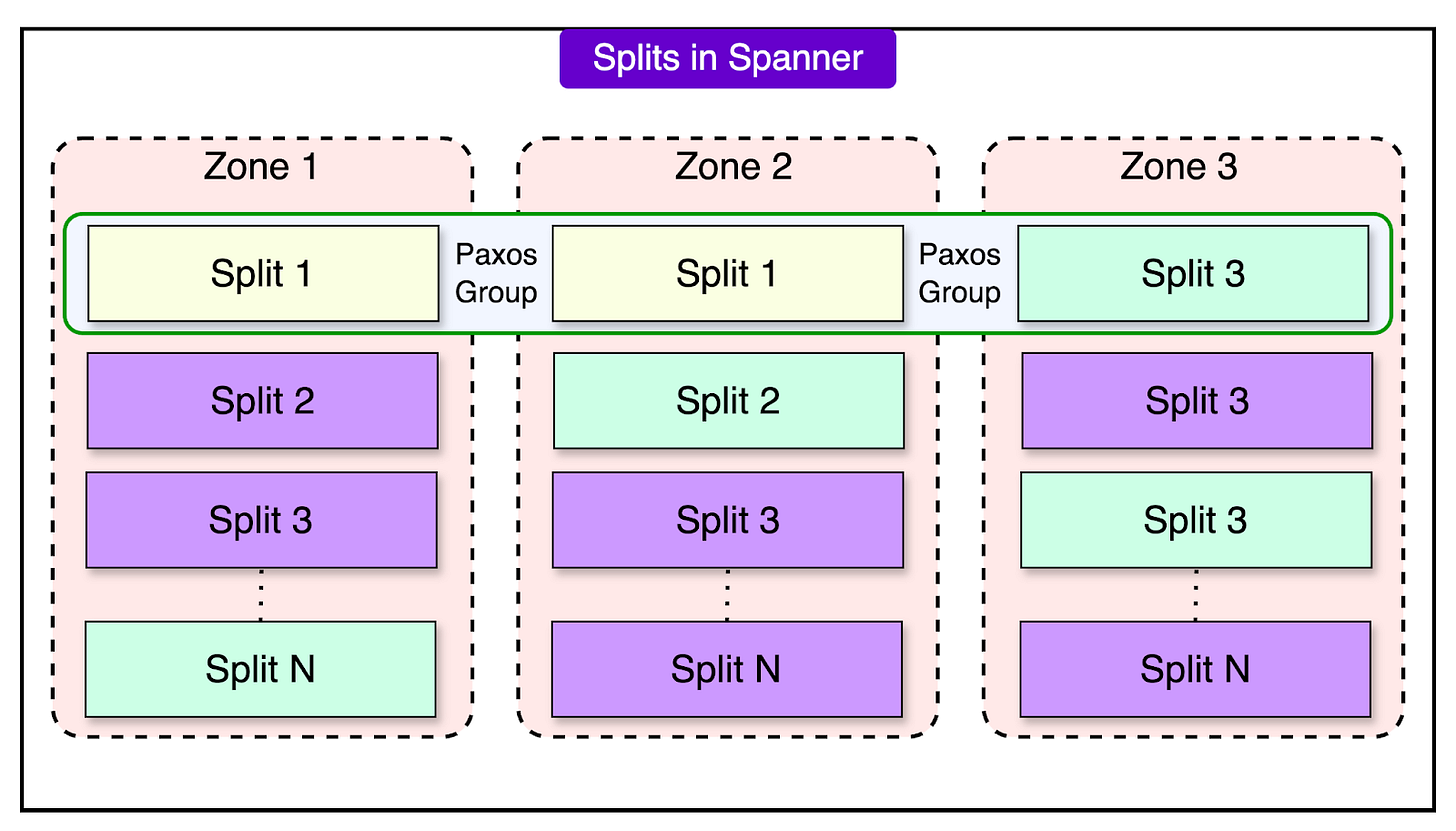

Data within tables is divided into splits, which are ranges of contiguous keys. These splits can be dynamically adjusted based on workload or size.

When a split grows too large or experiences high traffic, it is automatically divided into smaller splits and redistributed across spanservers. This process, known as dynamic sharding, ensures even load distribution and optimal performance.

Each split is replicated across zones for redundancy and fault tolerance.
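The size-based part of this behavior can be sketched as follows. The `Split` class, the `maybe_divide` function, and the row-count threshold are hypothetical; the real system also considers traffic and decides where to place the resulting splits, which this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class Split:
    start_key: int  # inclusive lower bound of the key range
    end_key: int    # exclusive upper bound of the key range
    rows: dict = field(default_factory=dict)


def maybe_divide(split, max_rows):
    """Return [split] unchanged, or two halves if it holds more than max_rows rows.

    Assumes max_rows >= 1 so that both halves are non-empty.
    """
    if len(split.rows) <= max_rows:
        return [split]
    keys = sorted(split.rows)
    mid_key = keys[len(keys) // 2]  # the median key becomes the new boundary
    left = Split(split.start_key, mid_key,
                 {k: v for k, v in split.rows.items() if k < mid_key})
    right = Split(mid_key, split.end_key,
                  {k: v for k, v in split.rows.items() if k >= mid_key})
    return [left, right]
```

The two resulting splits still cover one contiguous key range between them, so each could then be handed to a different spanserver.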

3 - Paxos-Based Replication

Spanner uses the Paxos consensus algorithm to manage replication across multiple zones. Each split has multiple replicas, and Paxos ensures that these replicas remain consistent.

Among these replicas, one is chosen as the leader, responsible for managing all write transactions for that split. The leader coordinates updates to ensure they are applied in a consistent order.

If the leader fails, Paxos elects a new leader, ensuring continued availability without manual intervention. The replicas not serving as leaders can handle read operations, reducing the workload on the leader and improving scalability.

4 - Multi-Zone Deployments

Spanner instances span multiple zones within a region, with replicas distributed across these zones. This setup enhances availability because even if one zone fails, other zones can continue serving requests.

For global deployments, data can be replicated across continents, providing low-latency access to users worldwide.

5 - Colossus Distributed File System

All data is stored on Colossus, which is designed for distributed and replicated file storage. Colossus ensures high durability by replicating data across physical machines, making it resilient to hardware failures.

The file system is decoupled from the compute resources, allowing the database to scale independently and perform efficiently.

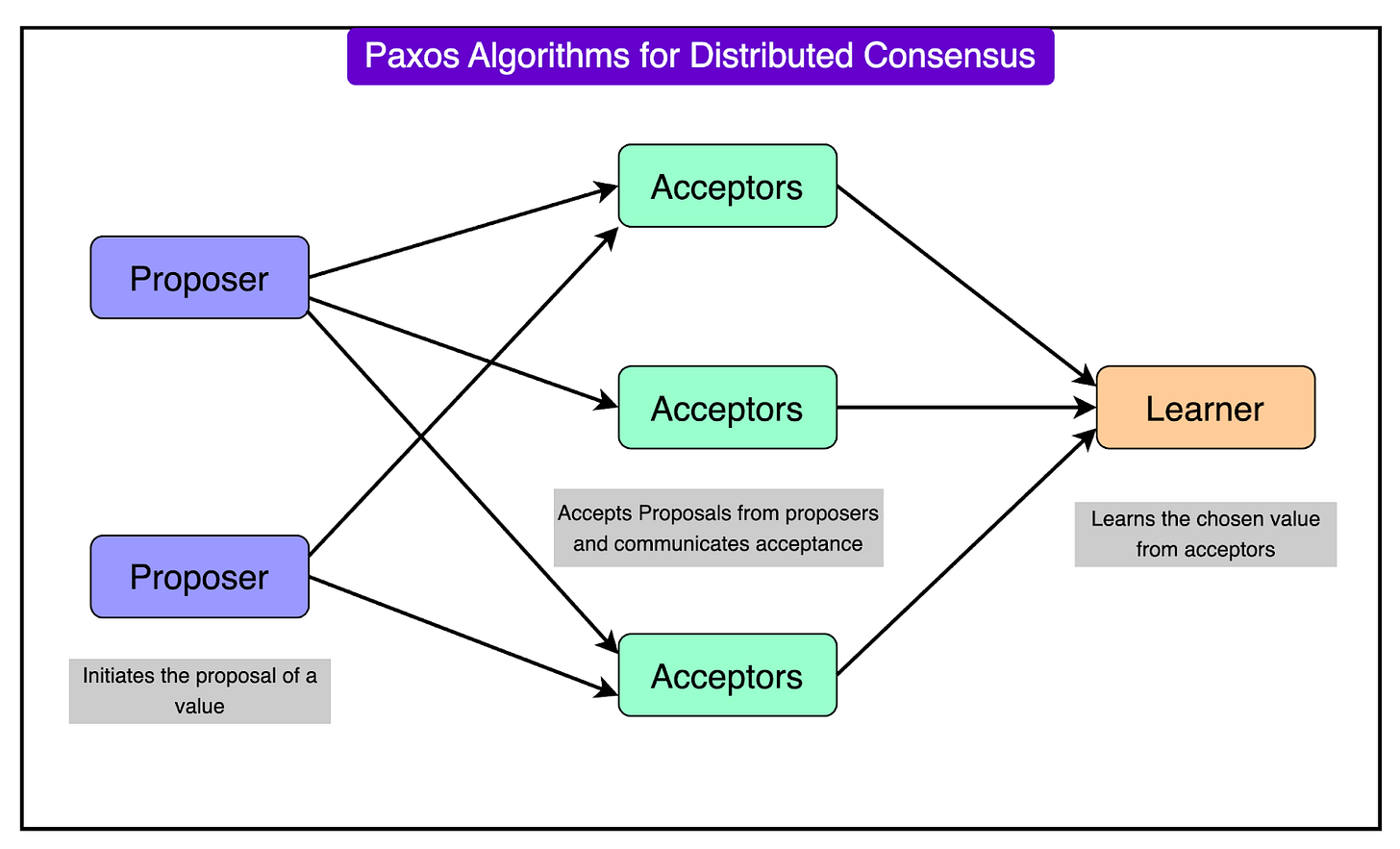

Paxos Mechanism in Spanner

The Paxos Mechanism is a critical component of Spanner’s architecture.

It operates on the principle of distributed consensus, where a group of replicas (known as a Paxos group) agrees on a single value, such as a transaction's commit or the leader responsible for handling updates.

The leadership assignment works as follows:

Each split of data (a contiguous range of keys) is associated with a Paxos group that spans multiple zones.

One replica in the Paxos group is designated as the leader. The leader handles all write operations for its split, ensuring that updates are coordinated.

Other replicas serve as followers, which can process read requests to offload work from the leader and improve performance.

The key responsibilities of the Paxos Leader are as follows:

Handling Writes: The leader receives write requests and ensures they are replicated to a majority of the Paxos group before being committed. This replication process ensures the durability and consistency of the data, even if some replicas fail.

Maintaining Order: The leader assigns timestamps to transactions using TrueTime for a globally consistent ordering of writes.

Communication with Followers: The leader coordinates updates by broadcasting proposals to the followers and collecting acknowledgments.
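The majority rule behind these responsibilities can be illustrated with a short sketch. It deliberately omits most of Paxos (proposal numbers, leader leases, recovery) and only shows the acknowledgment count; the `Replica` class and `replicate_write` function are hypothetical names.

```python
class Replica:
    def __init__(self, name, available=True):
        self.name = name
        self.available = available
        self.log = []

    def accept(self, entry):
        # A failed replica cannot store or acknowledge the entry.
        if not self.available:
            return False
        self.log.append(entry)
        return True


def replicate_write(leader, followers, entry):
    """Leader appends locally, then collects acknowledgments from followers.

    Returns True only when a strict majority of the group has the entry.
    """
    group_size = 1 + len(followers)
    acks = 1 if leader.accept(entry) else 0
    acks += sum(1 for follower in followers if follower.accept(entry))
    return acks > group_size // 2
```

With three replicas, one failed follower still leaves two acknowledgments out of three, so the write can commit; with two failed followers it cannot.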

Failures are inevitable in distributed systems, but Paxos ensures that Spanner remains available and consistent despite such issues.

If the current leader fails due to a machine or zone outage, the Paxos group detects the failure and elects a new leader. The new leader is chosen from the remaining replicas in the Paxos group. This process avoids downtime and ensures that the data remains accessible.

Read and Write Transactions in Spanner

Cloud Spanner manages transactions with a robust approach that ensures strong consistency, reliability, and high performance.

Let’s look at how write and read transactions work in more detail:

1 - Write Transactions

Write transactions in Cloud Spanner are designed to guarantee atomicity (all-or-nothing execution) and consistency (all replicas agree on the data). These transactions are managed by Paxos leaders coordinating the process to ensure data integrity even during failures.

Here are the steps involved in the process:

Acquiring Locks: Before making changes, the Paxos leader for a split acquires a write lock on the rows being modified. If another transaction holds a conflicting lock, the current transaction will wait until the lock is released.

Assigning Timestamps via TrueTime: The leader uses TrueTime to assign the transaction a globally consistent commit timestamp. This timestamp guarantees that the transaction’s changes are applied in the correct order relative to other transactions. It is greater than the timestamp of any previously committed transaction, ensuring temporal consistency.

Durability with Majority Replication: Once the leader has locked the data and assigned a timestamp, it sends the transaction details to a majority of replicas in its Paxos group. The transaction is only considered committed after the majority acknowledges it, guaranteeing the data is durable even if some replicas fail.

Commit Wait: The leader waits for a brief period, until TrueTime guarantees that the commit timestamp is in the past on every server. This step makes sure that once the transaction is committed, all subsequent reads will reflect its effects.
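The commit wait step can be sketched in a few lines. Here `tt_now` is a hypothetical stand-in for TrueTime built on the local monotonic clock, and `EPSILON_SECONDS` is an assumed uncertainty bound chosen only for illustration.

```python
import time

EPSILON_SECONDS = 0.005  # assumed clock uncertainty, for illustration only


def tt_now():
    """Hypothetical TrueTime stand-in: returns (earliest, latest) around the local clock."""
    t = time.monotonic()
    return (t - EPSILON_SECONDS, t + EPSILON_SECONDS)


def commit_with_wait():
    # Choose the commit timestamp at the upper edge of the current interval.
    _, commit_ts = tt_now()
    # Commit wait: block until even the earliest possible "now" is past commit_ts.
    while tt_now()[0] <= commit_ts:
        time.sleep(0.001)
    return commit_ts
```

In this sketch the wait lasts roughly twice the uncertainty bound, which is why keeping that bound small matters for write latency.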

Spanner handles single-split writes and multi-split writes differently.

For example, in a single-split write, suppose a user wants to add a row with ID 7 and value "Seven" to a table.

The Spanner API identifies the split containing ID 7 and sends the request to its Paxos leader.

The leader acquires a lock on ID 7, assigns a timestamp via TrueTime, and replicates the changes to a majority of replicas.

After ensuring the timestamp has passed, the transaction is committed, and all replicas apply the change.

However, for a multi-split write, if a transaction modifies rows in multiple splits (for example, writing to rows 2000, 3000, and 4000), Spanner uses a two-phase commit protocol:

Each split becomes a participant in the transaction, and one split’s leader acts as the coordinator.

The coordinator ensures all participants acquire locks and agree to commit the transaction before proceeding.

Once all participants confirm, the coordinator commits the transaction and informs the others to apply the changes.
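A minimal sketch of the two-phase commit flow described above, assuming each split is modeled as a `Participant` object. The class, its `can_lock` flag, and the state names are hypothetical, and real participants would also replicate each step through their own Paxos group.

```python
class Participant:
    def __init__(self, name, can_lock=True):
        self.name = name
        self.can_lock = can_lock  # whether this split can acquire its locks
        self.state = "idle"

    def prepare(self):
        # Phase 1: try to acquire locks and vote on the outcome.
        self.state = "prepared" if self.can_lock else "aborted"
        return self.can_lock

    def commit(self):
        self.state = "committed"

    def abort(self):
        self.state = "aborted"


def two_phase_commit(participants):
    """Coordinator logic: commit only if every participant votes yes."""
    votes = [p.prepare() for p in participants]
    if all(votes):
        for p in participants:
            p.commit()  # Phase 2: tell everyone to apply the changes
        return True
    for p in participants:
        p.abort()       # any "no" vote aborts the whole transaction
    return False
```

For the rows 2000, 3000, and 4000 example, the three splits would each be a participant, and a single failed lock acquisition would abort the write on all of them.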

2 - Read Transactions

Read transactions in Spanner are optimized for speed and scalability. They provide strong consistency without requiring locks, which allows reads to be processed efficiently even under high workloads.

The different types of reads are as follows:

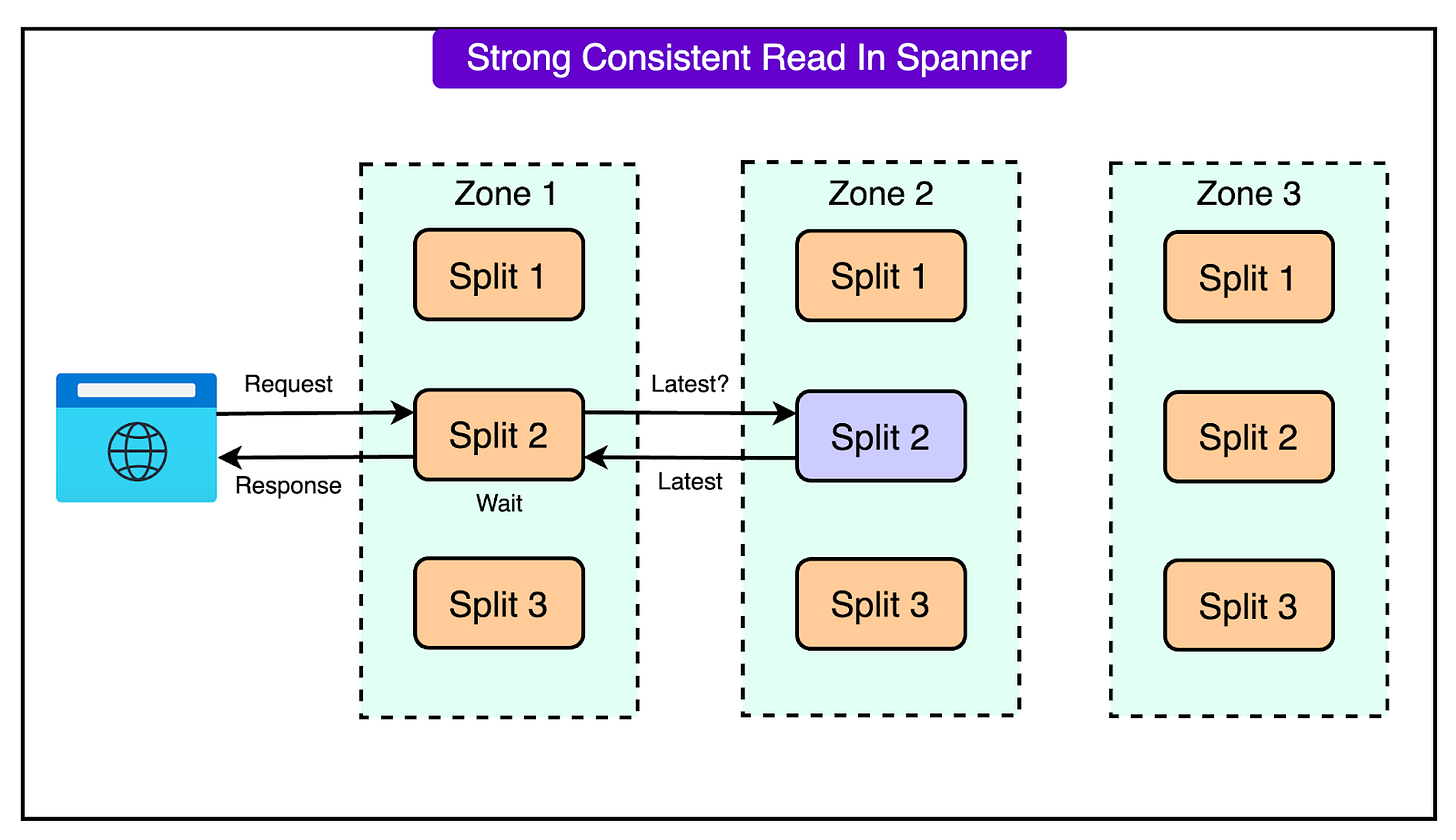

Strong Reads: These reads always return the latest committed data. The system ensures consistency by verifying the latest timestamp of the data using TrueTime. For example, if a client requests a value for row ID 1000, the system routes the request to a replica, which checks with the leader to confirm the data is up-to-date before returning the result.

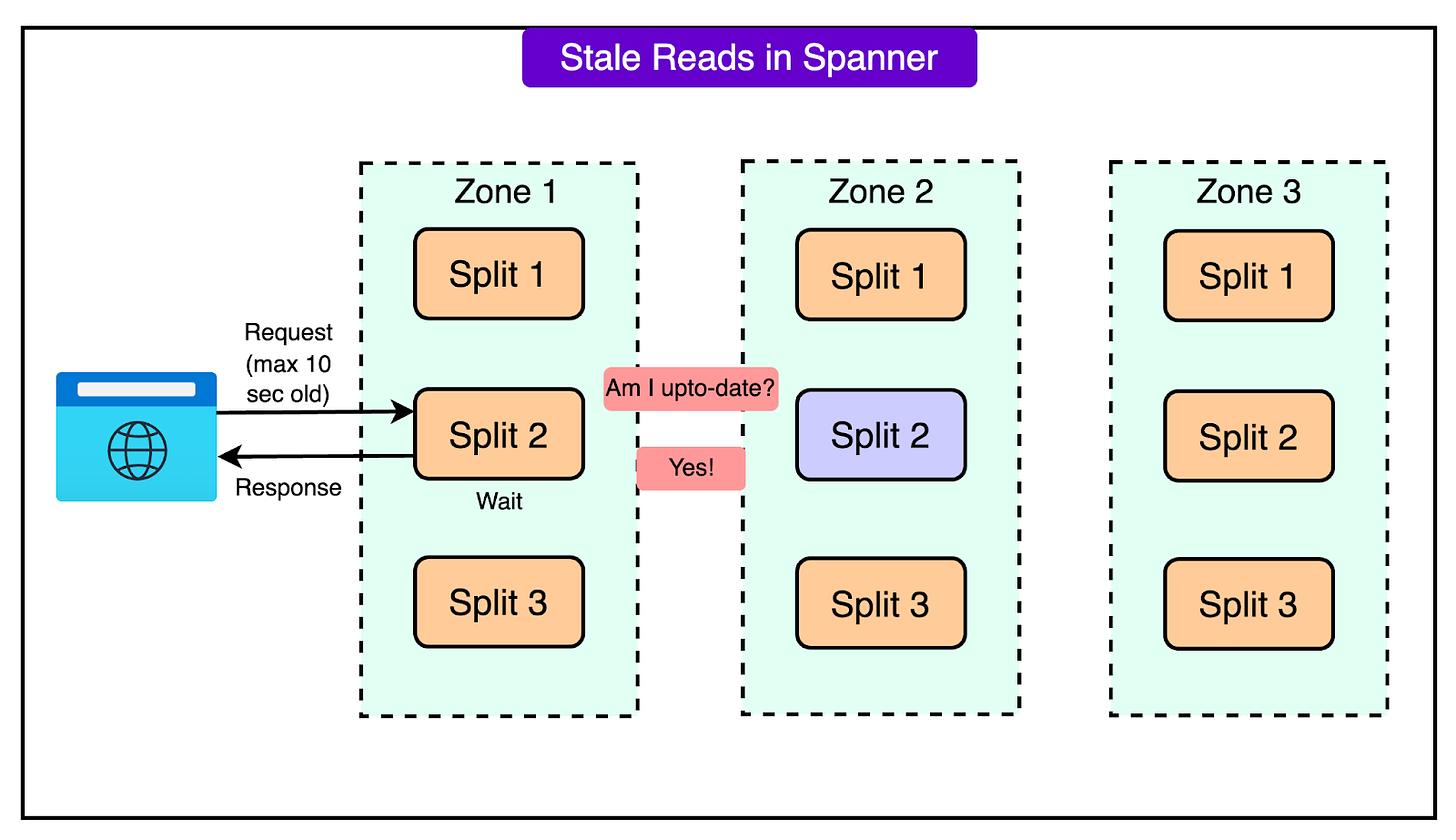

Stale Reads: These reads allow a small degree of staleness (for example, data up to 10 seconds old) in exchange for lower latency. For instance, a client requesting a slightly older value for row ID 1000 can get the data directly from a replica without waiting for confirmation from the leader, speeding up the process.

The first diagram above shows the strongly consistent read scenario, and the second shows the stale read scenario.
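The difference between the two read types comes down to which timestamp the read must satisfy. The sketch below assumes a replica tracks the timestamp up to which it has applied writes; the function names and the integer timestamps are hypothetical.

```python
def can_serve_locally(replica_applied_ts, read_ts):
    """A replica can answer a read at read_ts once it has applied all writes up to read_ts."""
    return replica_applied_ts >= read_ts


def strong_read_ts(leader_latest_commit_ts):
    # Strong read: the replica asks the leader for the latest commit timestamp
    # and must be caught up to it before answering.
    return leader_latest_commit_ts


def stale_read_ts(now_ts, max_staleness):
    # Stale read: the client accepts data as of (now - max_staleness),
    # so no round trip to the leader is needed.
    return now_ts - max_staleness
```

For example, a replica that has applied writes up to timestamp 95 cannot yet answer a strong read when the leader's latest commit is at 100, but it can answer a stale read at time 103 with a staleness of 10, because 95 is at or after 93.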

3 - Deadlock Prevention

Spanner avoids deadlocks (situations where two or more transactions wait for each other to release locks) by using the wound-wait algorithm. Here’s how it works:

If a younger transaction (started later) requests a lock held by an older transaction, it waits.

If an older transaction requests a lock held by a younger one, the younger transaction is wounded (aborted) to allow the older one to proceed.

This strategy ensures that transactions always make progress and deadlock cycles do not occur.
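The two rules above reduce to a single comparison of start timestamps. In this sketch the function name and the returned labels are hypothetical, and a smaller start timestamp means an older transaction.

```python
def resolve_lock_conflict(requester_start_ts, holder_start_ts):
    """Wound-wait decision when a requester wants a lock that a holder owns."""
    if requester_start_ts < holder_start_ts:
        # Older requester: the younger holder is wounded (aborted).
        return "wound_holder"
    # Younger requester: it waits for the older holder to finish.
    return "requester_waits"
```

Because a transaction only ever waits for an older one, a cycle of waiting transactions cannot form.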

4 - Reliability and Durability

Spanner’s design ensures that data remains consistent and available even during failures.

All writes are stored in Google’s Colossus distributed file system, which replicates data across multiple physical machines. Even if one machine or zone fails, the data can be recovered from other replicas.

TrueTime ensures that all transactions occur in a globally consistent order, even in a distributed environment. This guarantees that once a transaction is visible to one client, it is visible to all clients.

The TrueTime API

The TrueTime API is one of the key innovations in Cloud Spanner, enabling it to function as a globally distributed, strongly consistent database.

TrueTime solves one of the most challenging problems in distributed systems: providing a globally synchronized and consistent view of time across all nodes in a system, even those spread across multiple regions and data centers.

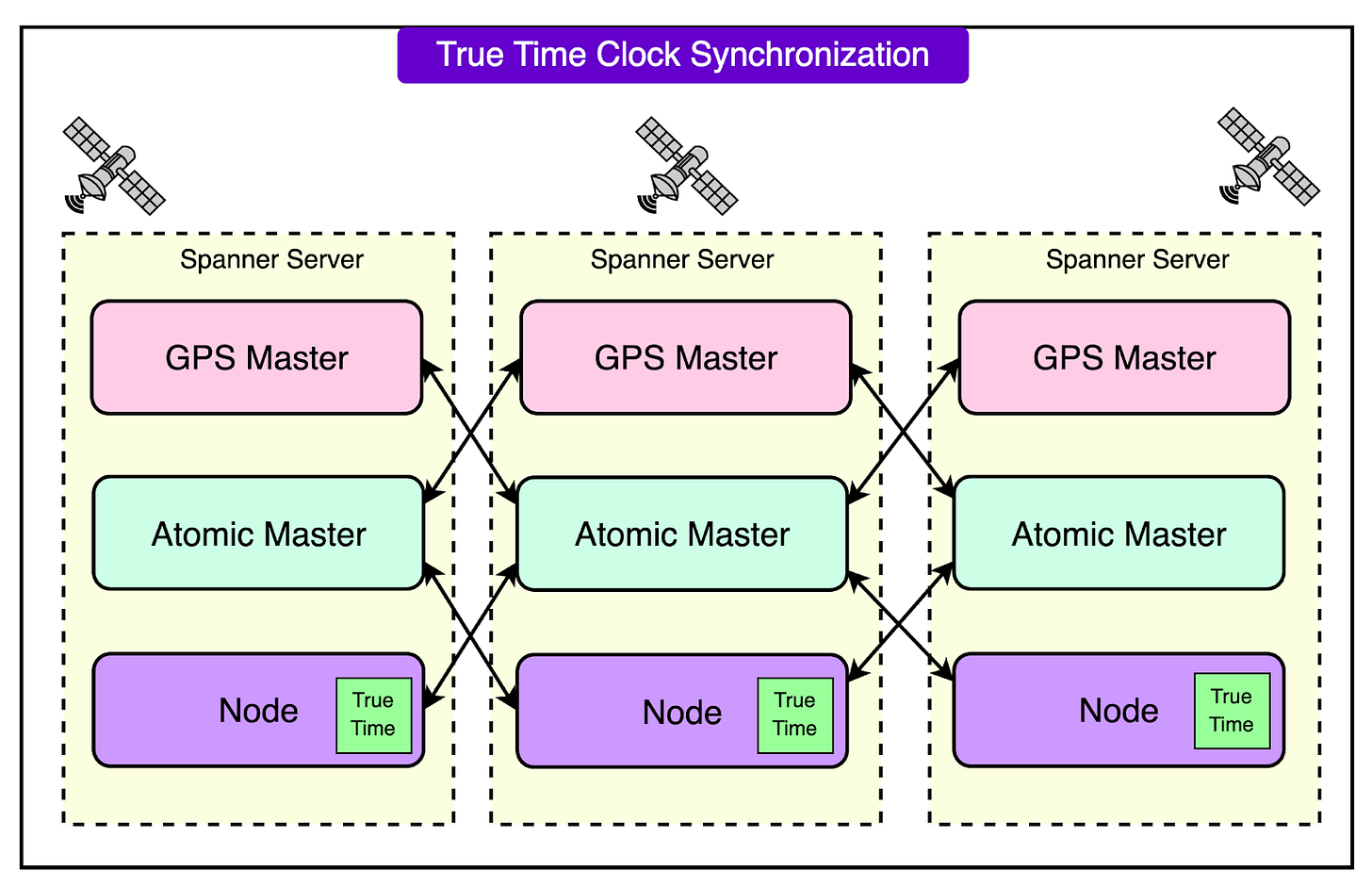

TrueTime is based on a combination of atomic clocks and GPS clocks, which work together to provide highly accurate and reliable time synchronization.

By using both atomic and GPS clocks, TrueTime mitigates the weaknesses of each system. For example:

If GPS signals are disrupted, atomic clocks ensure accurate timekeeping.

Conversely, GPS clocks help correct any long-term drift in atomic clocks.

Here’s how it works:

Atomic Clocks: These devices measure time based on the vibrations of atoms, providing highly precise timekeeping with minimal drift. Atomic clocks serve as a reliable backup when GPS signals are unavailable or inaccurate.

GPS Clocks: These clocks rely on signals from satellites to provide accurate time information. GPS clocks are useful because they offer a globally synchronized source of time. However, GPS systems can experience issues such as interference, antenna failures, or even spoofing attacks.

Time Representation and Uncertainty

TrueTime represents time as an interval instead of a single point, explicitly acknowledging the uncertainty inherent in distributed systems.

TTInterval: TrueTime provides a time range, [earliest, latest], which guarantees that the actual global time falls somewhere within this range. The width of the interval is determined by factors such as clock drift and network delays.

Error Bound and Synchronization: The uncertainty interval is kept small (typically under 10 milliseconds) through frequent synchronization. Each server in the system synchronizes its local clock with the time masters (atomic and GPS clocks) approximately every 30 seconds.
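An interval-based clock changes how timestamps are compared: a time is only "definitely past" once the whole interval lies beyond it. The sketch below models this with integer milliseconds; `TTInterval` mirrors the name used above, while `interval_from_clock`, `definitely_after`, and `definitely_before` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TTInterval:
    earliest: int  # milliseconds
    latest: int    # milliseconds


def interval_from_clock(local_time_ms, epsilon_ms):
    """Build the uncertainty interval around a local clock reading."""
    return TTInterval(local_time_ms - epsilon_ms, local_time_ms + epsilon_ms)


def definitely_after(interval, t_ms):
    """True only if t_ms has passed wherever true time lies in the interval."""
    return interval.earliest > t_ms


def definitely_before(interval, t_ms):
    """True only if t_ms has not yet arrived wherever true time lies in the interval."""
    return interval.latest < t_ms
```

When a timestamp falls inside the interval, neither check returns true, and the caller has to wait for the uncertainty to pass before drawing a conclusion.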

Key Features Enabled By TrueTime

TrueTime provides some important features that make it so useful:

Global External Consistency: TrueTime ensures that transactions are serialized in the same global order across all replicas. This is critical for maintaining consistency in a distributed database. For example, if one transaction commits before another transaction starts, TrueTime guarantees the timestamps reflect this order globally.

Lock-Free Transactions: TrueTime allows Spanner to perform lock-free read-only transactions. These transactions can access consistent snapshots of data without needing locks, improving scalability and performance.

Atomic Schema Updates: Schema changes, such as altering a table structure, are challenging in distributed systems because they often involve many servers. With TrueTime, schema updates are treated as transactions with a specific timestamp, ensuring that all servers apply the change consistently.

Historical Reads: TrueTime enables snapshot reads, where clients can specify a timestamp to read a consistent view of the database as it existed at that time. This is useful for audits or debugging.

Conclusion

Google Spanner stands as a major achievement in database engineering, blending the reliability and structure of traditional relational databases with the scalability and global availability often associated with NoSQL systems.

Its architecture, supported by the Paxos consensus mechanism and the TrueTime API, provides a solid foundation for handling distributed transactions, ensuring external consistency, and maintaining high performance at a global scale.

Ultimately, Google Spanner redefines what is possible in distributed database systems, setting a standard for scalability, reliability, and innovation.

References:
