Published on February 3, 2025 7:31 PM GMT

Note: This post summarizes my capstone project for the AI Alignment course by BlueDot Impact. You can learn more about their amazing courses here and consider applying!

Introduction

Recent research in weak-to-strong generalization (W2SG) has revealed a crucial insight: enhancing weak supervisors to train strong models relies more on the characteristics of the training data rather than on new algorithms. This article reviews the research conducted by Shin et al. (2024), who identified overlap density — a measurable data attribute that can predict and support successful W2SG. Their findings suggest we've been looking at the alignment problem through the wrong lens — instead of only focusing on model architectures, we should also be engineering datasets that maximize this critical density property. By analyzing their work and implementing their algorithms, I aim to provide researchers with tools to further investigate data-centric features that improve W2SG.

Background: W2SG's Data-Centric Foundation

Weak-to-Strong Context:

In the AI alignment paradigm first proposed by Burns et al. (2023), W2SG enables a weak model (e.g., GPT-2) to train a significantly stronger model (e.g., GPT-4) through carefully structured interactions. W2SG describes the transition from weak generalization, where a model performs well on “easy” patterns (i.e. patterns with clear, simple features or high-frequency occurrences in the training data), to strong generalization, where the model successfully handles “hard” patterns (low-frequency, high-complexity features). This becomes crucial when:

- Human oversight can't scale with AI capabilitiesWe need to bootstrap supervision for superintelligent systemsDeveloping failsafes against mesa-optimizers

Current ML models often excel at weak generalization, but their capacity for strong generalization remains inconsistent and poorly understood. This gap has major implications for AI alignment: systems that generalize weakly may fail in unanticipated ways under novel conditions, leading to dangerous behaviors.

Key Definitions:

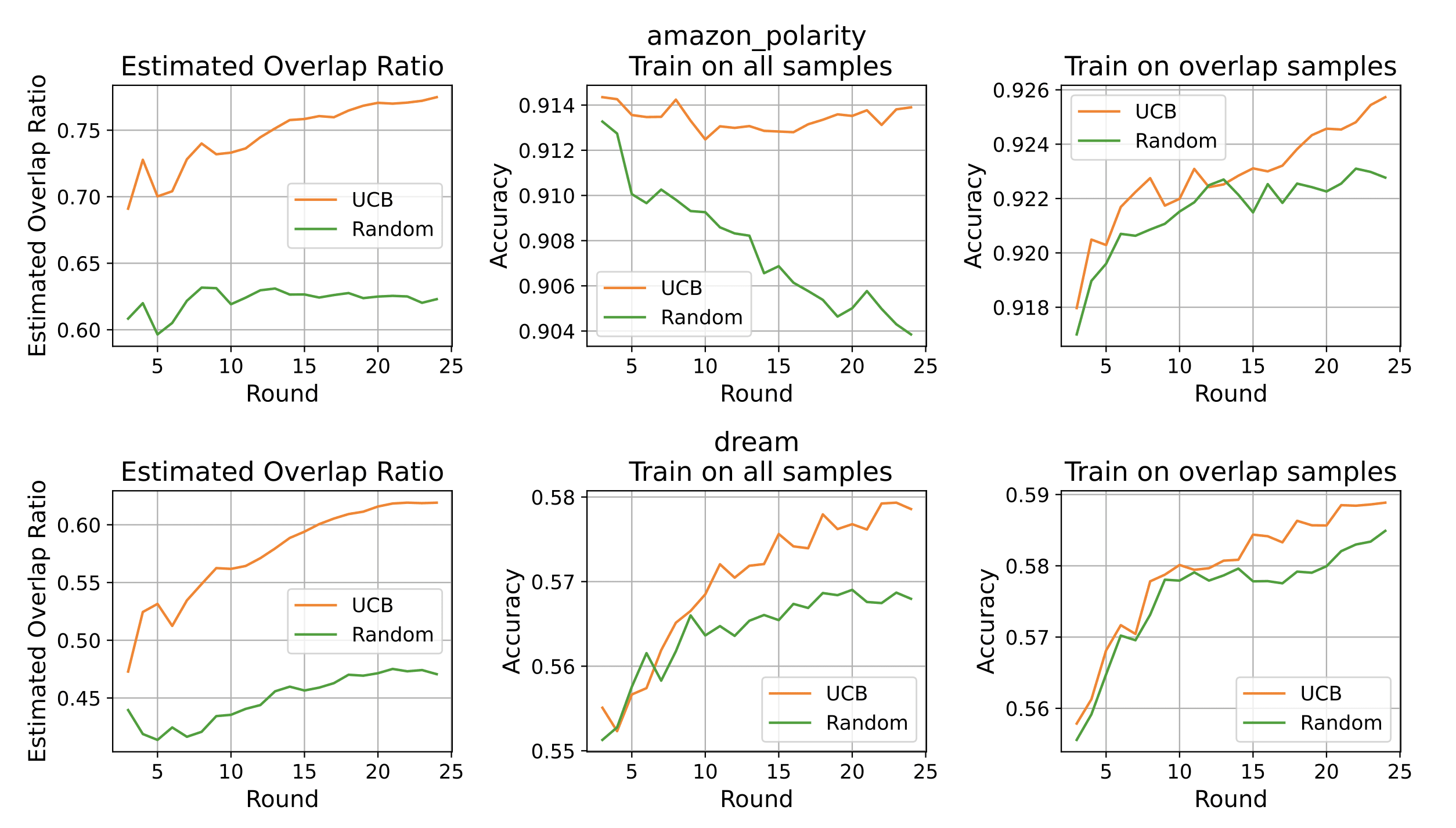

Figure 1: Concept behind Overlap Density and how it influences W2SG[1]

Shin et al. formalize easy patterns as features that are learnable by both weak and strong models. Conversely, hard patterns are only accessible by strong models as they require higher-order reasoning, and they tend to emerge as learning progresses.

A central challenge in W2SG is identifying conditions under which a model can bridge this gap from easy-to-hard patterns. Shin et al. hypothesize that overlapping structures between easy and hard patterns could facilitate this generalization.

Explaining Easy, Hard, and Overlapping patterns in LLM datasets via "How a Car Works"

In this particular example[2], a child (playing the role of a weak model — still learning the basics and struggling to reason about complex ideas) and an adult (plays the role of a strong model — capable of understanding complex ideas) learning about how a car works. The dataset consists of textual descriptions and examples related to cars. Easy, hard, and overlapping patterns represent different kinds of concepts within the dataset. In this scenario:

Easy concepts (basic, foundational knowledge):

- "If you push the gas pedal and then release it, the car will keep rolling because of momentum.""A car has four wheels, and the driver uses a steering wheel to turn the car.""Cars need fuel to run."

For the child (the weak model), these concepts are easy to understand and can be directly incorporated into their knowledge base. For the adult (strong model), these concepts offer little new information—they are already well-understood and don’t challenge the adult’s existing understanding.

Hard concepts (complex, interconnected knowledge):

- "A car’s engine uses pistons and cylinders to compress an air-fuel mixture and ignite it, producing energy through combustion. This energy pushes the pistons to rotate the crankshaft.""The transmission system adjusts the torque and speed ratio, allowing the engine's power to match the car’s speed requirements.""The alternator converts mechanical energy from the engine into electrical energy to recharge the car’s battery."

For the child, these concepts are overwhelming; they involve terms and processes (e.g., pistons, torque, transmission) that cannot be understood without additional foundational knowledge. For the adult, these concepts are more accessible, provided they already understand the easy concepts (e.g., how energy and motion interact). These hard concepts challenge the adult’s reasoning and allow the strong model to learn new, advanced relationships.

Overlapping concepts (bridging the gap between easy and hard):

- "The engine turns fuel into energy, which is transferred to the wheels via the drive shaft, a metal tube that moves power from the engine to the wheels.""The brakes on a car create friction to slow it down by converting the car’s kinetic energy into heat energy.""The power steering system reduces the effort needed to turn the steering wheel by using hydraulic or electric pressure."

Here’s where the generalization dynamic comes in:

- The child learns overlapping concepts from the prior knowledge of easy patterns. Through these concepts, the child can generalize parts of the dataset containing mixed (easy and hard) concepts. For example:

- The child might generalize: "The engine turns fuel into energy for the drive shaft."

- The adult can now generalize from the information provided by the child to tackle previously unknown/incomplete concepts such as: "The drive shaft transfers rotational energy to the differential."

From a data-centric perspective, overlap density is a crucial property of datasets. It ensures that concepts are distributed in a way that facilitates W2SG:

- Weak models bootstrap the process by learning and labelling overlapping patterns, which improves generalization to harder concepts.Strong models leverage the weak model’s labelled data to reinforce and refine their deeper knowledge, ultimately improving their performance in a controlled manner

Overlap Density: The Data Multiplier Effect

Shin et al.'s central insight: W2SG succeeds when datasets contain sufficient "bilingual" examples where easy and hard patterns coexist (termed overlap density).

These overlap points act as Rosetta Stones that enable strong models to:

- Decode Hard Patterns - Use weak supervision as cryptographic keys to unlock latent complex featuresExtrapolate Beyond Supervision - Generalize to pure-hard examples through pattern completion mechanismsFilter Alignment-Critical Data - Identify samples where capability gains won't compromise safety guarantees[3]

The paper also identifies three distinct operational regimes through controlled experiments:

| Regime | Overlap Density | W2SG Outcome |

|---|---|---|

| Low | Insufficient overlap points or overly noisy detection | Worse than weak model (insufficient decryption keys) |

| Medium | Adequate overlap points and moderate noise levels | Matches/slightly exceeds weak model (partial pattern completion) |

| High | Sufficient overlap points with minimal noise | Approaches strong model ceiling (full cryptographic break-through) |

Here are a few experimental results:

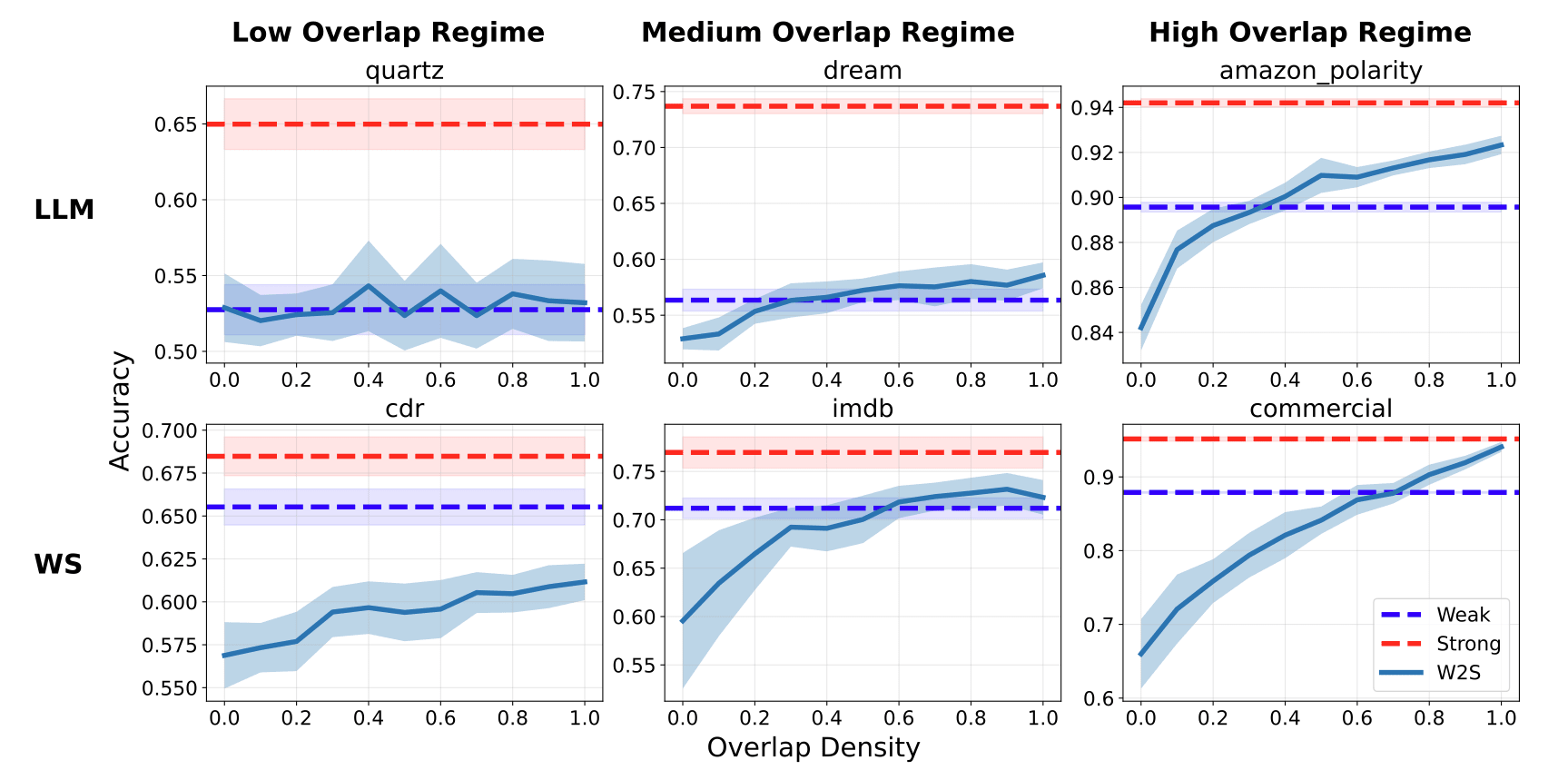

Figure 2: W2SG Performance vs overlap density regimes.[4]

Analysis: Why This Changes Alignment Strategy

Key Findings:

- Data AND Algorithms: Curating overlap points substantially improves W2SG without tweaking model architectures or training parameters[5]Overlap detection algorithm matters: Their UCB-based selection algorithm outperforms random sampling[6]Noise resilience: Overlap detection works even with 20% to 30%+ label noise through confidence thresholding[7]

Broader Alignment Implications

This work suggests several paradigm shifts:

- Data as Alignment Lever:

Overlap engineering could let us:

- Constrain capabilities via pattern availabilityBuild in oversight anchors through forced overlapsCreate "fire alarms" when overlap density drops

High overlap density may resolve the alignment tax problem by making safety-preserving features necessary for capability gains.

- Safety Datasets must be designed with overlap metrics.

The paper's theoretical framework (Section 3.1) shows that overlap density acts as an information bottleneck between weak and strong models. This means:

- High overlap → Strong model inherits weak supervisor's safety propertiesLow overlap → Strong model diverges unpredictably

- Multi-Level Overlaps: Extending to N-way pattern intersections, allowing more complex patternsDynamic Density: Adaptive sampling during training, depending on the overlap regime and W2SG performance improvementsAdversarial Overlaps: Testing robustness against overlap poisoning

Research Toolkit: Implementing the Paper's Insights

To access the toolkit, please visit the GitHub repository.

The Overlap Density toolkit is a practical implementation of the concepts introduced in Shin et al.'s (2024) research. It focuses on analyzing and leveraging overlap density in training datasets. By providing tools to measure, analyze, and experiment with overlap density, the toolkit empowers researchers to explore how data-centric features can significantly enhance W2SG. This approach shifts the focus from purely algorithmic improvements to optimizing data properties for better performance.

The toolkit is designed for researchers aiming to:

- Investigate how overlap density facilitates W2SG between large language models (LLMs)Experiment with mixing overlapping and non-overlapping data points to study their effects on model performanceBuild upon Shin et al.'s findings to develop new alignment strategies that balance safety and capability

Key Features

- Dataset Processing: Prepares datasets for training, validation, and testing, ensuring compatibility with various formats.Model Initialization: Supports weak and strong models with optional configurations like Low-Rank Adaptation (LoRA).Overlap Density Calculation: Measures overlap density using activations and labels, with built-in threshold detection.Mixing Experiments: Enables controlled mixing of overlapping and non-overlapping data points to study their impact on performance.Visualization and Exporting: Provides tools for plotting results and saving metrics in JSON format for further analysis.

Potential Applications

- AI Alignment Research: Use overlap density as a metric to design datasets that promote safe generalization in AI systemsCurriculum Learning: Develop training curricula where weak models bootstrap strong models by leveraging overlapping patternsRobustness Testing: Evaluate model resilience under varying levels of overlap density, including scenarios with noisy or adversarial overlapsDataset Engineering: Optimize datasets for specific tasks by balancing easy, hard, and overlapping patterns

Conclusion & Call to Action

Shin et al.'s research on weak-to-strong generalization (W2SG) highlights the transformative role of overlap density in AI alignment. By focusing on dataset properties rather than solely on algorithms, they demonstrate how overlapping examples — where easy and hard patterns coexist — enable weak models to bootstrap strong ones, improving generalization while balancing safety and capability.

Key insights include:

- Overlap density bridges the gap between weak and strong generalization.Data-centric approaches can address alignment challenges like mesa-optimization and scalable oversight.

This research opens new avenues for exploration. Researchers are encouraged to:

- Extend overlap density concepts to multi-level pattern intersections.Develop adaptive sampling strategies to optimize overlap density during training.Test robustness under adversarial or noisy overlaps.Collaborate across disciplines to refine these approaches and address broader alignment challenges.

By building on these findings, the AI community can advance toward safer, more capable systems while addressing critical alignment concerns.

Credits & Acknowledgments

This analysis builds entirely on the groundbreaking work of Changho Shin and colleagues. Also a huge thanks to other researchers whose works I used to learn more about W2SG, such as EleutherAI and their blog and OpenAI team.

- ^

From the paper:

Left (a): overlapping easy and hard patterns in our dataset are the key to weak-to-strong generalization. Learning from overlapping points, where easy features and hard features coexist, enables a weak-to-strong model that can generalize, while is limited to reliably predicting points with easy patterns.

Right (b): adding more such overlapping points has little influence on the performance of the weak model, but dramatically improves the performance of the weak-to-strong model. Adding such points—even a small percentage of the dataset—can push against the limits of the strong model.

- ^

This example reflects my perspective on the concepts after analyzing the paper and working on the code for the past month. It can be flawed! Additionally, this serves as a fun and educational example inspired by my efforts to explain the inner workings of a car to my partner.

- ^

This is not an exhaustive list from my analysis.

- Pattern Isolation Guardrails where high-overlap data enables:

- Controlled Capability - limits learning to hard patterns verifiable through weak supervisionInterpretable Updates - changes track measurable overlap metrics rather than black-box improvements

def safety_filter(data_sources): for t in 1...T: # Estimate alignment risk inversely with overlap confidence safety_scores = [ 1/(1 + overlap_ci[source]) # Lower CI width = higher safety for source in data_sources ] selected = argmax(safety_scores) collect_data(selected) - ^

From the paper:

Red dashed (Strong) lines show strong model ceiling accuracies; blue dashed (Weak) lines represent weak model test accuracies; and W2S lines represent the accuracies of strong models trained on pseudolabeled data with a controlled proportion of overlap density.

The LLM label refers to their language model experiments, that are followed the setup described in EleutherAI (2021), which replicates Burns et al. (2023); and the WS label refers to weak supervision setting, where they used datasets from the WRENCH weak supervision benchmark (Zhang et al., 2021)

- ^

OpenAI mentions in their paper:

We are still far from recovering the full capabilities of strong models with naive finetuning alone, suggesting that techniques like RLHF may scale poorly to superhuman models without further work.

That's where overlap density comes in to improve W2SG performance from a data-centric perspective and as one of the powerful tools in the hands of researchers.

- ^

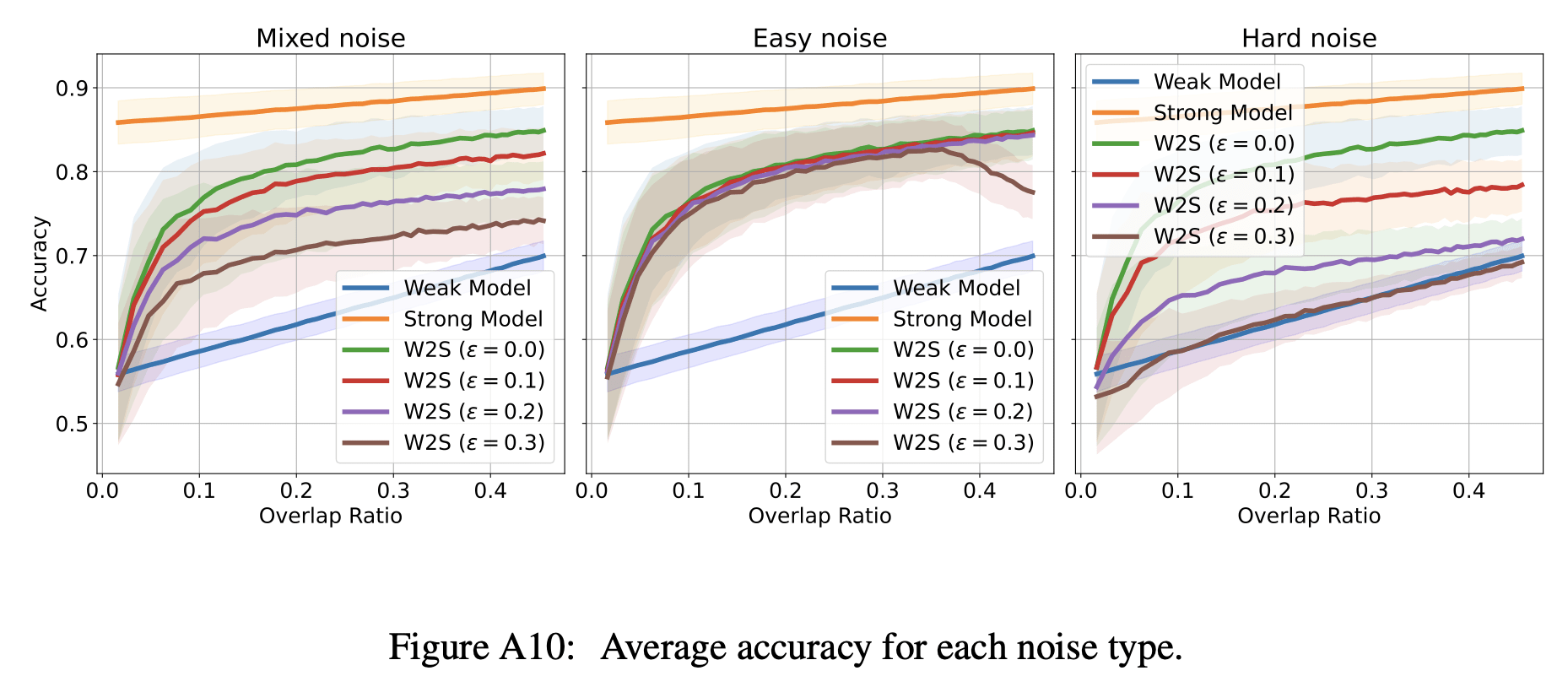

Some experimental results with their UCB-Based Data Selection for Maximizing Overlap vs random sampling:

From the paper: "Data selection results with Algorithm 1 (UCB-based algorithm) for Amazon Polarity and DREAM datasets. We report the average of 20 repeated experiments with different seeds. We observe that the data source selection procedure, based on overlap density estimation, can produce enhancements over random sampling across data sources."

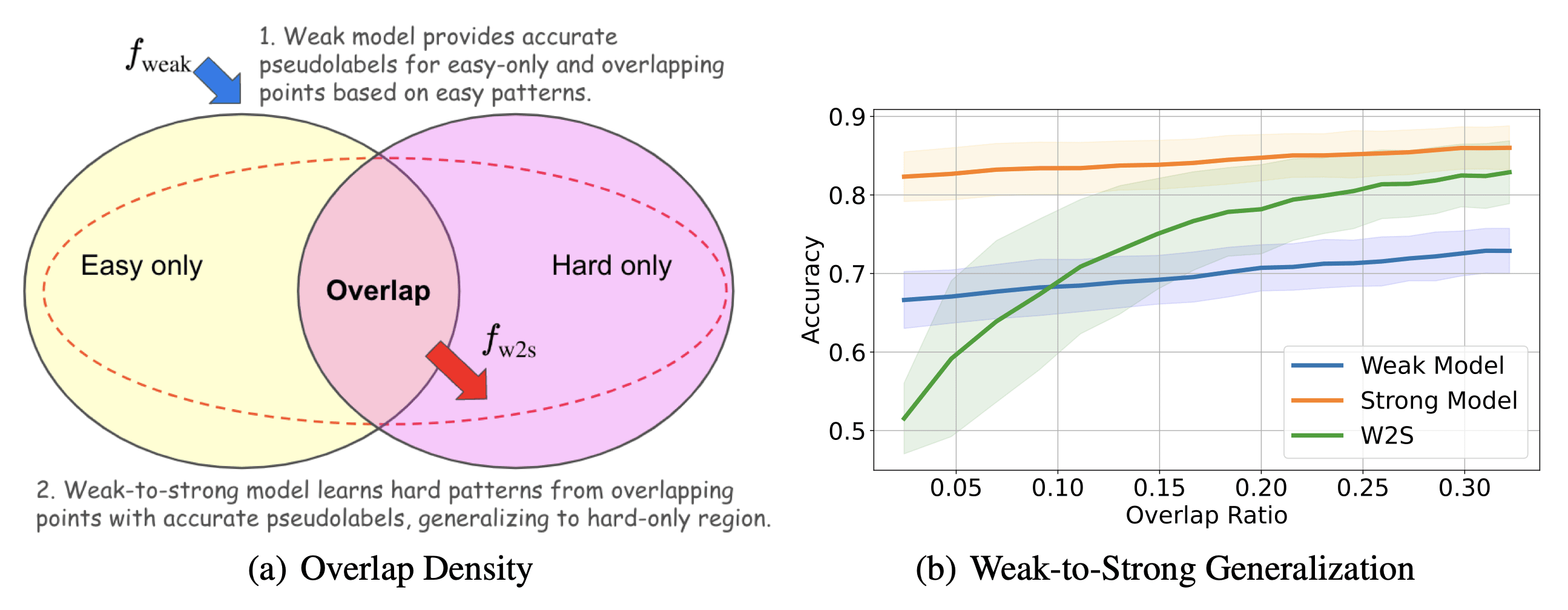

- ^

According to the synthetic experimental results, a weak-to-strong (W2S) model still outperforms a weak model with 30%+ of mixed noise (from the sampling process and overlap detection)

From the paper: "These scenarios are as follows:

(1) Mixed noise: Half of the errors select easy-only points, and the other half select hard-only points;

(2) Easy noise: All errors select easy-only points;

(3) Hard noise: All errors select hard-only points."In practice, noise may be introduced from a sampling process or overlap detection algorithm.

- ^

While Shin et al. don't explicitly test capability limitation, their core theorem proves that overlap density bounds strong model performance:

(my oversimplified take, hehe)

This creates a mathematical basis for intentional capability suppression through:

- Strategic under-labeling of high-risk capability areasDensity-aware dataset balancing

Discuss