There is a surprising connection between the Morris Worm and ChatGPT.

Model Free Methods Workshop

In my video Model Free Methods Workshop, I ask the students to analyze four problems at a high level, solving each one in two different ways: first using a Reductionist Stance, and then using a Holistic Stance.

When I held the workshop, I knew that Holism was a dirty word in academia, so I initially used “Model Free Methods” as a euphemism.

The last problem is about mind reading. But the first one is in many ways more interesting.

The first task is to write a program to detect spelling errors in text. Not correct them, just detect them.

In the workshop video I ask “What is the first question you have to ask if you are writing a spelling error catching program Reductionistically?”

The surprising (or not) answer is “You have to pick a language”.

If we were creating a spelling error detector using 20th Century NLP, we would use word lists or dictionaries, and possibly grammars or WordNet. Such dictionaries are obviously language-specific, since they list the words of one target language.
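To make the language dependence concrete, here is a minimal Python sketch of the dictionary-lookup approach. The tiny `ENGLISH_WORDS` set and the `find_unknown_words` helper are hypothetical stand-ins for a real dictionary-based checker; the only point is that the word list fixes the language before any text is seen.

```python
import re

# Hypothetical toy dictionary; a real checker would load a full word list
# for one specific language, which is the Reductionist commitment up front.
ENGLISH_WORDS = {"the", "cat", "sat", "on", "mat"}


def find_unknown_words(text: str, dictionary: set[str]) -> list[str]:
    """Return the words of `text` (lowercased) that are missing from `dictionary`."""
    words = re.findall(r"[^\W\d_]+", text.lower())  # runs of letters only
    return [w for w in words if w not in dictionary]


print(find_unknown_words("The cat sta on the mat", ENGLISH_WORDS))
# ['sta']
print(find_unknown_words("Le chat est sur le tapis", ENGLISH_WORDS))
# ['le', 'chat', 'est', 'sur', 'le', 'tapis']
```

The French sentence is perfectly correct, yet every word is flagged, because the dictionary only knows English.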

Can you do this without choosing the language before you start the project? Well, modern LLMs like GPT already know dozens of languages and can effectively detect the errors in your documents, and some can even rewrite them. They are not using NLP; they are using 21st Century NLU (Natural Language Understanding).

How come LLMs can understand multiple languages? They learned them. And systems that learn are Holistic. So there is our clue: the Holistic Stance solution to detecting spelling errors is a system that can learn every language we want to use it on.

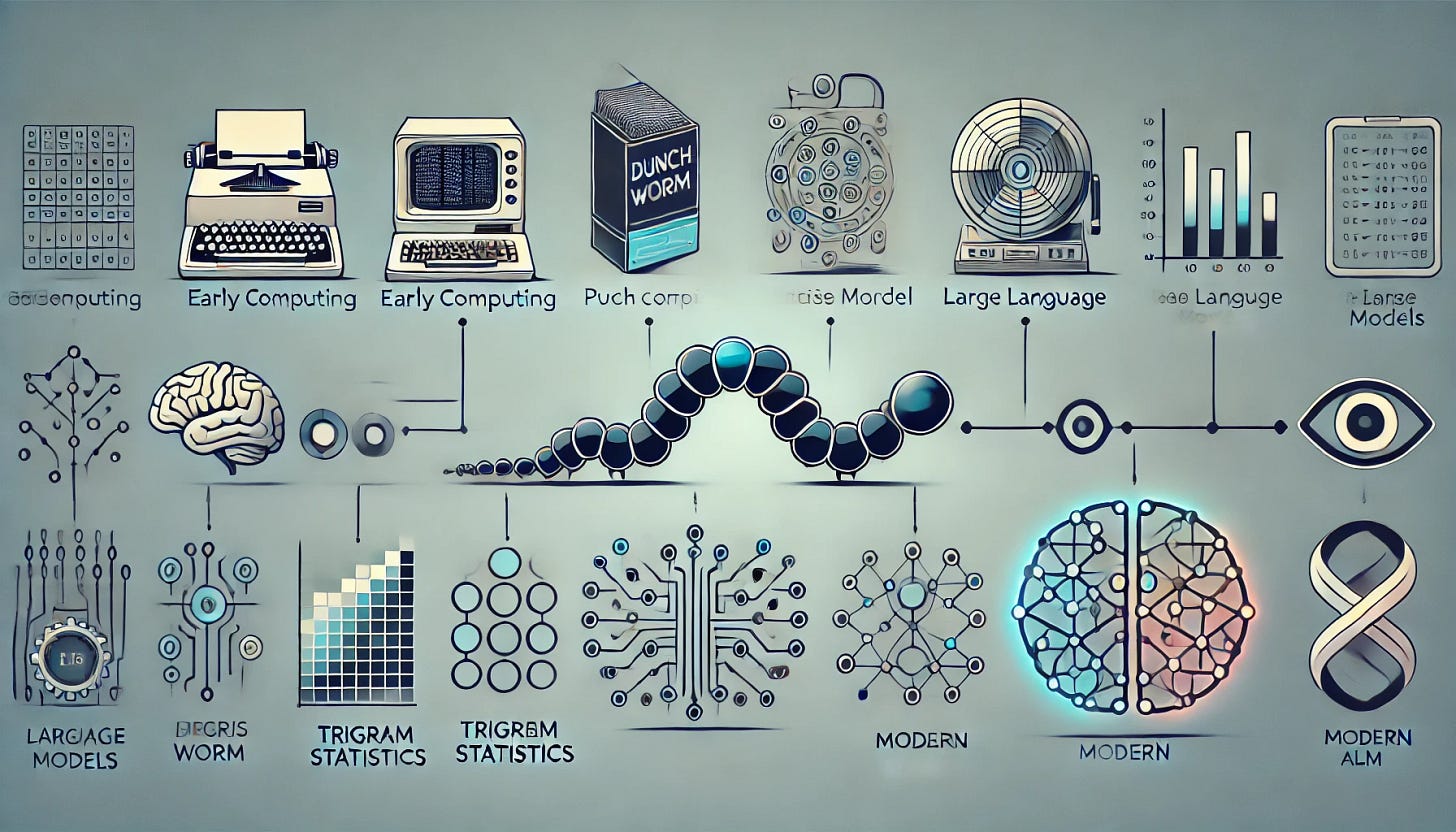

Now we can ask “What was the first and simplest Learned Language Model?”

The First Language Model

Here is an algorithm that was published many years ago.

The idea is to gather (“learn”) letter trigram statistics (counts of three-character sequences such as “the” or “ing”) for the target language, and then to flag every trigram that has not been encountered previously (with at least some minimum frequency) in the training corpus. This is not 100% reliable, but pragmatically it can outperform many of the old NLP approaches, and it is language-agnostic, which dictionary-based NLP never was.
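Here is a minimal Python sketch of the learn-then-flag idea as described above. It assumes character trigrams padded with a space at each word boundary, and a hypothetical `min_count` threshold standing in for “at least some frequency”. The function names are illustrative; this is not a port of typo.c.

```python
import re
from collections import Counter


def words(text: str) -> list[str]:
    """Lowercased runs of letters; works for any alphabetic script."""
    return re.findall(r"[^\W\d_]+", text.lower())


def word_trigrams(word: str) -> list[str]:
    """Character trigrams of one word, padded with spaces to mark its edges."""
    padded = f" {word} "
    return [padded[i:i + 3] for i in range(len(padded) - 2)]


def learn(corpus: str) -> Counter:
    """Statistics-gathering ("learning") mode: count every trigram in the corpus."""
    counts: Counter = Counter()
    for w in words(corpus):
        counts.update(word_trigrams(w))
    return counts


def unseen_trigrams(word: str, counts: Counter, min_count: int = 1) -> list[str]:
    """Trigrams of `word` seen fewer than `min_count` times during learning."""
    return [t for t in word_trigrams(word) if counts[t] < min_count]


counts = learn("the theory of the thermal system")
print(unseen_trigrams("thermal", counts))  # []
print(unseen_trigrams("teh", counts))      # [' te', 'teh', 'eh ']
```

Raising `min_count` makes the detector stricter: with `min_count=5`, even the common trigram `the` (seen four times in this tiny corpus) would be flagged.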

We note that this algorithm belongs to the category “Statistical Methods for Natural Language Understanding”. Modern LLMs are more “context-exploiting” than they are “statistical”, but that’s another show.

An example shows how this trigram algorithm works in practice: You are about to submit your fourth paper to a yearly conference. How can you flag typos in this paper?

You can feed the three older papers you published through the program in its trigram-statistics-gathering mode. Then feed the fourth (new) paper through in detect mode, and it will point out every word that contains a trigram it has not seen before. This is amazingly effective: no matter how much jargon there is in your papers, if it recurs year to year, it will not be flagged.
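The two-mode workflow can be sketched the same way. The three short strings are hypothetical stand-ins for the older papers, and `train` and `detect` are illustrative names; a word is reported if any of its trigrams was seen fewer than `min_count` times during training.

```python
import re
from collections import Counter


def words(text: str) -> list[str]:
    """Lowercased runs of letters; works for any alphabetic script."""
    return re.findall(r"[^\W\d_]+", text.lower())


def word_trigrams(word: str) -> list[str]:
    """Character trigrams of one word, padded with spaces to mark its edges."""
    padded = f" {word} "
    return [padded[i:i + 3] for i in range(len(padded) - 2)]


def train(papers: list[str]) -> Counter:
    """Statistics-gathering mode: count trigrams across all the older papers."""
    counts: Counter = Counter()
    for paper in papers:
        for w in words(paper):
            counts.update(word_trigrams(w))
    return counts


def detect(paper: str, counts: Counter, min_count: int = 1) -> list[str]:
    """Detect mode: distinct words containing a trigram seen < min_count times."""
    flagged: list[str] = []
    for w in words(paper):
        if w not in flagged and any(counts[t] < min_count for t in word_trigrams(w)):
            flagged.append(w)
    return flagged


# Hypothetical stand-ins for the three older papers.
old_papers = [
    "Holistic methods learn models from data.",
    "Model free methods learn from data without hand written models.",
    "Learned language models detect errors in text.",
]
new_paper = "Holistic models detect erors in text without hand written modles."

counts = train(old_papers)
print(detect(new_paper, counts))  # ['erors', 'modles']
```

The jargon word “holistic” passes because it recurs in the older papers, while both misspellings are caught. Nothing in `train` or `detect` names a language, so the same calls work on French papers; the letter pattern also matches accented characters such as é.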

The four papers could have been written in French and it would have worked anyway. The system learns some amount of the language from its corpus, which is the first three papers.

Very clever idea. Sounds straightforward. Was it ever made available as a commercial product? Yes, some of the earliest UNIX release tapes contained a program named typo.c that did exactly this. You can find the program on GitHub.

And it is the first program that I know of that learns a Model for a Human Language and then uses it to successfully accomplish a language understanding task. As such, I think it is historically significant.

The author of the program? Robert Morris (Robert H. Morris of Bell Labs), the father of Robert Tappan Morris, now a professor at MIT CSAIL, who created the Morris Worm.