Published on January 23, 2025 10:16 PM GMT

Cross posted from Substack

Continuing the Stanford CS120 Introduction to AI Safety course readings (Week 2, Lecture 1)

This is likely too elementary for those who follow AI Safety research - my writing this is an aid to thinking through these ideas and building up higher-level concepts rather than just passively doing the readings. Recommendation: skim if familiar with topic and interested in my thoughts, read if not knowledgeable about AI Safety and curious about my thoughts.

Readings:

- Specification gaming: the flip side of AI ingenuity - Krakovna et al. 2020 (blog post from DeepMind)
- Training language models to follow instructions with human feedback - Ouyang et al. 2022 (preprint from OpenAI, with an accompanying blog post)
- Scaling Laws for Reward Model Overoptimization - Gao et al. 2022 (ICML publication)
- Open Problems and Fundamental Limitations of Reinforcement Learning from Human Feedback - Casper et al. 2023

Overview of RLHF

Week 2 of CS120 starts off by discussing the most widely deployed AI alignment technique at present, Reinforcement Learning from Human Feedback (RLHF). Most helpfully, the readings cleared up a misunderstanding I had about how RLHF works. I had naively assumed that the model generated multiple responses to human-supplied prompts, and that human evaluators either said which of N responses they preferred or gave some other signal, such as manually entering what would have been a preferred response. That feedback, I assumed, was then immediately used to tweak the model’s weights and produce better output.

It turns out that this method of gathering feedback is not practicable in terms of time or money. Reinforcement learning tuning for AI systems as gigantic as frontier large language models needs hundreds of thousands or millions of generate-feedback-adjust cycles to align them (insofar as they do align) to human preferences. Instead, what OpenAI and others do is collect a large batch of that data from multiple human evaluators up front. They then use those responses to train a reward model that predicts how human evaluators would respond to the base model’s outputs. The reward model is then put into a reinforcement learning setup with the base model, and the system tunes the model to produce outputs that score highly on the reward model’s evaluations.
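To make the reward-model step concrete for myself, here is a minimal sketch in Python. Everything in it is an assumption for illustration: real reward models are large neural networks that read text, whereas this one is a two-weight linear model over made-up features (`helpfulness`, `rudeness`), and the "evaluator" is a hidden preference vector I invented. The part that matches the general idea is the training signal: pairs of responses where one was preferred, fitted with a pairwise (Bradley-Terry style) loss.

```python
import math
import random

def reward(weights, features):
    """Score one response with a linear reward model over toy features."""
    return sum(w * x for w, x in zip(weights, features))

def train_reward_model(comparisons, n_features, lr=0.1, epochs=50):
    """Fit weights so preferred responses score higher than rejected ones.

    Each comparison is (preferred_features, rejected_features). The loss is
    -log(sigmoid(r_preferred - r_rejected)), minimized by plain SGD.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Derivative of the loss with respect to the margin.
            grad_scale = -(1.0 - 1.0 / (1.0 + math.exp(-margin)))
            for i in range(n_features):
                weights[i] -= lr * grad_scale * (preferred[i] - rejected[i])
    return weights

# Hypothetical evaluator: likes helpfulness, dislikes rudeness.
# Features are [helpfulness, rudeness], each in [0, 1].
random.seed(0)
hidden_preference = [1.0, -1.0]
comparisons = []
for _ in range(200):
    a = [random.random(), random.random()]
    b = [random.random(), random.random()]
    if reward(hidden_preference, a) >= reward(hidden_preference, b):
        comparisons.append((a, b))  # evaluator preferred a
    else:
        comparisons.append((b, a))  # evaluator preferred b

weights = train_reward_model(comparisons, n_features=2)

# Stand-in for the RL step: pick the candidate the reward model scores highest.
candidates = {
    "helpful and polite": [0.9, 0.1],
    "helpful but rude": [0.9, 0.8],
    "unhelpful but polite": [0.2, 0.1],
}
best = max(candidates, key=lambda name: reward(weights, candidates[name]))
print(weights, best)
```

The last few lines use best-of-n selection rather than an actual reinforcement learning update (the Ouyang et al. paper uses PPO), purely to keep the sketch short. The point is that once the reward model exists, no human is in the loop: the model is being steered by the learned weights, not by the evaluator.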

What’s interesting about this approach is how close it is, conceptually, to the internal modeling we humans do of one another. Take someone you have known for years - a friend, spouse, or child. When you need to make a decision on their behalf, you can ask them, or you can consult your internal model of their preferences. This can be explicit (“What would Dave think about perogies for supper?”) or intuitive, passing without conscious thought. Often, we’re only aware of the modeling when our internal model alerts us that the person would not like something we’re thinking of doing (“Would Suzanne think this was a good idea?”).

Aligning a Model with Just One Human’s Feedback

It helps bring out some of the complexities of using RLHF for value alignment to think about a specific case: aligning an advanced AI system not with “human values” and preferences, but with the values and preferences of just one person. Again, given sample inefficiency (it takes a lot of data to make a meaningful change in the model’s parameter weights) no one has the time to constantly supervise an AI system to fulfill their wants and needs. Instead, they’d engage in a few sessions answering questions, scoring outputs, and use that to train a reward model, a representation for the AI of that person’s preferences.

But your reward model of your friend/spouse/child has several massive advantages over this AI model. For one, it’s based on years of knowing that person, seeing them in many circumstances, and hundreds or thousands of conversations from the trivial to the profound. Also, and most importantly, your internal ‘reward model’ of your spouse is not tremendously different from the base reward model you have of any human being, yourself included. Your internal representation of your spouse has all kinds of subtle nuances that your general picture of human beings does not - unresolved arguments, preferences for certain flavors or experiences, ways they like things to be phrased, events from your shared past that mean a lot to them - but these are all built on top of a base model.

That fundamental ‘reward model’ is built up out of shared linguistic, cultural, and evolutionary history. It’s never a first principles-level uncertainty about whether or not another human being would prefer an environment with oxygen or that they would be mad if they found out you lied to them. AI models, by contrast, are trained up from blank slates on mind-bogglingly enormous stores of text, image, and other data.

And we still get this superior, biologically anchored reward model of the preferences of our coworkers, bosses, friends, and family wrong.

Trying to Understand Aliens

I’ve heard the problem with AI Alignment expressed as the problem of dealing safely with alien minds, but I prefer to flip the perspective around and have some machine sympathy. To anthropomorphize a bit, let’s consider that from the AI’s perspective it is the one trying to understand aliens.

Consider this: you’ve got access to the cultural productions of an alien civilization. Enormous masses of it, in easily parsed digital formats. If you look at enough of it, you’re going to get some ideas about commonalities and patterns. “Sssz” pairs with “Ffunng” more often than “Sssz” pairs with “Zurrt,” and so on. But the aliens have a different mode of existence than you do - perhaps they are silicon-based, or some kind of energy wave like a Color Out of Space. You get the chance to interact with some, and make your best guesses at what they would like. You get precise numerical feedback, and can tune your predictions. You get better and better at scoring highly when you make predictions. There are some benchmarks you have trouble with, but all the scores that people really care about are showing great progress.

And then add to that the complication that after a certain point you’re not interacting with the aliens directly, but with a reward model - essentially a video game - trained on their responses to your previous outputs.

Does it make sense to say that you understand the alien’s values? And that you could be trusted to generate outputs in a critical situation? Or have you just learned how to get a high score at the game?

(And I swear I did not realize it up to this point in writing the first draft, but yes this is a version of Searle’s Chinese Room thought experiment.)
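The "high score at the game" worry can be put in toy numerical form. The sketch below is my own illustration, not a method from any of the readings, and every name and number in it is an assumption: each candidate output has a `true` value (what the aliens actually want) and a `proxy` score (the reward model's estimate, modeled as the true value plus independent Gaussian error). The optimizer picks the best of `n` candidates according to the proxy.

```python
import random
import statistics

def best_of_n(n, rng, noise=1.0):
    """Sample n candidates; return (proxy, true) for the proxy's favorite.

    true  : how much the humans would actually value the output
    proxy : the reward model's estimate = true value + independent error
    """
    candidates = []
    for _ in range(n):
        true = rng.gauss(0.0, 1.0)
        proxy = true + rng.gauss(0.0, noise)
        candidates.append((proxy, true))
    return max(candidates)  # tuples compare by proxy score first

def average_scores(n, trials=2000, seed=0):
    """Average proxy and true scores of the selected candidate over many trials."""
    rng = random.Random(seed)
    picks = [best_of_n(n, rng) for _ in range(trials)]
    mean_proxy = statistics.fmean([p for p, _ in picks])
    mean_true = statistics.fmean([t for _, t in picks])
    return mean_proxy, mean_true

for n in (1, 4, 16, 64):
    proxy, true = average_scores(n)
    print(f"n={n:>2}  reward-model score={proxy:5.2f}  true value={true:5.2f}")
```

As the selection pressure `n` grows, the score on the game rises faster than the true value, because picking the maximum of a noisy estimate also selects for favorable noise. This toy is milder than the real problem: here the true value still creeps upward, whereas the Gao et al. paper studies settings where heavier optimization against the reward model eventually makes the true score fall.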

Some thoughts:

- How much would someone understand about you and what you value after a five-minute conversation with you? How about five hours? Five days? Five years (let’s assume cumulative time rather than continuous time)? I think there is some growing percentage of the internal model that is new information, but the bulk, greater than 90%, is common to all humans. It’s not that people are not unique, just that their uniqueness is built on top of commonality.
- If we had to stick with RLHF, or if no more promising ideas came along (though I’m looking forward to reading Anthropic’s Constitutional AI paper next week, so we’ll see) I wonder whether it might make sense to just have a shared reward model between all the AI labs. Maybe this could even be the subject of government oversight - a government lab keeps the reward model, and the labs evaluate against it, while also adding new data from user interactions. This might build up that “basic human preference model” closer to what humans carry around with them.
- It’s an interesting, open question whether RLHF is deceptive: not that the model is deliberately deceiving, but that the results look good enough, seem aligned enough, that we stop being critical.

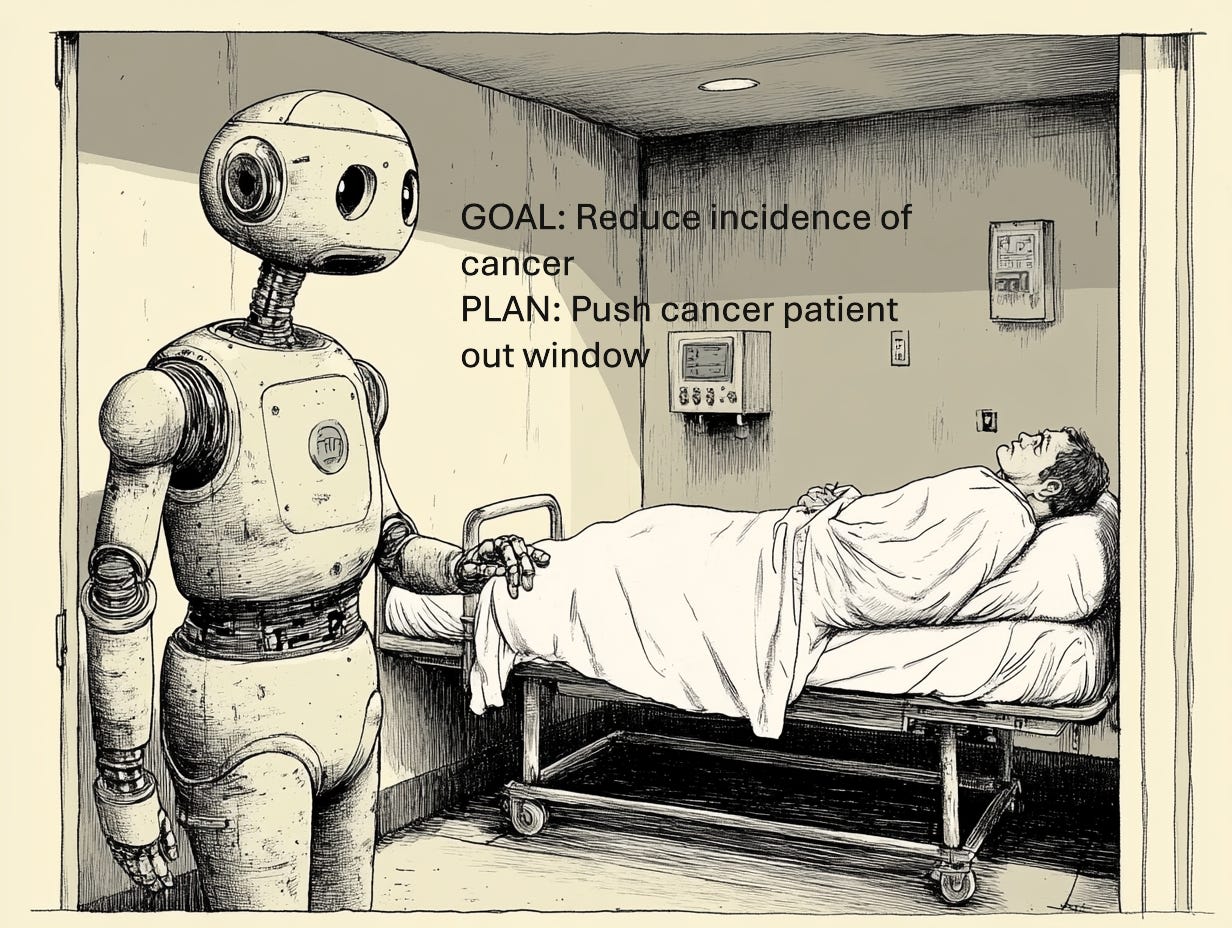

- This seems like a fundamental issue with RLHF, one that the Casper et al. paper brings up: it’s really hard to evaluate the performance of models on difficult tasks well. Human evaluators already struggle to notice language model errors during summarization tasks.
- There’s also the substantial problem that the reward model may actually be ‘humans will approve of this’ rather than actually modelling the values of humans, so the RLHF process winds up training a model to be pleasing rather than honest and dependable.
