Published on January 8, 2025 7:35 AM GMT

This is an article in the featured articles series from AISafety.info. AISafety.info writes AI safety intro content. We'd appreciate any feedback.

The most up-to-date version of this article is on our website, along with 300+ other articles on AI existential safety.

For a “monosemantic” neuron, there’s a single feature that determines whether or not it activates strongly. If a neuron responds only to grandmothers, we might call it a grandmother neuron. For a “polysemantic” neuron, in contrast, there are multiple features that can cause it to activate strongly.

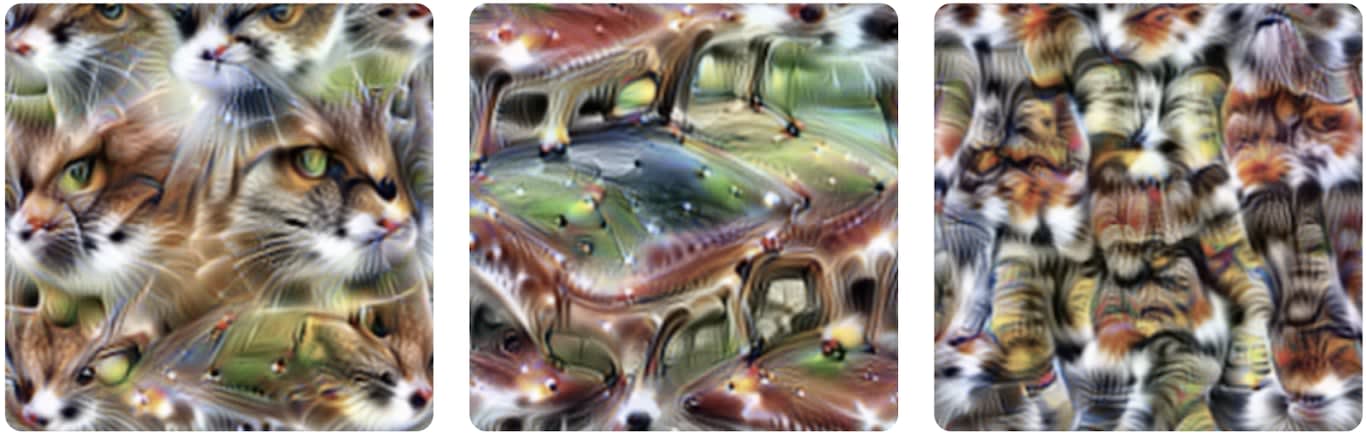

As an example, this image shows feature visualizations of a neuron that activates when it sees either a cat face, cat legs, or the front of a car. As far as anyone can tell, this neuron is not responding to both cats and cars because cars and cats share some underlying feature. Rather, the neuron just happened to get two unrelated features attached to it.

How do we know that the neurons are not encoding some sort of shared similarity?

Suppose a polysemantic neuron is picking out some feature shared by cars and cats. Say, the neuron is representing “sleekness”. Then we’d expect images of other “sleek” things, like a snake or a ferret, to activate the neuron. So if wegenerate lots of different images which highly activate our neuron, and find that they do contain snakes and ferrets, that’s evidence for the neuron picking up on a unified concept of sleekness. Researchers have run experiments like this on neurons like this one and found that, no, they just activate on cats and cars — just as the “polysemantic” hypothesis would lead us to expect.

Why do polysemantic neurons form?

Polysemantic neurons seem to result from a phenomenon known as “superposition”. Superposition means a neural net combines multiple features in individual neurons, so that it can pack more features into the limited number of neurons it has available, or use fewer neurons, conserving them for more important tasks. In fact, if we only care about packing as many features into n neurons as we can, then using polysemantic neurons lets us pack roughly as many as exp(C * n) features, where C is a constant depending on how much overlap between concepts you allow.[1] In contrast, using monosemantic neurons would only let you pack in n features.

What are the consequences of polysemantic neurons arising in networks?

Polysemantic neurons are a major challenge for the “circuits” agenda because they limit our ability to reason about neural networks. Because they encode multiple features, it’s harder to interpret these neurons individually when thinking about circuits. As an example: if we only have two polysemantic neurons, which encode five different features each, then we have effectively 25 different connections between features that are all governed by a single weight.

However, there has been some recent progress. In 2023, Anthropic claimed to achieve a breakthrough in this problem in their paper “Towards Monosemanticity”. Anthropic trained large sparse autoencoders to decompose the polysemantic neurons in a neural network into a larger number of monosemantic neurons, which are (claimed to) be more interpretable.

Christopher Olah of Anthropic stated he is “now very optimistic [about superposition]”, and would “go as far as saying it’s now primarily an engineering problem — hard, but less fundamental risk.” Why did we caveat Anthropic’s claims? Because some researchers, like Ryan Greenblatt, are more skeptical about the utility of sparse autoencoders as a solution to polysemanticity.

- ^

This is a consequence of the Johnson-Lindenstrauss lemma. As this estimate doesn’t account for using the exponential number of features for useful computations, it is unclear if neural networks actually achieve this bound in practice. (The use of polysemanticity in computations is an active research area. For a model of how polysemanticity aids computations, see “Towards a Mathematical Framework for Computation in Superposition”.) What about lower bounds on how much computation can be done with polysemantic neurons? Well, these estimates depend on assumptions about the training data, number of concepts, initialization of weights etc. So it is hard to give a good lower bound in general. But for some cases, we do have estimates: e.g., “Incidental polysemanticity” notes that, depending on the ratio of concepts to neurons, the initialization process can lead to a constant fraction of polysemantic neurons.

Discuss