Published on January 8, 2025 12:31 AM GMT

In my experience, the proofs that you see in probability theory are much shorter than the longer, more involved proofs that you might see in other areas of math (like e.g. analytical number theory). But that doesn't mean that good technique isn't important. In probability theory, there are a set of tools that are useful across a broad variety of situations and you need to be able to recognize when it's the appropriate time to use each tool in your toolkit.

One of the most useful of tools to have is Markov's inequality. What Markov's inequality says is that, given a non-negative random variable and a positive real number , the probability that is greater than can be upperbounded in the following manner:

This is a pretty simple formula. You might have even been able to guess it beforehand using dimension analysis. Probabilities are often thought of as dimensionless quantities. The expectation and the threshold both have units of "length", so we would need them to exactly cancel out to create a dimensionless quantity. We naturally expect that the probability of exceeding the threshold to (a) increase as the expectation of increases and (b) decrease as the value of the threshold increases. These sorts of arguments alone would point you in the direction of a formula of the form:

Why does equal in the case of Markov's inequality? There is probably some elegant scaling argument that uniquely pins down the power , but we won't dwell on that detail.

Markov's inequality might not seem that powerful, but it is. For two reasons:

One reason is that Markov's inequality is a "tail bound" (a statement about the behavior of the random variable far away from its center) that makes no reference to the variance. This has both its strengths and its weaknesses. The strength is that there are many situations where we know the mean of a random variable but not its variance, so Markov's inequality is the only tool in our repertoire that will allow us to control the tail behavior of the variable. The weakness is that because it assumes nothing about the variance (or any higher moments), Markov's inequality is not a very tight bound. If you also know the variance of your random variable, you can do much better (though you will need more heavy-duty techniques like moment-generating functions).

The other reason why Markov's inequality is powerful is that, in a sense, many properties of random variables are just statements about the mean (the expectation) of some other random variable.

For example, the variance of a random variable is nothing more than the expectation of the random variable where is the mean of . Let's say we know that the variance of is . Because is a non-negative random variable, it follows that is well-defined and also a non-negative random variable. We can then show that:

This relationship is special enough that it has its own name: Chebyshev's inequality. But it's just Markov's inequality in disguise! Let's do one more.

We can define the parametrized set of random variables . The parametrized set of expectations has a name called the moment-generating function (because if you take derivatives of with respect to and evaluate at , you get the moments of the original random variable ). We then have that:

Hey, that's the Chernoff bound!

Despite being fairly simple as math goes, it took a couple of exposures for Markov's inequality to properly stick with me. To see why, let's walk through the proof which conceptually involves two steps.

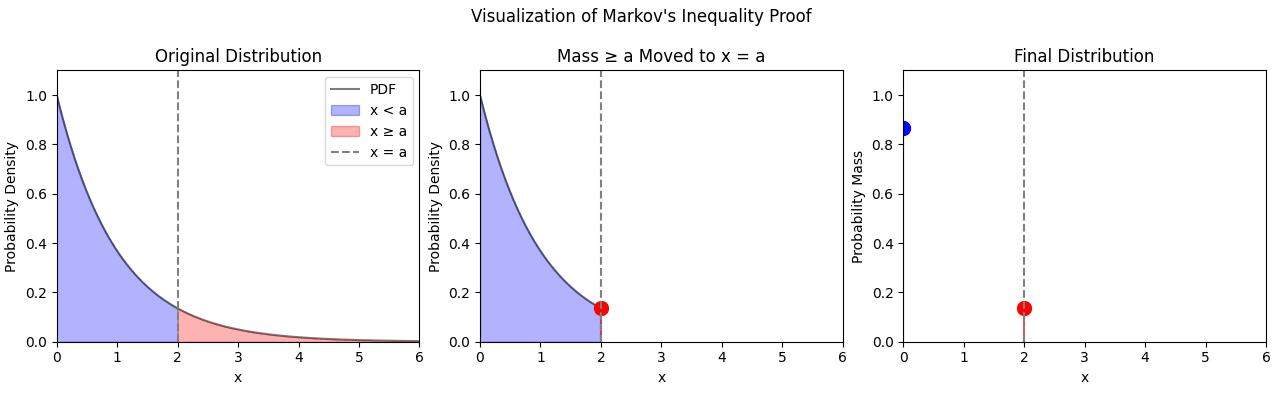

Let's say we have a threshold . We want to divide the probability distribution of into two regions: and .

If we take all of the probability mass for values of larger than and smush it at , then the new distribution will have an expectation which is less than or equal to the expectation of .

Similarly, if we take the probability mass for all points and smush it to , then the new distribution will also have an expectation less than or equal to the previous one.

What is the expectation of this final distribution? There would be two contributions to the expectation: the probability mass at multiplied by 0 and the probability mass at multiplied by . The first term vanishes so we are left with an overall expectation . We can then compare this expectation to the expectation of our original distribution and then divide by to get Markov's inequality:

Well this is a simple proof. Where did I struggle before?

An important thing to keep in mind is that Markov's inequality only holds for non-negative random variables. The first part of the proof is easy for me to remember because sticks out to me as an obvious threshold to smush probability mass to. But the second part where we smush all the mass to used to elude me because I didn't keep in mind that the non-negativity of makes a kind of threshold as well.

Discuss