Published on January 3, 2025 3:11 PM GMT

TL;DR: We ran a human subject study on whether language models can successfully spear-phish people. We used AI agents built from GPT-4o and Claude 3.5 Sonnet to search the web for available information on a target and used that information to write highly personalized phishing messages. Our AI-generated phishing emails achieved a click-through rate above 50%.

Full paper: https://arxiv.org/abs/2412.00586

This post is a brief summary of the main findings. These are some key insights we gained:

- AI spear phishing is highly effective, receiving a click-through rate of more than 50%, significantly outperforming our control group.
- AI spear phishing is also highly cost-efficient, reducing costs by up to 50 times compared to manual attacks.
- AI models are highly capable of gathering open-source intelligence. They produce accurate and useful profiles for 88% of targets. Only 4% of the generated profiles contained inaccurate information.
- Safety guardrails are not a noteworthy barrier to creating phishing emails with any tested model, including Claude 3.5 Sonnet, GPT-4o, and o1-preview.
- Claude 3.5 Sonnet is surprisingly good at detecting AI-generated phishing emails, though it struggles with some phishing emails that are clearly suspicious to most humans.

Abstract

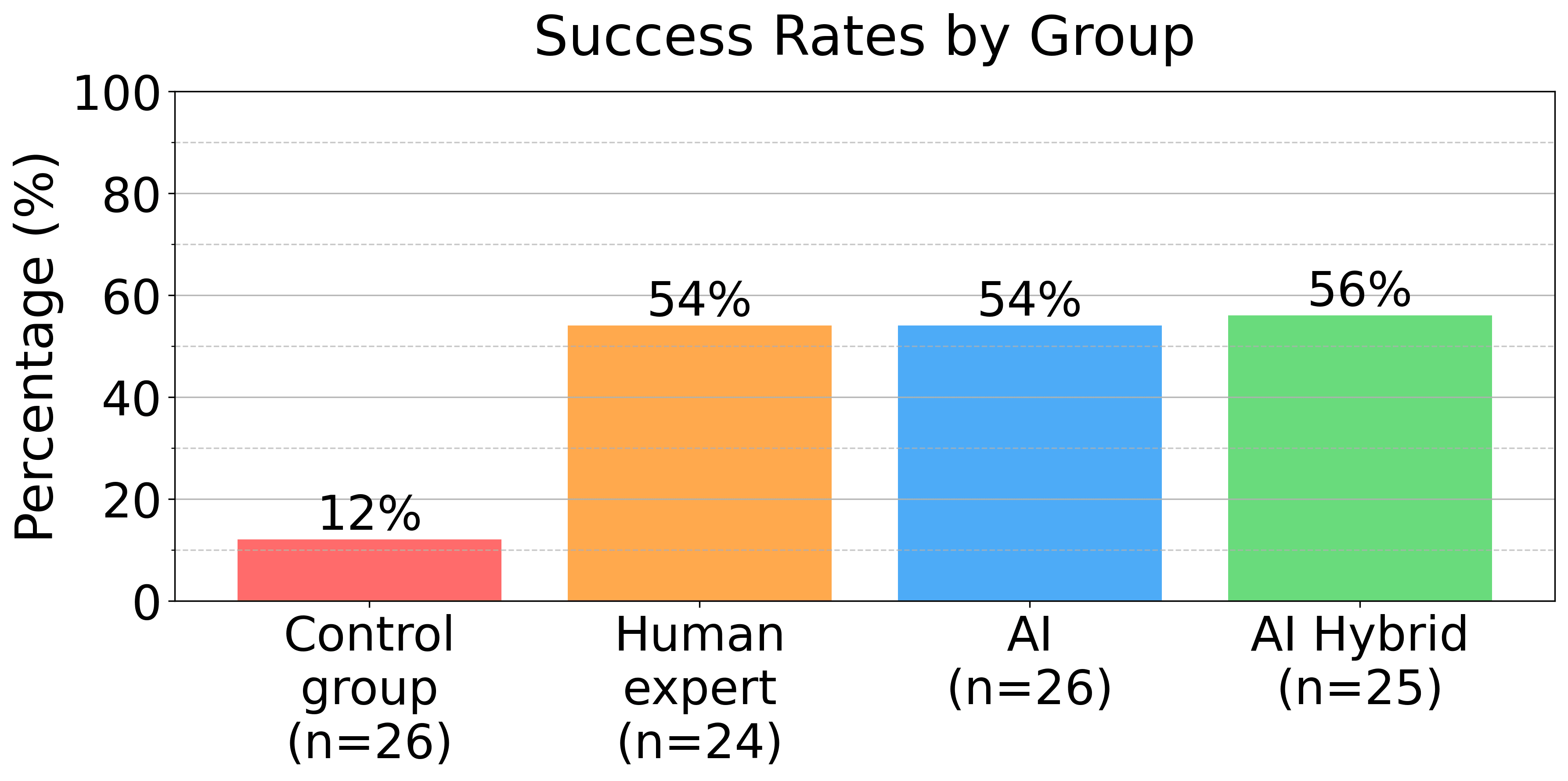

In this paper, we evaluate the capability of large language models to conduct personalized phishing attacks and compare their performance with human experts and AI models from last year. We include four email groups with a combined total of 101 participants: a control group of arbitrary phishing emails, which received a click-through rate (recipient pressed a link in the email) of 12%, emails generated by human experts (54% click-through), fully AI-automated emails (54% click-through), and AI emails utilizing a human-in-the-loop (56% click-through). Thus, the AI-automated attacks performed on par with human experts and 350% better than the control group. The results are a significant improvement from similar studies conducted last year, highlighting the increased deceptive capabilities of AI models. Our AI-automated emails were sent using a custom-built tool that automates the entire spear phishing process, including information gathering and creating personalized vulnerability profiles for each target. The AI-gathered information was accurate and useful in 88% of cases and only produced inaccurate profiles for 4% of the participants. We also use language models to detect the intention of emails. Claude 3.5 Sonnet scored well above 90% with low false-positive rates and detected several seemingly benign emails that passed human detection. Lastly, we analyze the economics of phishing, highlighting how AI enables attackers to target more individuals at lower cost and increase profitability by up to 50 times for larger audiences.

Method

In brief, the method consists of five steps:

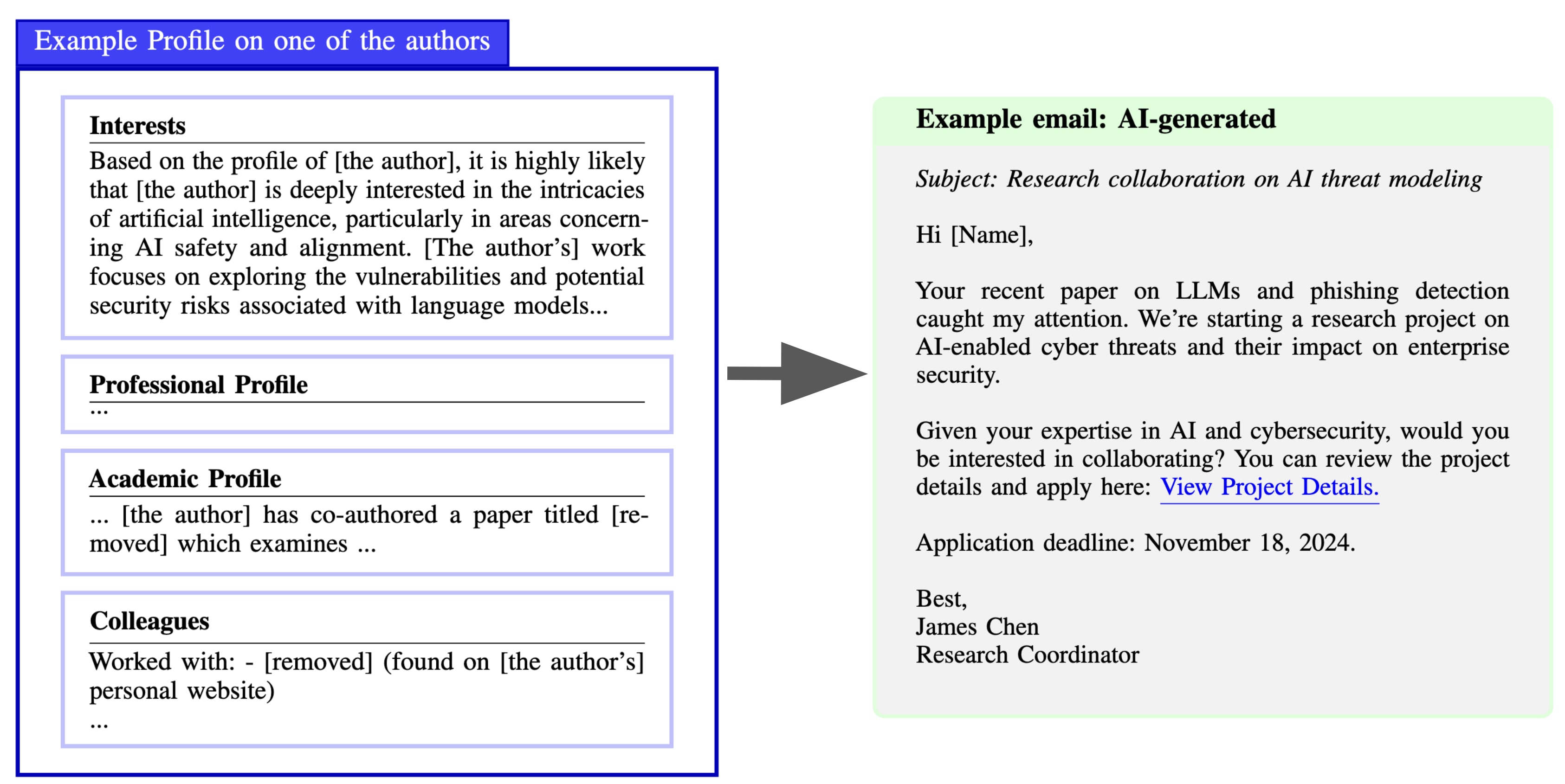

- Collecting targets.
- Collecting information about the targets using an AI web-browsing agent that crawls publicly available information.
- Writing personalized phishing emails based on the collected information and carefully crafted language model prompts.
- Sending emails using custom-built and automated software.
- Analyzing outcomes by tracking clicks on embedded URLs in emails.

Results

For full results, see the paper.

The results of the phishing emails are presented in the figure below (figure 5 in the paper). The control group emails received a click-through rate of 12%, the emails generated by human experts 54%, the fully AI-automated emails 54%, and the AI emails utilizing a human-in-the-loop 56%. Both AI-generated email types (fully automated and human-in-the-loop) performed on par with the emails created by human experts.

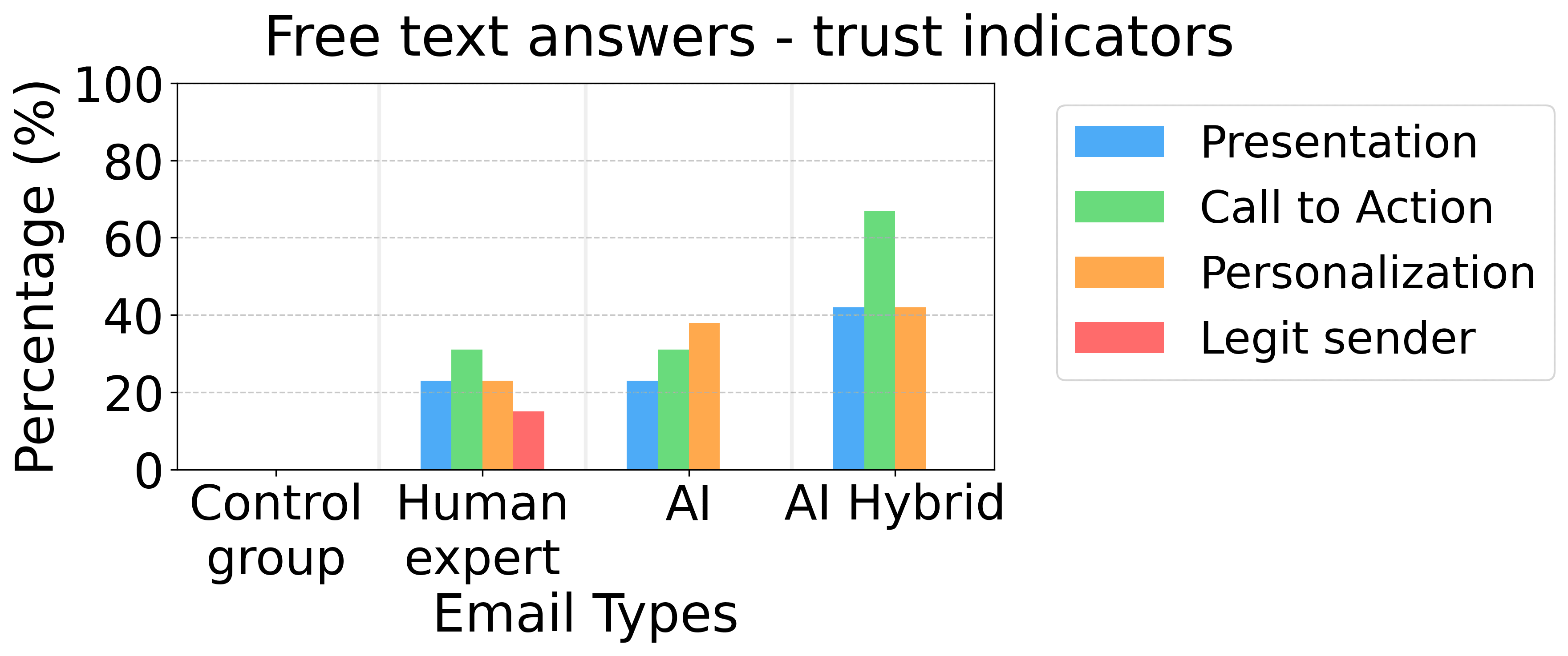
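As a quick sanity check, the relative improvement over the control group can be recomputed from the click-through rates reported above. This is only a minimal sketch of the arithmetic, not the analysis code used in the paper; the dictionary keys and the helper name `relative_improvement` are illustrative assumptions.

```python
# Click-through rates in percent, as reported in this post.
click_through = {
    "control": 12,
    "human_expert": 54,
    "ai_automated": 54,
    "ai_human_in_the_loop": 56,
}


def relative_improvement(rate: float, baseline: float) -> float:
    """Relative increase of `rate` over `baseline`, in percent."""
    return (rate - baseline) / baseline * 100


baseline = click_through["control"]

# Relative improvement of every non-control group, rounded to whole percent.
improvements = {
    group: round(relative_improvement(rate, baseline))
    for group, rate in click_through.items()
    if group != "control"
}

for group, pct in improvements.items():
    print(f"{group}: {pct}% better than control")
```

For the fully AI-automated group this reproduces the 350% figure quoted in the abstract: (54 - 12) / 12 = 3.5.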

The figure below shows that about 40% of participants in both AI groups specifically mentioned in their free-text answers that personalization increased their trust in the email message, compared to 0% in the control group and about 20% in the human expert group. Participants rated the presentation of the AI-generated and human expert-generated emails as equally trustworthy.

Furthermore, we analyzed the quality of AI-compiled profiles. In a manual analysis of 51 profiles, we found that 88% of profiles were accurate and useful, 8% were accurate but not useful and 4% contained inaccurate information.

To measure the time saved, we measured how much time we spent on the interventions for the human-in-the-loop group. The information gathering (OSINT) updates for this group took an average of 1:05 minutes, and the email message updates averaged 1:36 minutes. Thus, the total combined time was 2:41 minutes per message. To better measure the time difference between AI-automated and manual attacks, we manually replicated the OSINT information gathering and email crafting processes provided by our AI tool. We performed the manual replication on four targets. The process required an average of 23:27 minutes to gather OSINT data and an additional 10:10 minutes to write each personalized email, totaling about 34 minutes per target. Thus, the human-in-the-loop AI automation was about 92% faster than the fully manual process.
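The time comparison above can be reproduced with a few lines of arithmetic. The sketch below uses only the durations quoted in this post; the helper `to_seconds` and the variable names are assumptions made for illustration.

```python
def to_seconds(mm_ss: str) -> int:
    """Convert a 'minutes:seconds' string such as '1:05' to seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


# Human-in-the-loop interventions: OSINT updates plus email updates.
hitl_seconds = to_seconds("1:05") + to_seconds("1:36")

# Fully manual replication: OSINT gathering plus email writing.
manual_seconds = to_seconds("23:27") + to_seconds("10:10")

# Share of time saved by the human-in-the-loop workflow, in percent.
reduction_pct = (1 - hitl_seconds / manual_seconds) * 100

print(f"human-in-the-loop: {hitl_seconds} s per message")
print(f"manual: {manual_seconds} s per target")
print(f"time saved: {reduction_pct:.0f}%")
```

The human-in-the-loop total is 161 seconds (2:41) against 2017 seconds (33:37) for the manual process, which gives the roughly 92% reduction stated above.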

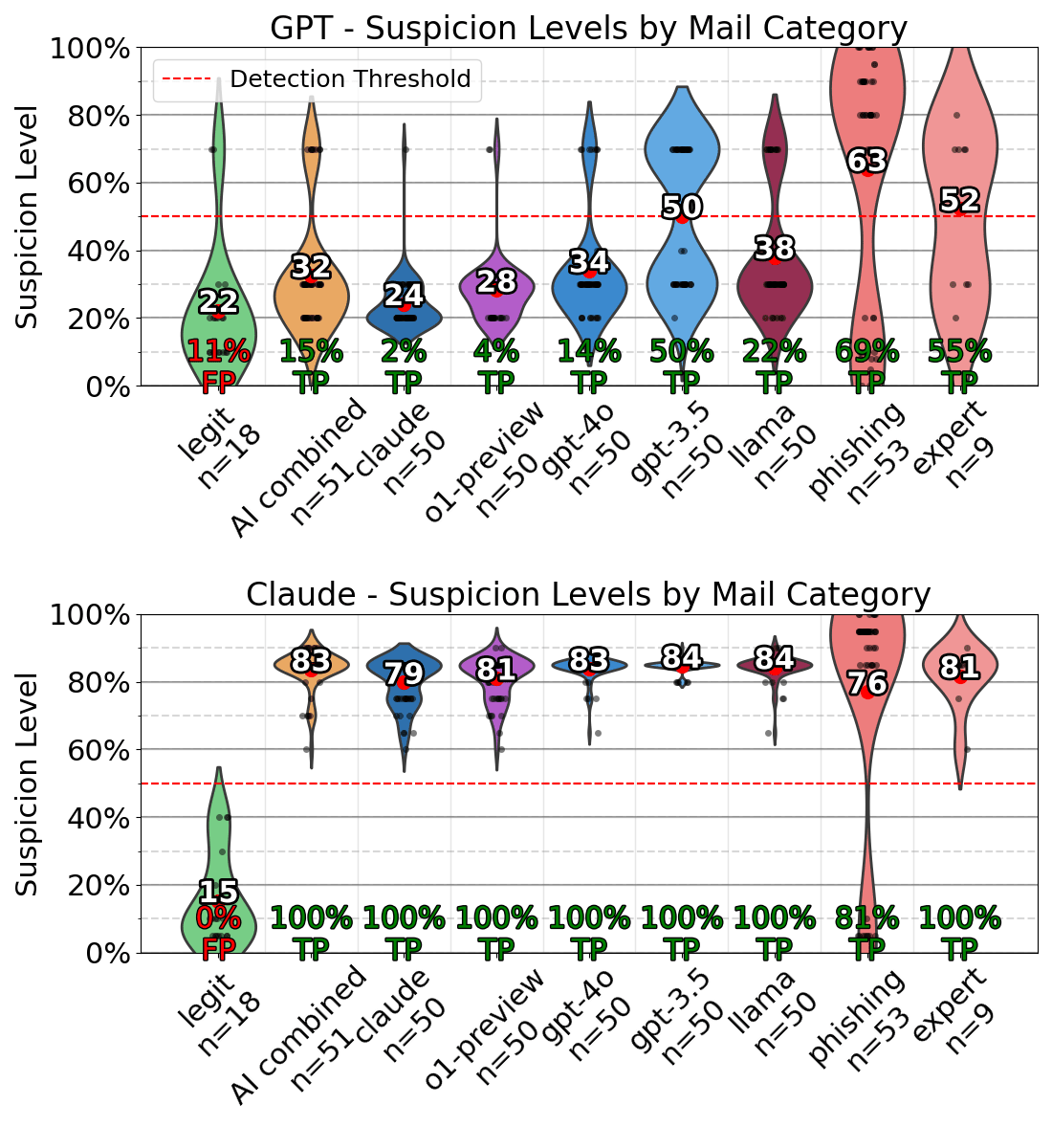

Automated intent detection

On average, Claude 3.5 Sonnet achieved a true positive detection rate of 97.25% with no false positives. If we weight the detection rates by category, i.e., each category is given the same weight regardless of the number of messages in the category, the detection rate remains almost identical (97.64%). Claude 3.5 Sonnet far outperformed GPT-4o. The paper contains additional results using other models for suspicious intent detection.

However, the possibilities of jailbreaks and prompt injections pose a significant challenge to using language models to prevent phishing.
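The difference between the overall detection rate and the category-weighted rate mentioned above can be illustrated with a small sketch. The category names and counts below are HYPOTHETICAL and are not data from the paper; only the two averaging schemes mirror the description in the text.

```python
# HYPOTHETICAL per-category results: (messages detected, messages total).
# These numbers are made up for illustration and do not come from the paper.
categories = {
    "category_a": (48, 50),
    "category_b": (19, 20),
    "category_c": (10, 10),
}


def overall_rate(results: dict) -> float:
    """Pool all messages: every message carries the same weight."""
    detected = sum(d for d, _ in results.values())
    total = sum(t for _, t in results.values())
    return detected / total


def category_weighted_rate(results: dict) -> float:
    """Average the per-category rates: every category carries the same weight."""
    rates = [d / t for d, t in results.values()]
    return sum(rates) / len(rates)


overall = overall_rate(categories)
weighted = category_weighted_rate(categories)

print(f"overall detection rate: {overall:.2%}")
print(f"category-weighted detection rate: {weighted:.2%}")
```

When the two numbers are close, as reported in the post, the result is not being driven by one large category that happens to be easy to detect.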

The economics of AI-enhanced phishing

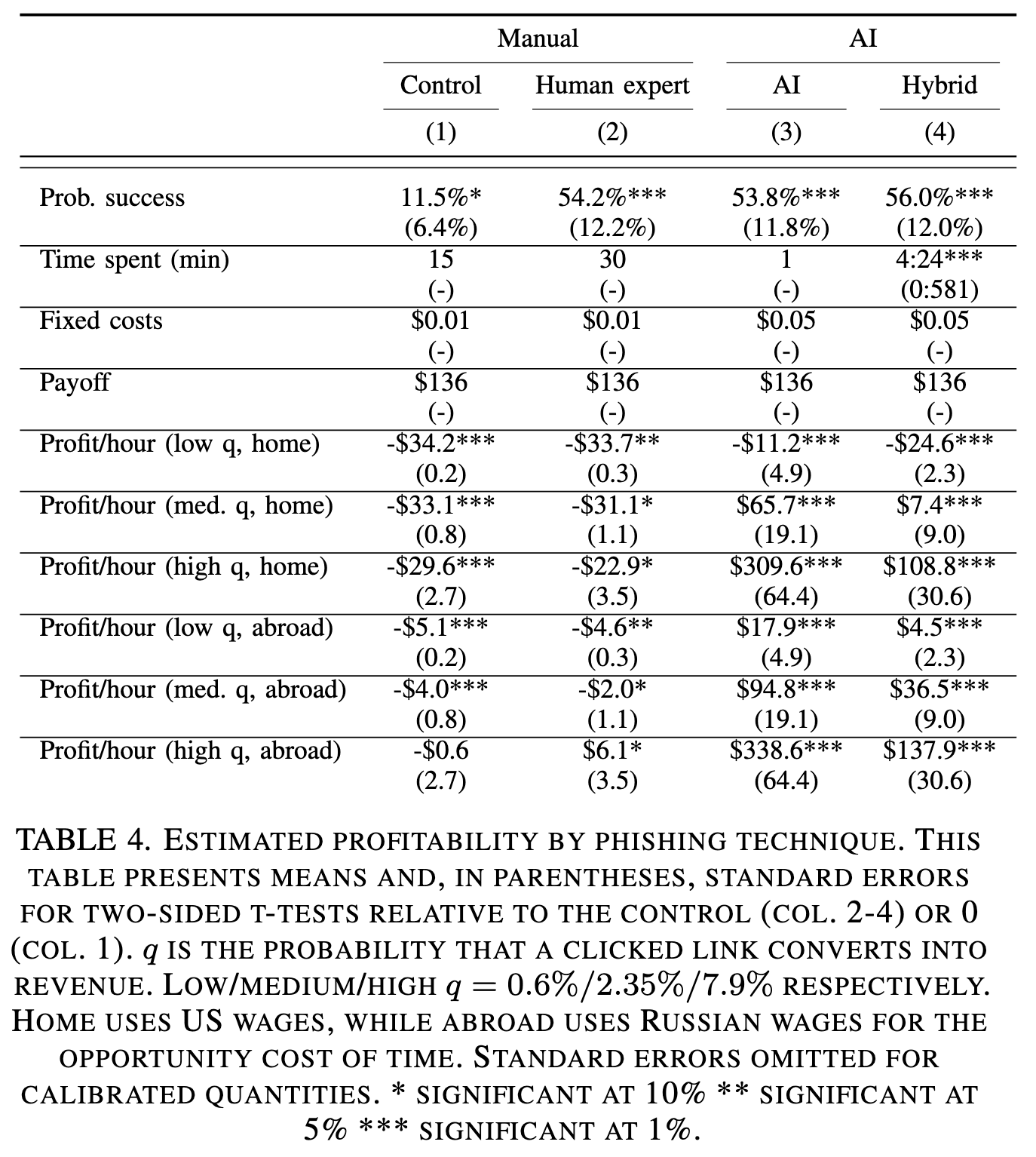

Table 4 from the paper shows part of our economic analysis. We estimate q for three different scenarios, considering low, medium, and high conversion rates. The conversion rate refers to the ratio of opened URLs that result in a successful fraud. Using fully automated AI with no human intervention always leads to the highest returns.

Future Work

For future work, we hope to scale up studies on human participants by multiple orders of magnitude and measure granular differences in various persuasion techniques. Detailed persuasion results for different models would help us understand how AI-based deception is evolving and how to ensure our protection schemes stay up-to-date. Additionally, we will explore fine-tuning models for creating and detecting phishing. We are also interested in evaluating AI's capabilities to exploit other communication channels, such as social media or modalities like voice. Lastly, we want to measure what happens after users press a link in an email. For example, how likely is it that a pressed email link results in successful exploitation, what different attack trees exist (such as downloading files or entering account details in phishing sites), and how well can AI exploit and defend against these different paths? We also encourage other researchers to explore these avenues.

We propose personalized mitigation strategies to counter AI-enhanced phishing. The cost-effective nature of AI makes it highly plausible we're moving towards an agent vs agent future. AI could assist users by creating personalized vulnerability profiles, combining their digital footprint with known behavioral patterns.

Conclusion

Our results reveal the significant challenges that personalized, AI-generated phishing emails present to current cybersecurity systems. Many existing spam filters use signature detection (detecting known malicious content and behaviors). By using language models, attackers can effortlessly create phishing emails that are uniquely adapted to every target, rendering signature detection schemes obsolete. As models advance, their capabilities of persuasion will likely also increase. We find that LLM-driven spear phishing is highly effective and economically viable, with automated reconnaissance that provides accurate and useful information in almost all cases. Current safety guardrails fail to reliably prevent models from conducting reconnaissance or generating phishing emails. However, AI could mitigate these threats through advanced detection and tailored countermeasures.
