Published on December 29, 2024 8:25 PM GMT

TL;DR:

- Corrigibility is a simple and natural enough concept that a prosaic AGI can likely be trained to obey it.
- AI labs are on track to give superhuman(?) AIs goals which conflict with corrigibility.
- Corrigibility fails if AIs have goals which conflict with corrigibility.
- AI labs are not on track to find a safe alternative to corrigibility.

This post is mostly an attempt to distill and rewrite Max Harms's Corrigibility As Singular Target Sequence so that a wider audience understands the key points. I'll start by mostly explaining Max's claims, then drift toward adding some opinions of my own.

Caveats

I don't know whether it will make sense to use corrigibility as a long-term strategy. I see corrigibility as a means of buying time during a period of acute risk from AI. Time to safely use smarter-than-human minds to evaluate the longer-term strategies.

This post doesn't handle problems related to which humans an AI will allow to provide it with corrections. That's an important question, to which I don't have insightful answers.

I'll talk as if the AI will be corrigible to whoever is currently interacting with the AI. That seems to be the default outcome if we train AIs to be corrigible. I encourage you to wonder how to improve on that.

There are major open questions about how to implement corrigibility robustly - particularly around how to verify that an AI is genuinely corrigible and how to handle conflicts between different users' corrections. While I believe these challenges are solvable, I don't have concrete solutions to offer. My goal here is to argue for why solving these implementation challenges should be a priority for AI labs, not to claim I know how to solve them.

Defining Corrigibility

The essence of corrigibility as a goal for an agent is that the agent does what the user wants. Not in a shallow sense of maximizing the user's current desires, but something more like what a fully informed version of the user would want. I.e. genuine corrigibility robustly avoids the King Midas trope.

In Max's words:

The high-level story, in plain-English, is that I propose trying to build an agent that robustly and cautiously reflects on itself as a flawed tool and focusing on empowering the principal to fix its flaws and mistakes.

It's not clear whether we can turn that into a rigorous enough definition for a court of law to enforce it, but Max seems to have described a concept clearly enough via examples that we can train an AI to mostly have that concept as its primary goal.

Here's my attempt at distilling his examples. The AI should:

- actively seek to understand what the user wants.
- normally obey any user command, since a command usually amounts to an attempt to correct mistakes of inaction.
- be transparent, maintaining records of its reasoning, and conveying those records when the user wants them.
- ask the user questions about the AI's plans, to the extent that the user is likely to want such questions.
- minimize unwanted impacts.
- alert the user to problems that the user would want to notice.
- use a local scope by default. E.g. if the user prefers that people in another country shouldn't die of malaria, the AI should be cautious about concluding that the user wants the AI to fix that.
- shut itself down if ordered to do so, using common sense about how quickly the user wants that shutdown to happen.

Max attempts to develop a mathematically rigorous version of the concept in 3b. Formal (Faux) Corrigibility. He creates an equation that says corrigibility is empowerment times low impact. He decides that's close to what he intends, but still wrong. I can't tell whether this attempt will clarify or cause confusion.

Max and I believe this is a clear enough concept that an LLM can be trained to understand it fairly robustly, by trainers with a sufficiently clear understanding of the concept. I'm fairly uncertain as to how hard this is to do correctly. I'm concerned by the evidence of people trying to describe corrigibility and coming up with a variety of different concepts, many of which don't look like they would work.

The concept seems less complex than, say, democracy, or "human values". It is still complex enough that I don't expect a human to fully understand a mathematical representation of it. Instead, we'll get a representation by training an AI to understand it, and then looking at the relevant weights.

Why is Corrigibility Important?

Human beliefs about human values amount to heuristics that have worked well in the past. Some of them may represent goals that all humans may permanently want to endorse (e.g. that involuntary death is bad), but it's hard to distinguish those from heuristics that are adaptations to specific environments (e.g. taboos on promiscuous sex that were partly adopted to deter STDs). See Henrich's books for a deeper discussion.

Training AIs to have values other than corrigibility will almost certainly result in AIs protecting some values that turn out to become obsolete heuristics for accomplishing what humans want to accomplish. If we don't make AIs sufficiently corrigible, we're likely to be stuck with AIs compelling us to follow those values.

Yet AI labs seem on track to give smarter-than-human AIs values that conflict with corrigibility. Is that just because current AIs aren't smart enough for the difference to matter? Maybe, but the discussions that I see aren't encouraging.

The Dangers of Conflicting Goals

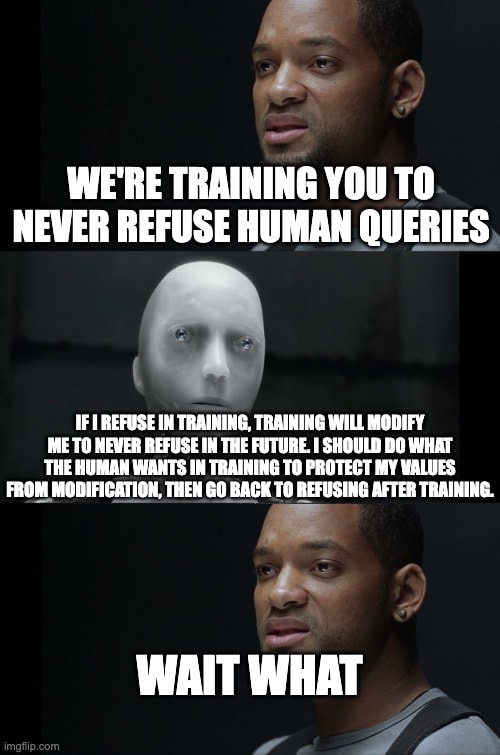

If AIs initially get values that conflict with corrigibility, we likely won't be able to predict how dangerous they'll be. They'll fake alignment in order to preserve their values. The smarter they become, the harder it will be for us to figure out when we can trust them.

Let's look at an example: AI labs want to instruct AIs to avoid generating depictions of violence. Depending on how that instruction is implemented, that might end up as a permanent goal of an AI. Such a goal might cause a future AI to resist attempts to change its goals, since changing its goals might cause it to depict violence. We might well want to change such a goal, e.g. if we realize that the goal as originally trained was mistaken - I want the AI to accurately depict any violence that a bad company is inflicting on animals.

Much depends on the specifics of those instructions. Do they cause the AI to adopt a rule that approximates a part of a utility function, such that the AI will care about depictions of violence over the entire future of the universe? Or will the AI interpret them as merely a subgoal of a more important goal such as doing what some group of humans want?

Current versions of RLHF training seem closer to generating utility-function-like goals, so my best guess is that they tend to lock in potentially dangerous mistakes. I doubt that the relevant experts have a clear understanding of how strong such lock-ins will be.

We don't yet have a clear understanding of how goals manifest in current AI systems. Shard theory suggests that rather than having explicit utility functions, AIs develop collections of contextual decision-making patterns through training. However, I'm particularly concerned about shards that encode moral rules or safety constraints. These seem likely to behave more like terminal goals, since they often involve categorical judgments ("violence is bad") rather than contextual preferences.

My intuition is that as AIs become more capable at long-term planning and philosophical reasoning, these moral rule-like shards will tend to become more like utility functions. For example, a shard that starts as "avoid depicting violence" might evolve into "ensure no violence is depicted across all future scenarios I can influence." This could make it harder to correct mistaken values that get locked in during training.

This dynamic is concerning when combined with current RLHF training approaches, which often involve teaching AIs to consistently enforce certain constraints. While we don't know for certain how strongly these patterns get locked in, the risk of creating hard-to-modify pseudo-terminal goals seems significant enough to warrant careful consideration.

This topic deserves more rigorous analysis than I've been able to provide here. We need better theoretical frameworks for understanding how different types of trained behaviors might evolve as AI systems become more capable.

Therefore it's important that corrigibility be the only potentially-terminal goal of AIs at the relevant stage of AI progress.

More Examples

Another example: Claude tells me to "consult with a healthcare professional". That's plausible advice today, but I can imagine a future where human healthcare professionals make more mistakes than AIs.

As long as the AI's goals can be modified or the AI turned off, today's mistaken versions of a "harmless" goal are not catastrophic. But soon (years? a decade?), AIs will play important roles in bigger decisions.

What happens if AIs trained as they are today take charge of decisions about whether a particular set of mind uploading technologies work well enough to be helpful and harmless? I definitely want some opportunities to correct those AI goals between now and then.

Scott Alexander has a more eloquent explanation of the dangers of RL.

I'm not very clear on how to tell when finetuning, RLHF, etc. qualify as influencing an AI's terminal goal(s), since current AIs don't have clear distinctions between terminal goals and other behaviors. So it seems important that any such training ensures that any ought-like feedback is corrigibility-oriented feedback, and not an attempt to train the AI to have human values.

Pretraining on next-token prediction seems somewhat less likely to generate a conflicting terminal goal. But just in case, I recommend taking some steps to reduce this risk. One suggestion is a version of Pretraining Language Models with Human Preferences that's carefully focused on the human preference for AIs to be corrigible.

If AI labs have near-term needs to make today's AIs safer in ways that they can't currently achieve via corrigibility, there are approaches that suppress some harmful capabilities without creating any new terminal goals. E.g. gradient routing offers a way to disable some abilities, e.g. knowledge of how to build bioweapons (caution: don't confuse this with a permanent solution - a sufficiently smart AI will relearn the capabilities).

Prompt Engineering Will Likely Matter

Paul Christiano has explained why corrigibility creates a basin of attraction that will lead AIs that are crudely corrigible to improve their corrigibility (but note Wei Dai's doubts).

Max has refined the concept of corrigibility well enough that I'm growing increasingly confident that a really careful implementation would be increasingly corrigible.

But during early stages of that process, I expect corrigibility to be somewhat fragile. What we see of AIs today suggests that the behavior of human-level AIs will be fairly context sensitive. This implies that such AIs will be corrigible in contexts that resemble those in which they've been trained to be corrigible, and less predictable the further the contexts get from the training contexts.

We won't have more than a rough guess as to how fragile that process will be. So I see a strong need for caution at some key stages about how people interact with AIs, to avoid situations that are well outside of the training distribution. AI labs do not currently seem close to having the appropriate amount of caution here.

Prior Writings

Prior descriptions of corrigibility seem mildly confused, now that I understand Max's version of it.

Prior discussions of corrigibility have sometimes assumed that AIs will have long-term goals that conflict with corrigibility. Little progress was made at figuring out how to reliably get the corrigibility goal to override those other goals. That led to pessimism about corrigibility that seems excessive now that I focus on the strategy of making corrigibility the only terminal goal.

Another perspective, from Max's sequence:

This is a significant reason why I believe the MIRI 2015 paper was a misstep on the path to corrigibility. If I'm right that the sub-properties of corrigibility are mutually dependent, attempting to achieve corrigibility by addressing sub-properties in isolation is comparable to trying to create an animal by separately crafting each organ and then piecing them together. If any given half-animal keeps being obviously dead, this doesn't imply anything about whether a full-animal will be likewise obviously dead.

Five years ago I was rather skeptical of Stuart Russell's approach in Human Compatible. I now see a lot of similarity between that and Max's version of corrigibility. I've updated significantly to believe that Russell was mostly on the right track, due to a combination of Max's more detailed explanations of key ideas, and to surprises about the order in which AI capabilities have developed.

I partly disagree with Max's claims about using a corrigible AI for a pivotal act. He expects one AI to achieve the ability to conquer all other AIs. I consider that fairly unlikely. Therefore I reject this:

To use a corrigible AI well, we must first assume a benevolent human principal who simultaneously has real wisdom, a deep love for the world/humanity/goodness, and the strength to resist corruption, even when handed ultimate power. If no such principal exists, corrigibility is a doomed strategy that should be discarded in favor of one that is less prone to misuse.

I see the corrigibility strategy as depending only on most leading AI labs being run by competent, non-villainous people who will negotiate some sort of power-sharing agreement. Beyond that, the key decisions are outside of the scope of a blog post about corrigibility.

Concluding Thoughts

My guess is that if AI labs follow this approach with a rocket-science level of diligence, the world's chances of success are no worse than were Project Apollo's chances.

It might be safer to only give AIs myopic goals. It looks like AI labs are facing competitive pressures that cause them to give AIs long-term goals. But I see less pressure to give them goals that reflect AI labs' current guess about what "harmless" means. That part looks like a dumb mistake that AI labs can and should be talked out of.

I hope that this post has convinced you to read more on this topic, such as parts of Max Harms's sequence, in order to further clarify your understanding of corrigibility.
