Published on December 23, 2024 9:38 AM GMT

I'm seeing a lot of people on LW saying that they have very short timelines (say, five years or less) until AGI. However, the arguments that I've seen often seem to be just one of the following:

- "I'm not going to explain but I've thought about this a lot"
- "People at companies like OpenAI, Anthropic etc. seem to believe this"
- "Feels intuitive based on the progress we've made so far"

At the same time, it seems like this is not the majority view among ML researchers. The most recent representative expert survey that I'm aware of is the 2023 Expert Survey on Progress in AI. It surveyed 2,778 AI researchers who had published peer-reviewed research in the prior year in six top AI venues (NeurIPS, ICML, ICLR, AAAI, IJCAI, JMLR); the median forecast for a 50% chance of AGI was either 23 or 92 years away, depending on how the question was phrased.

While it has been a year since the survey was conducted in fall 2023, my anecdotal impression is that many researchers outside the rationalist sphere still have significantly longer timelines, or do not believe that current methods will scale to AGI.

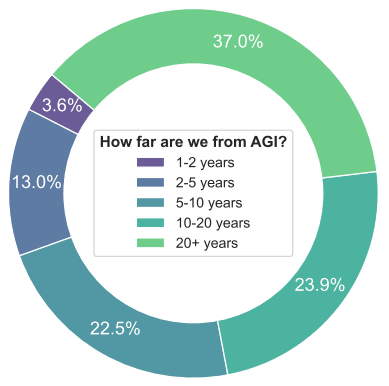

A more recent, though less broadly representative, survey is reported in Feng et al. 2024. At the ICLR 2024 "How Far Are We From AGI" workshop, 138 researchers were polled on their views. "5 years or less" was again a clear minority position, held by only 16.6% of respondents. On the other hand, "20+ years" was the view held by 37% of respondents.

Most recently, there were a number of "oh AGI does really seem close" comments with the release of o3. I mostly haven't seen these give very much of an actual model for their view either; they seem to mostly be of the "feels intuitive" type. There have been some posts discussing the extent to which we can continue to harness compute and data for training bigger models, but that says little about the ultimate limits of the current models.

The one argument I did see that felt somewhat convincing was in the "data wall" and "unhobbling" sections of the "From GPT-4 to AGI" chapter of Leopold Aschenbrenner's "Situational Awareness", which outlined ways in which we could build on top of the current paradigm. However, this too was limited to just "here are more things that we could do".

So, what are the strongest arguments for AGI being very close? I would be particularly interested in any discussions that explicitly look at the limitations of the current models and discuss how exactly people expect those to be overcome.
