Published on December 23, 2024 8:51 AM GMT

Project summary

AI Safety Camp has a seven-year track record of enabling participants to try their fit, find careers and start new orgs in AI Safety. We host up-and-coming researchers outside the Bay Area and London hubs.

If this fundraiser passes…

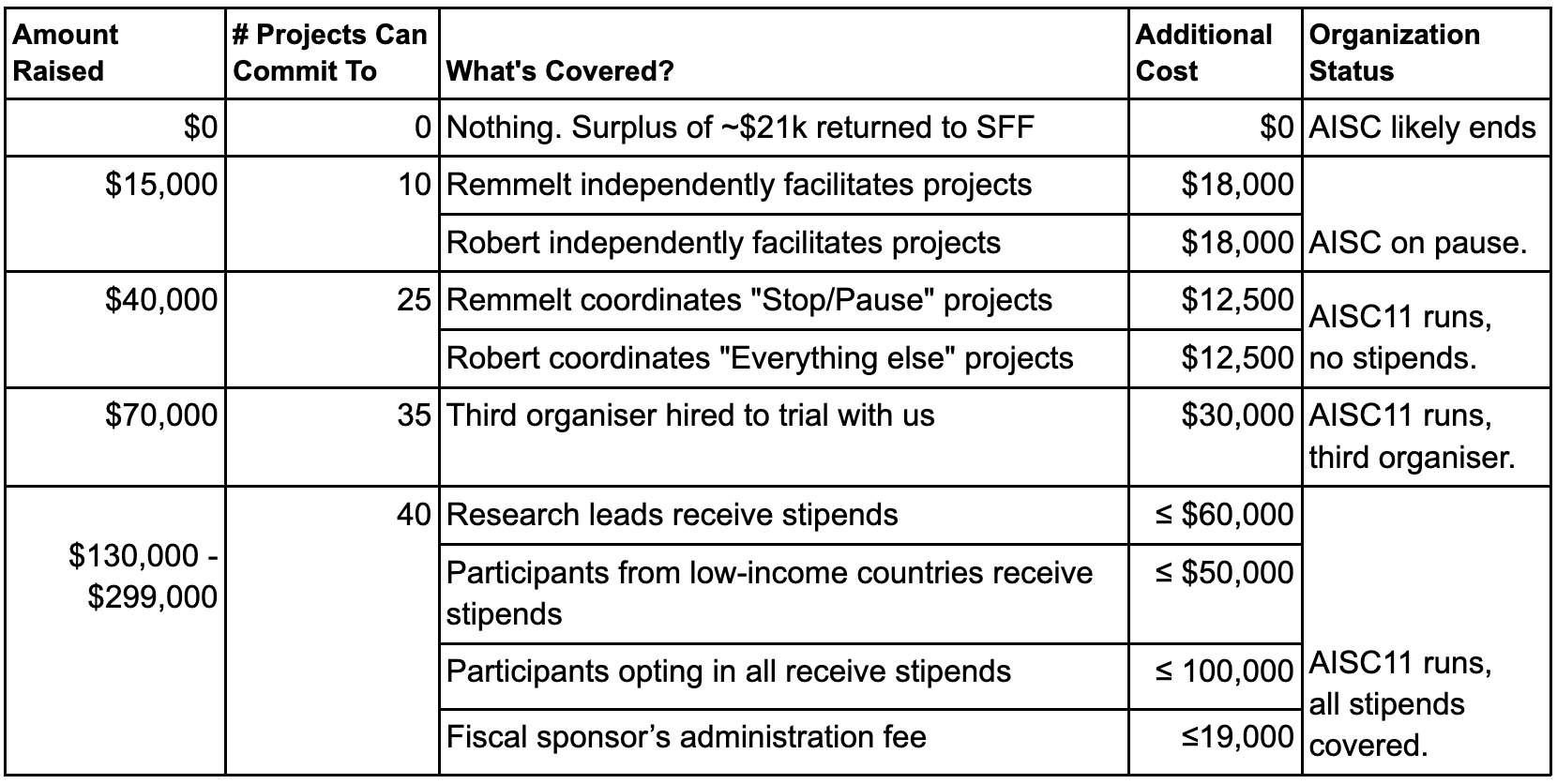

- $15k, we won’t run a full program, but can facilitate 10 projects.
- $40k, we can organise the 11th edition, for 25 projects.
- $70k, we can pay a third organiser, for 35 projects.
- $300k, we can cover stipends for 40 projects.

What are this project's goals? How will you achieve them?

By all accounts they are the gold standard for this type of thing. Everyone says they are great, I am generally a fan of the format, I buy that this can punch way above its weight or cost. If I was going to back [a talent funnel], I’d start here.

— Zvi Mowshowitz

AI Safety Camp is part incubator and part talent funnel:

- an incubator in that we help experienced researchers form new collaborations that can last beyond a single edition. Alumni went on to found 10 organisations.
- a talent funnel in that we help talented newcomers learn by doing, by working on a concrete project in the field. Alumni have gone on to take 43 jobs in AI Safety.

The Incubator case is that AISC seeds epistemically diverse initiatives. Edition 10 supports new alignment directions, control limits research, neglected legal regulations, and 'slow down AI' advocacy. Funders who are uncertain about approaches to alignment – or believe we cannot align AGI on time – may prioritise funding this program.

The Maintaining Talent Funnels case is to fund AISC simply to keep the program going. AISC is no longer the only program training newcomers to the field. There are now many programs, and our community’s bottlenecks have shifted to salary funding and org management. Still, new talent will be needed, and for them we can run a cost-efficient program. Sustaining this program retains optionality: institutions are waking up to AI risks and could greatly increase their funding and positions. If AISC still exists, it can help funnel people with a security mindset into those positions. But if by then the organisers have left for new jobs, others would have to rebuild AISC from scratch. Restarting the program would cost more than keeping it running.

As a funder, you may decide that AISC is worth saving as a cost-efficient talent funnel. Or you may decide that AISC uniquely supports unconventional approaches, and that something unexpectedly valuable may come out of it.

Our program is both cost-efficient and scalable.

- For edition 10, we received 405 applications (up 65%) for 32 projects (up 19%).
- For edition 11, we could scale to 40 projects, projected from recent increases in demand on the technical safety side and the stop/pause AI side.

How will this funding be used?

Grant funding is tight. Without private donors, we cannot continue this program.

$15k: we won’t run a full program, but can facilitate 10 projects and preserve organising capabilities.

If we raise $15k, we won't run a full official edition.

We can still commit to facilitating projects. Robert and Remmelt already support projects in their respective fields of work. Robert has collaborated with other independent alignment researchers and has informally mentored junior researchers doing conceptual and technical research on interpretable AI. Remmelt is kickstarting projects to slow down AI (e.g. formalization work, MILD, Stop AI, inter-community calls, and a film by an award-winning director).

We might each just support projects independently. Or we could (also) run an informal event where we invite only alumni to collaborate on projects together.

We can commit to this if we do not have to move to new jobs in 2025. We can then resume full editions once grantmakers make more funds available. With a basic income of $18k each, we can commit to starting, mentoring, and/or coordinating 10 projects.

$40k: we can organise the 11th edition, for 25 projects.

Combined with surplus funds from past camps (conservatively estimated at $21k), this covers salaries of $30.5k each for Robert and Remmelt.

That is enough for us to organise the 11th edition. However, without a third organiser, we would only commit to hosting 25 projects.

$70k: we can pay a third organiser, for 35 projects.

With this funding, we are confident that we can onboard a new organiser on a trial basis. They would assist Robert with evaluating technical safety proposals and help with event ops. This gives us the capacity to host 35 projects.

$300k: we can cover stipends for 40 projects.

Stipends act as a commitment device, and enable young researchers to focus on research without having to take on side-gigs. We only offer stipends to participants who indicate it would help their work. Our stipends are $1.5k per research lead and $1k per team member, plus admin fees of 9%.

We would pay out stipends in the following order:

- To research leads (for AISC10, this is ≤$36k).
- To team members in low-income countries (for AISC10, this is ≤$28k).
- To remaining team members (for AISC10, this would have been ≤$78k, if we had the funds).

The $230k extra safely covers stipends for edition 11. This amount may seem high, but it cost-efficiently supports 150+ people's work over three months. This in turn reduces the load on us organisers, allowing us to host 40 projects.
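To make the stipend arithmetic concrete, here is a rough Python sketch of what stipends for a full edition might cost. The rates ($1.5k per research lead, $1k per team member, 9% admin fees) come from above. The headcount of 40 research leads and 110 team members is a hypothetical assumption chosen to roughly match "150+ people", and the sketch assumes every participant takes a stipend, even though in practice we only offer stipends to those who say it would help. The function name `stipend_budget` is illustrative only.

```python
# Rough stipend budget for a hypothetical edition 11.
# Rates are taken from the post; the headcounts below are ASSUMPTIONS.

LEAD_STIPEND = 1_500      # USD per research lead
MEMBER_STIPEND = 1_000    # USD per team member
ADMIN_FEE_PERCENT = 9     # admin fees added on top of the stipends


def stipend_budget(num_leads: int, num_members: int) -> int:
    """Return the total stipend cost in USD, including admin fees.

    Uses integer arithmetic (rounding down to the dollar) to avoid
    floating-point noise.
    """
    base = num_leads * LEAD_STIPEND + num_members * MEMBER_STIPEND
    return base * (100 + ADMIN_FEE_PERCENT) // 100


# Hypothetical headcount: 40 projects with one lead each,
# plus 110 team members (150 people in total), all taking stipends.
total = stipend_budget(num_leads=40, num_members=110)
print(total)             # 185300
print(total <= 230_000)  # True
```

Under these assumptions the total comes to about $185k, which leaves headroom within the $230k.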

Who is on your team?

Remmelt is coordinator of 'Stop/Pause AI' projects:

- Remmelt leads a project with Anders Sandberg to formalize AGI uncontrollability, which received $305k in grants.
- Remmelt also wrote about the control problem, presented here.
- Remmelt works in diverse communities to end harmful scaling, from Stop AI to creatives to environmentalists.

Robert is coordinator of 'Conceptual and Technical AI Safety Research' projects:

- Robert is an independent AI Alignment researcher previously funded by the Long-Term Future Fund, having done work on Simulator Theory of LLMs, Agent Foundations, and more interpretable cognitive architectures.
- Robert does non-public mentoring of aspiring and junior AI Safety researchers.

Linda will take a break from organising, staying on as an advisor. We can hire a third organiser to take up her tasks.

What's your track record?

AI Safety Camp is primarily a learning-by-doing training program. People get to try a role and explore directions in AI safety by collaborating on a concrete project.

Multiple alumni have told us that AI Safety Camp was how they got started in AI Safety.

Papers that came out of the camp include:

- Goal Misgeneralization
- AI Governance and the Policymaking Process
- Detecting Spiky Corruption in Markov Decision Processes
- RL in Newcomblike Environments
- Using soft maximin for risk averse multi-objective decision-making
- Reflection Mechanisms as an Alignment Target
- Representation noising effectively prevents harmful fine-tuning

Projects started at AI Safety Camp went on to receive a total of $952k in grants:

| Camp project | Grants |
|---|---|
| AISC 1: Bounded Rationality team | $30k from Paul |
| AISC 3: Modelling Cooperation | $24k from CLT, $50k from SFF, $83k from SFF, $83k from SFF |
| AISC 4: Survey | $5k from LTFF |
| AISC 5: Pessimistic Agents | $3k from LTFF |
| AISC 5: Multi-Objective Alignment | $20k from EV |
| AISC 6: LMs as Tools for Alignment | $10k from LTFF |
| AISC 6: Modularity | $125k from LTFF |
| AISC 7: AGI Inherent Non-Safety | $170k from SFF, $135k from SFF |
| AISC 8: Policy Proposals for High-Risk AI | $10k from NL, $184k from SFF |
| AISC 9: Data Disclosure | $10k from SFFsg |
| AISC 9: VAISU | $10k from LTFF |
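As a quick check on the $952k figure, the Python snippet below tallies the grant amounts listed above, in thousands of USD. The dictionary simply transcribes the listed entries; no other figures are assumed.

```python
# Grant amounts per camp project, in thousands of USD, as listed above.
GRANTS_K = {
    "AISC 1: Bounded Rationality team": [30],
    "AISC 3: Modelling Cooperation": [24, 50, 83, 83],
    "AISC 4: Survey": [5],
    "AISC 5: Pessimistic Agents": [3],
    "AISC 5: Multi-Objective Alignment": [20],
    "AISC 6: LMs as Tools for Alignment": [10],
    "AISC 6: Modularity": [125],
    "AISC 7: AGI Inherent Non-Safety": [170, 135],
    "AISC 8: Policy Proposals for High-Risk AI": [10, 184],
    "AISC 9: Data Disclosure": [10],
    "AISC 9: VAISU": [10],
}

# Sum every grant across all projects.
total_k = sum(sum(amounts) for amounts in GRANTS_K.values())
print(f"${total_k}k")  # $952k
```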

Organizations launched out of camp conversations include:

- Arb Research, AI Safety Support, AI Standards Lab.

Alumni went on to take positions at:

FHI (1 job+4 scholars+2 interns), GovAI (2 jobs), Cooperative AI (1 job), Center on Long-Term Risk (1 job), Future Society (1 job), FLI (1 job), MIRI (1 intern), CHAI (2 interns), DeepMind (1 job+2 interns), OpenAI (1 job), Anthropic (1 contract), Redwood (2 jobs), Conjecture (3 jobs), EleutherAI (1 job), Apart (1 job), Aligned AI (1 job), Timaeus (2 jobs), MATS (1 job), ENAIS (1 job), Pause AI (2 jobs), Stop AI (1 founder), Leap Labs (1 founder, 1 job), Apollo (2 founders, 4 jobs), Arb (2 founders), AISS (2 founders), AISAF (2 founders), AISL (2+ founders, 1 job), ACS (2 founders), ERO (1 founder), BlueDot (1 founder).

These are just the positions we know about. Many more alumni are engaged in AI Safety in other ways, e.g. as PhD students or independent researchers.

We consider positions at OpenAI to be net negative and are seriously concerned about positions at other AGI labs.

For statistics of previous editions, see here.

What are the most likely causes and outcomes if this project fails?

Not receiving minimum funding:

- Given how tight grant funding currently is, we don’t expect to be able to run an AISC edition unless most of the funding is raised on Manifund.

Projects are low priority:

- We enable researchers to pursue their interests and get ‘less wrong’. We are open to diverse projects as long as the theory of change makes sense under plausible assumptions. We may accept proposals that we don’t yet think are a priority, if research leads use feedback to refine their proposals and put the time into guiding teammates to do interesting work.

Projects support capability work:

- We decline such projects. Robert and Remmelt are aware and wary of infohazards.

How much money have you raised in the last 12 months, and from where?

- $65.5k on Manifund to run our current 10th edition.
- $7.5k from other private donors.
- $30k from Survival and Flourishing Fund (SFF) speculation grantors, but no main grant. The feedback we got was (1) “I’m a big believer in this project and am keen for you to get a lot of support” and (2) a general explanation that SFF was swamped by ~100 projects and that funding got tighter after OpenPhil stopped funding the rationalist community.
