Published on December 21, 2024 12:15 AM GMT

How do you make sense of a 300-page book in 15 minutes? Or get insights from thousands of news articles without relying on recommendation algorithms? Our usual approach is to filter: we use search engines, summaries, or recommendations to select a manageable subset of content. But what if we could keep all the content and instead adjust how deeply we engage with each piece?

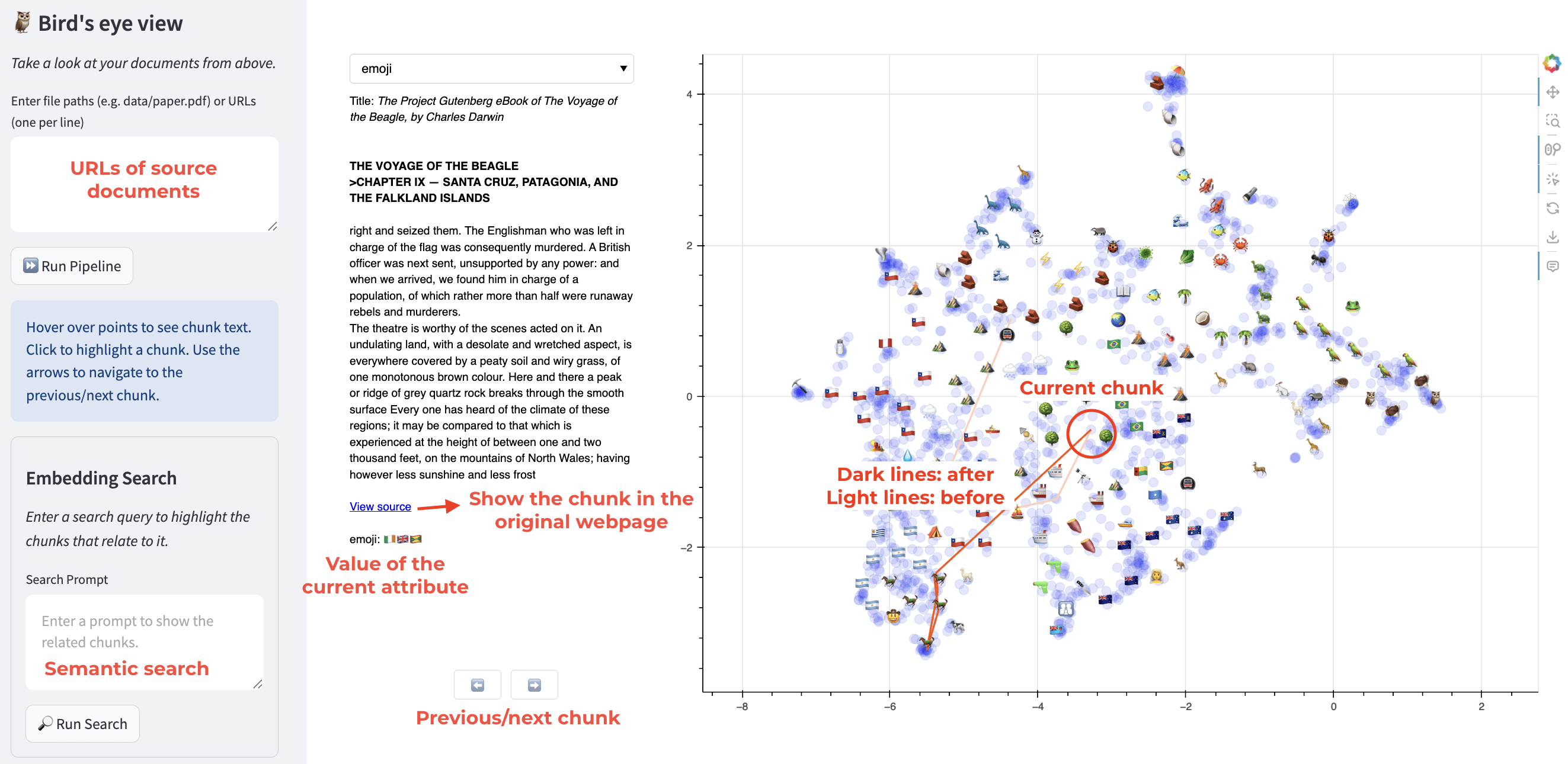

I created bird’s eye view, an interactive visualization that lets you explore large collections of text from above, like looking at a landscape from an airplane. Instead of reading sequentially or filtering content, you get a complete view of the document’s scope while retaining the ability to zoom into specific areas. This perspective allows you to gradually build understanding at your own pace, moving between high-level semantic clusters and in-depth reading of excerpts.

The tool has already proven valuable in my own work for:

- Getting quick insights from large documents or collections
- Exploring AI model outputs at scale for brainstorming
- Qualitatively evaluating LLM benchmarks

This post will first briefly explain how bird’s eye view works, then demonstrate its applications through concrete examples - from exploring Darwin’s travel diary to visualizing the MMLU benchmark. We’ll then show you how to use the tool yourself, and finally describe how it fits into a broader reflection on the future of AI development.

I encourage you to interact with the visualizations throughout this post - they are the main feature, and exploring them directly will give you a better understanding of the tool than any written description.

The code is open-source, and you can create your own semantic maps using this web application.

How Bird’s Eye View Works

Bird’s eye view transforms documents into interactive maps through the following steps:

- Chunking: Long documents are split into smaller pieces (around 600 characters each)
- Embedding: Each chunk is converted into a semantic vector using the OpenAI embedding API
- Dimension Reduction: UMAP reduces these high-dimensional embeddings to 2D coordinates
- Emoji Assignment: Each point is assigned representative emojis based on the dot product between the chunk embedding and the emoji embeddings
- Visualization: The result is rendered as an interactive plot where:
  - Points represent chunks of text
  - Position reflects semantic similarity (the axis units are arbitrary)
  - Emojis provide quick visual context
  - Lines show document flow
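The chunking and emoji assignment steps can be illustrated with a minimal sketch. This is not the tool's actual code: the function names `chunk_text` and `assign_emojis`, the whitespace-based splitting, and the toy 2D vectors are all assumptions made for illustration. In the real pipeline the vectors would come from an embedding API rather than being written by hand.

```python
from typing import Dict, List


def chunk_text(text: str, target_size: int = 600) -> List[str]:
    """Split text into chunks of at most ~target_size characters, breaking on whitespace.

    Hypothetical helper: a single word longer than target_size becomes its own chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    length = 0
    for word in text.split():
        # Start a new chunk once adding this word would exceed the target size.
        if current and length + 1 + len(word) > target_size:
            chunks.append(" ".join(current))
            current, length = [], 0
        length += len(word) + (1 if current else 0)
        current.append(word)
    if current:
        chunks.append(" ".join(current))
    return chunks


def dot(a: List[float], b: List[float]) -> float:
    """Plain dot product between two vectors of equal length."""
    return sum(x * y for x, y in zip(a, b))


def assign_emojis(
    chunk_vec: List[float], emoji_vecs: Dict[str, List[float]], top_k: int = 2
) -> List[str]:
    """Return the top_k emojis whose embeddings have the largest dot product with the chunk."""
    ranked = sorted(emoji_vecs, key=lambda e: dot(chunk_vec, emoji_vecs[e]), reverse=True)
    return ranked[:top_k]


# Toy 2D "embeddings" (made up for the example, not real model outputs).
emoji_vecs = {"🐦": [1.0, 0.0], "🌋": [0.0, 1.0], "🌊": [0.7, 0.7]}
chunk_vec = [0.9, 0.1]
top_emojis = assign_emojis(chunk_vec, emoji_vecs)
```

With these toy vectors, the chunk scores highest against the bird emoji, then the wave, so those two would be attached to the point.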

See the linked blog post for a series of interactive examples!

How to Use Bird’s Eye View

Web Interface

The simplest way to start is through the web interface powered by Streamlit. You’ll see a simple input field where you can enter URLs of documents you want to visualize. The tool supports:

- HTML format (preferred, preserves rich text)
- PDF documents (text extraction only)
- Plain text files
- JSON files with specific formatting (see the code example below)

In the online app, only web URLs are supported. If you run the app locally, you can also use paths to local files.

Processing takes around 30 seconds for a 400-page book. For a quick start, you can explore pre-computed examples from the home menu.

Interacting with the Map

Once your visualization is generated, you’ll see a scatter plot where each point represents a chunk of text. The visualization offers several ways to explore:

Basic Navigation

The light orange lines connect to chunks that come before your selected point in the original text, while dark orange lines point to what comes after. Click any point to display its content in the side panel, then use the arrows below the text to move sequentially through the document.

Emoji View

The default view shows emojis representing the content of each region. As you zoom in and out, the visualization adjusts to show the most representative emojis for each area, preventing visual clutter while maintaining context.
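One way to picture zoom-dependent emoji selection is to bin points into grid cells and keep a single emoji per cell, with smaller cells as you zoom in. The sketch below is an assumption about how such behavior could be implemented, not a description of the tool's internals; the function name `emojis_per_cell` and the "most frequent emoji wins" rule are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, str]  # (x, y, emoji) in 2D map coordinates


def emojis_per_cell(points: List[Point], cell_size: float) -> Dict[Tuple[int, int], str]:
    """Pick the most frequent emoji in each square grid cell of side cell_size."""
    cells: Dict[Tuple[int, int], Counter] = defaultdict(Counter)
    for x, y, emoji in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        cells[cell][emoji] += 1
    return {cell: counts.most_common(1)[0][0] for cell, counts in cells.items()}


points = [
    (0.1, 0.1, "🐦"),
    (0.2, 0.3, "🐦"),
    (0.4, 0.4, "🌊"),
    (1.5, 1.5, "🌋"),
]

zoomed_out = emojis_per_cell(points, cell_size=1.0)   # coarse grid, fewer emojis shown
zoomed_in = emojis_per_cell(points, cell_size=0.25)   # fine grid, more emojis shown
```

At the coarse level the three nearby points collapse into one bird emoji, while the fine grid keeps each point's own emoji, which is the clutter-versus-detail trade-off described above.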

Semantic Search

The embedding search feature lets you highlight related content across the map. Enter a prompt in the search box, and points will be colored based on their semantic similarity to your query. The coloring uses a sharpened version of the raw similarity scores to enhance contrast.
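To give an intuition for what "sharpening" can mean here, the sketch below rescales similarity scores to the range 0 to 1 and raises them to a power, which pushes middling scores toward zero and makes the best matches stand out. The exact transformation used by the tool is not specified in this post, so the `sharpen` function and its `power` parameter are assumptions for illustration only.

```python
import math
from typing import List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def sharpen(scores: List[float], power: float = 3.0) -> List[float]:
    """Min-max normalize scores to [0, 1], then raise to a power to increase contrast."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        # All scores identical: nothing to contrast, so return a flat map.
        return [0.0 for _ in scores]
    return [((s - lo) / (hi - lo)) ** power for s in scores]


# Raw similarities between a query and three chunks (illustrative values).
raw = [0.2, 0.5, 0.8]
colors = sharpen(raw)  # the middle score drops to about 0.125 on a 0-1 scale
```

Raw embedding similarities often sit in a narrow band, so some contrast-enhancing step of this kind helps the highlighted regions stand out on the map.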

Search works best with:

- Lists of keywords
- Short descriptive phrases
- Captions that capture the kind of content you’re looking for

For example, searching for “A paragraph talking about birds” in Darwin’s Voyage clearly highlights regions discussing geese, raptors, and hummingbirds, even when these specific words aren’t mentioned.

Python Interface

For developers and researchers, the Python package offers more flexibility. Here is a minimal example:

    from birds_eye_view.core import ChunkCollection
    from birds_eye_view.plotting import visualize_chunks

    # Toy dataset: one chunk per integer, with a few extra fields attached.
    chunks = [
        {
            "text": str(n),
            "number": n,
            "modulo 10": n % 10,
            "quotient 10": n // 10,
        }
        for n in range(1000)
    ]

    # Build the collection, process it, then render the interactive map.
    collection = ChunkCollection.load_from_list(chunks)
    collection.process_chunks()
    visualize_chunks(collection, n_connections=0)

Install via pip:

    pip install git+https://github.com/aVariengien/birds-eye-view.git

The Python interface allows integration with existing workflows and custom data processing pipelines. See the GitHub repository for detailed documentation and richer examples.

Current Limitations and Future Development

Bird’s eye view remains an early prototype with rough edges. The code is experimental, and the interface needs refinement. Yet even in this state, it demonstrates the value of having alternative ways to access information at scale.

Epistemic value of bird’s eye view. When we rely solely on filtered information, we miss the context and connections that might challenge our assumptions. Bird’s eye view enables discovery of “unknown unknowns” - data points that would have never surfaced through targeted searches or recommendations.

Several promising directions for development emerge:

- Visualisation beyond emojis. While emojis are fun and provide a good way to visualize collections of text that span different topics, they become less relevant when analyzing a corpus with homogeneous topics. A possible improvement would be to assign keywords to clusters at different scales.
- Beyond Semantic Mapping. The current implementation relies on general semantic embeddings, but we could develop specialized “prisms” for viewing content. Imagine toggling between maps organized by writing style, technical complexity, or chronological relationships.
- Comparative Embedding Space. We could anchor new documents in familiar semantic spaces. For instance, when reading a new paper, you could visualize it within a reference space made from a familiar set of papers from the same field. This would leverage your existing mental models to accelerate comprehension of new material.

The Broader Picture: Building Alternative Futures for AI Development

Bird’s eye view points to a broader possibility: AI tools that enhance rather than replace human cognitive practices. Current AI development focuses heavily on increasingly autonomous and general agents. This direction is driven by major AI labs racing towards artificial general intelligence (AGI), motivated by economic incentives and a particular vision of progress.

This narrow focus on agent AI creates an imagination shortage in the field. While the pursuit of increasingly powerful autonomous agents might be the fastest path to transformative AI, it’s also a risky one.

We need alternative development paths that:

- Provide immediate societal benefits that scale safely
- Create tools that augment rather than replace human capabilities
- Foster human connections and improve collective decision-making
- Demonstrate that “AI progress” doesn’t have to mean “increasingly autonomous agents”

Current AI tools prioritize immediate ease of use - you can chat with them like a person from day one. This design principle, while valuable, might be blocking exploration of tools that require practice to master. Like musical instruments or programming languages, some tools deliver their full value only after users develop new mental models and ways of thinking. Bird’s eye view exemplifies this: it disrupts normal reading patterns but potentially enables new ways of understanding text.

The goal isn’t to make tools unnecessarily complex, but to recognize that some valuable capabilities might only emerge through sustained engagement and practice. This approach frames AI systems not just as autonomous entities, but as mediators - “smart pipes” that can:

- Connect humans with each other and with information in novel ways
- Create new cognitive artifacts and ways of thinking
- Increase the bandwidth between humans and machines, leading to better intuitive understanding of their behavior

This suggests an alternative narrative for AI development: instead of racing towards autonomous superintelligent entities, we could build towards a healthy ecosystem of hybrid human-AI cognitive tools. “Healthy” here means a multi-level system that maintains balance through:

- Collective epistemic practices that help us understand the system’s dynamics
- Information governance that enables informed collective decision-making
- Technical and social mechanisms to correct course when needed

While major AI labs might continue their current trajectory, developing and demonstrating compelling alternatives could help shift public perception and support towards safer, more beneficial directions for AI progress.
