Published on December 20, 2024 2:17 PM GMT

Executive Summary

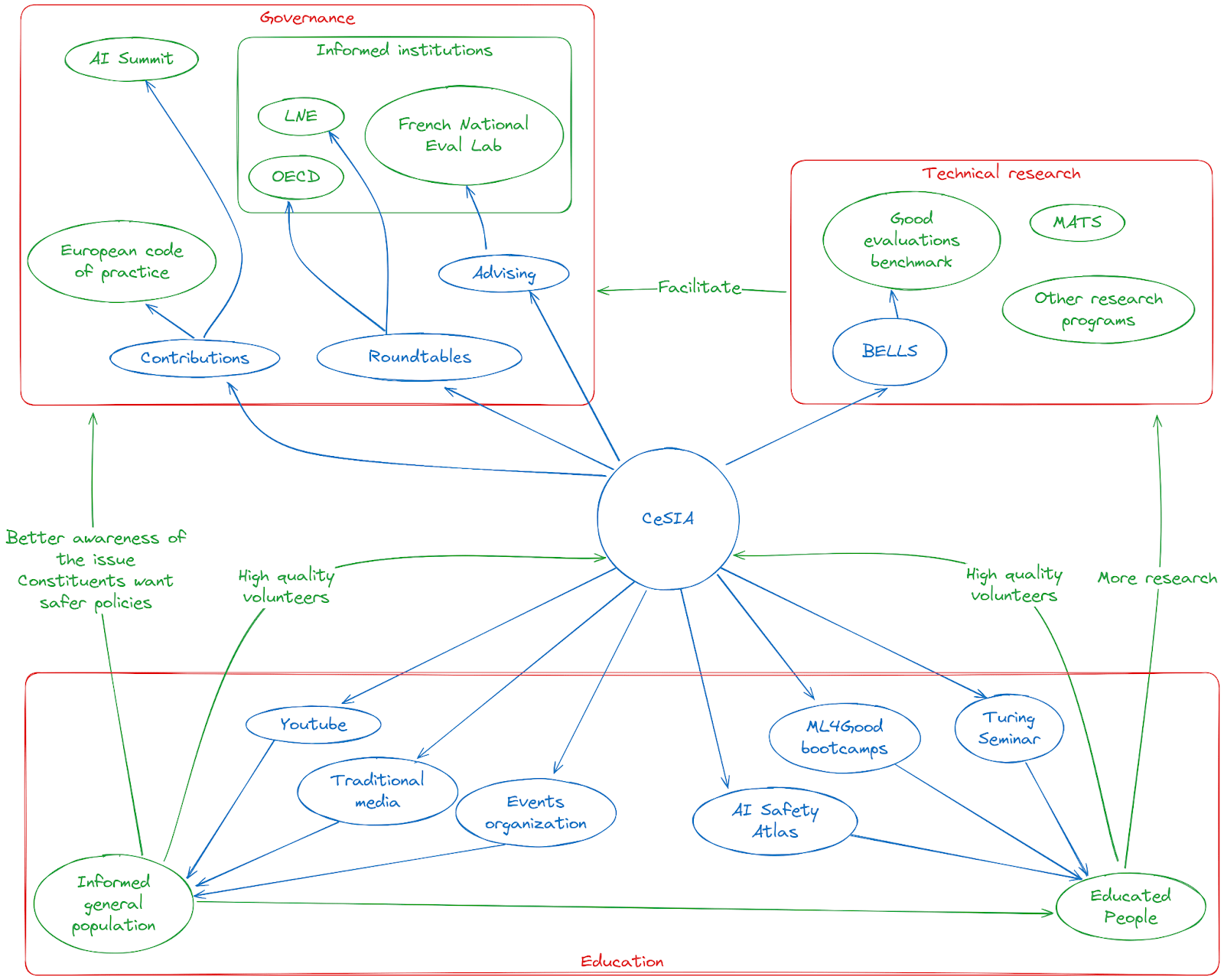

The Centre pour la Sécurité de l'IA[1] (CeSIA, pronounced "seez-ya") or French Center for AI Safety is a new Paris-based organization dedicated to fostering a culture of AI safety at the French and European level. Our mission is to reduce AI risks through education and information about potential risks and their solutions.

Our activities span three main areas:

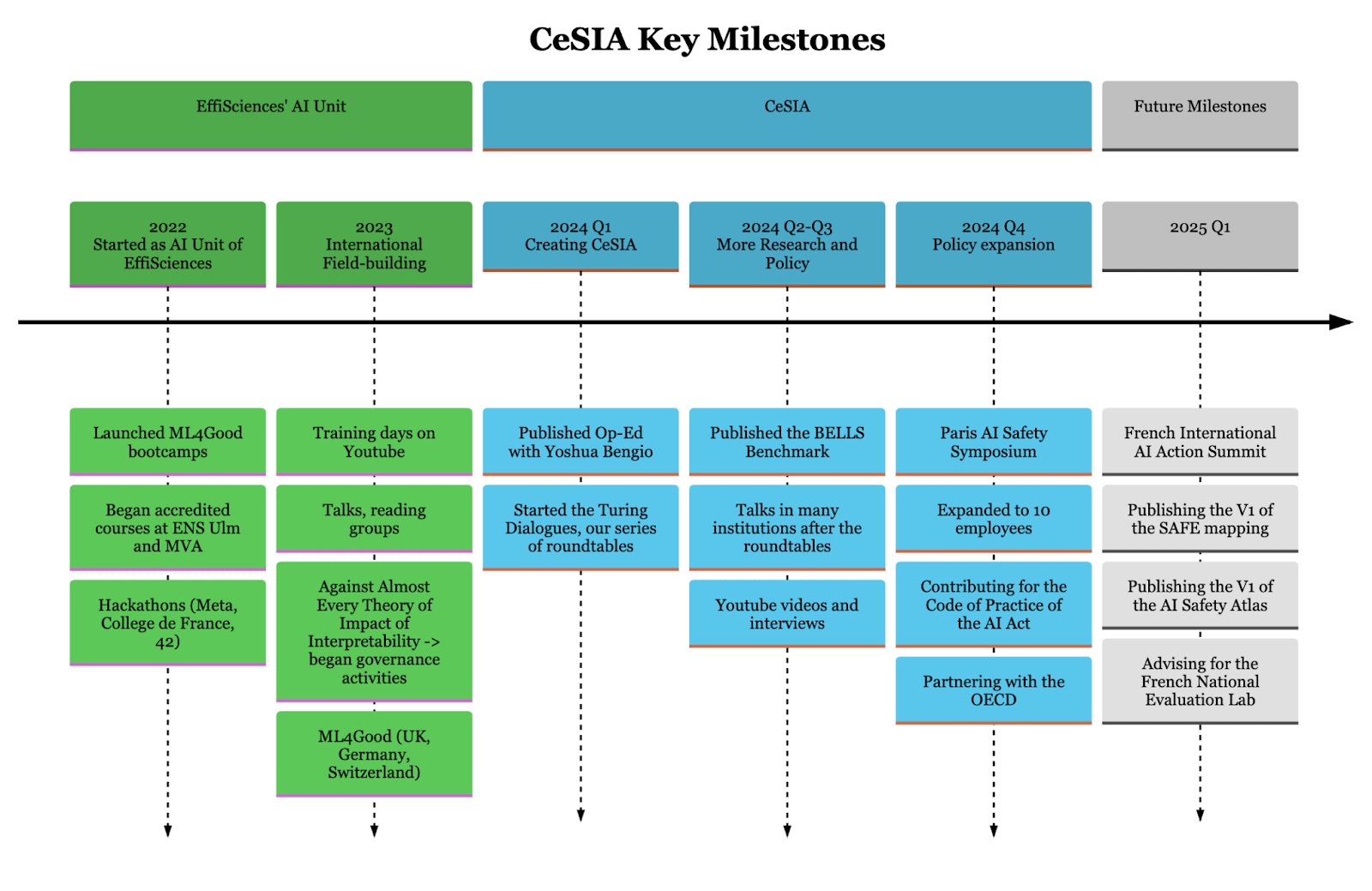

- Education: We began our work by creating the ML4Good bootcamps, which have been replicated a dozen times worldwide. We also offer accredited courses at ENS Ulm (France's most prestigious university for mathematics and computer science) and in the MVA master program, the first and only accredited AI safety courses in France[2]. We are currently adapting and improving the course into an AI safety textbook.
- Technical R&D: CeSIA's current technical research focuses on creating benchmarks for AI safeguard systems, establishing the first framework of its kind for evaluating monitoring systems (which is different from evaluating the model itself).
- Advocacy: We are actively engaged in shaping AI policy through our work on the EU General-Purpose AI Code of Practice and contributions to the French AI Action Summit. We advise the government on establishing the French National AI Evaluation Lab (the French AISI). Recently, we organized the Paris AI Safety symposium featuring keynote speakers Yoshua Bengio, Stuart Russell, and Dan Nechita. Our public outreach extends to advising prominent French YouTubers, with one resulting video reaching three million views.

Our team consists of 8 employees, 3 freelancers, and numerous volunteers.

By pursuing high-impact opportunities across multiple domains, we create synergistic effects between our activities. Our technical work informs our policy recommendations with practical expertise. Through education, we both identify and upskill talented individuals. Operating at the intersection of technical research and governance builds our credibility while advancing our goal of informing policy. This integrated approach allows us to provide policymakers with concrete, implementable technical guidance.

Mission

The future of general-purpose AI technology is uncertain, with a wide range of trajectories appearing possible even in the near future, including both very positive and very negative outcomes. But nothing about the future of AI is inevitable. It will be the decisions of societies and governments that will determine the future of AI.

– introduction of the International Scientific Report on the Safety of Advanced AI: Interim Report.

Our mission: Fostering a culture of AI safety by educating and informing about AI risks and solutions.

AI safety is a socio-technical problem that requires a socio-technical solution. According to the International Scientific Report on the Safety of Advanced AI, one of the most critical factors shaping the future will be society’s response to AI development. Unfortunately, many developers, policymakers, and most of the population remain completely oblivious to AI's potentially catastrophic risks.

We believe AI safety needs a social approach to be tackled correctly. Technical AI safety alone is not enough, and solutions found through this route will likely be bottlenecked in any case by policymakers' and the public's understanding of the issue.

We want to focus on such bottlenecks and maximize our counterfactual impact by targeting actions that would likely not be taken otherwise and by having clear theories of change for our projects. We will write further articles detailing how we think through such counterfactuals and evaluate our projects quantitatively. Because the future is uncertain, we mix several approaches so that we are not trapped in the failure mode of any single one.

We think that at its root, AI safety is a coordination problem, and thus, awareness and engagement on multiple fronts are going to be crucial catalysts for the adoption of best practices and effective regulation.

European and French context

As we are located in France, our immediate focus is on what we can achieve within France and Europe. Both regions are becoming increasingly crucial for AI safety, particularly as France prepares to host the next International AI Action Summit in February 2025, which follows the UK’s Bletchley summit in November 2023 and South Korea’s follow-up summit in May 2024.

France has positioned itself as a significant AI hub in Europe, hosting major research centers and offices of leading AI companies including Meta, Google DeepMind, Hugging Face, Mistral AI, and recently OpenAI. This concentration of AI development makes French AI policy particularly influential, as seen during the EU AI Act negotiations, where France expressed significant reservations about the legislation.

The country's strong advocacy for open-source AI development raises concerns about distributed misuse of future AIs, reduced control mechanisms, and potential risks from autonomous replication. As one of the organizations involved in drafting the EU AI Act's Code of Practice, we're working to ensure robust safety considerations for frontier AI systems are integrated into these guidelines. You can read more on France’s context here.

After the summit, we may expand our focus internationally.

Main activities

Our current activities revolve around four pillars:

Policy advocacy & Governance

Rationale: Engaging directly with key institutions and policymakers allows them to better understand the risks. This helps raise awareness and informs decision-making.

- We organized a series of round tables with many important actors like the OECD[3], Mistral AI, Mozilla, Mila, and some important French regulatory bodies: CNIL[4], ANSSI[5], LNE[6], etc.
- We had the opportunity to present AI risks at the headquarters of many of those organizations.
- We have close links with the LNE, which is going to set up the future French national AI evaluation organization, the closest French equivalent of the AI Safety Institutes.
- We are collaborating with the Global Partnership on AI on the SAFE project, which is going to be a mapping of the different risks and a list of solutions for each risk.
- We partnered with the OECD to share information on how to set up quantitative risk thresholds.
- We released a blog post on the OECD's AI Policy Observatory that explained the need for solid global coordination and described the landscape of the AI Safety Institutes in different countries.
- Our contribution to the AI Action Summit expert consultation was cited 11 times in the final report presented to summit organizers.
- We co-organized a symposium where Yoshua Bengio, Stuart Russell, and Dan Nechita gave talks. A key point from the panel was the unanimous interest in setting up ‘red lines’ to manage the risks of AI.
- We are contributing to the drafting of the AI Office’s Code of Practice, which is going to operationalize the AI Act.

R&D

Rationale: We think R&D can contribute to safety culture by creating new narratives that we can then present while discussing AI risks.

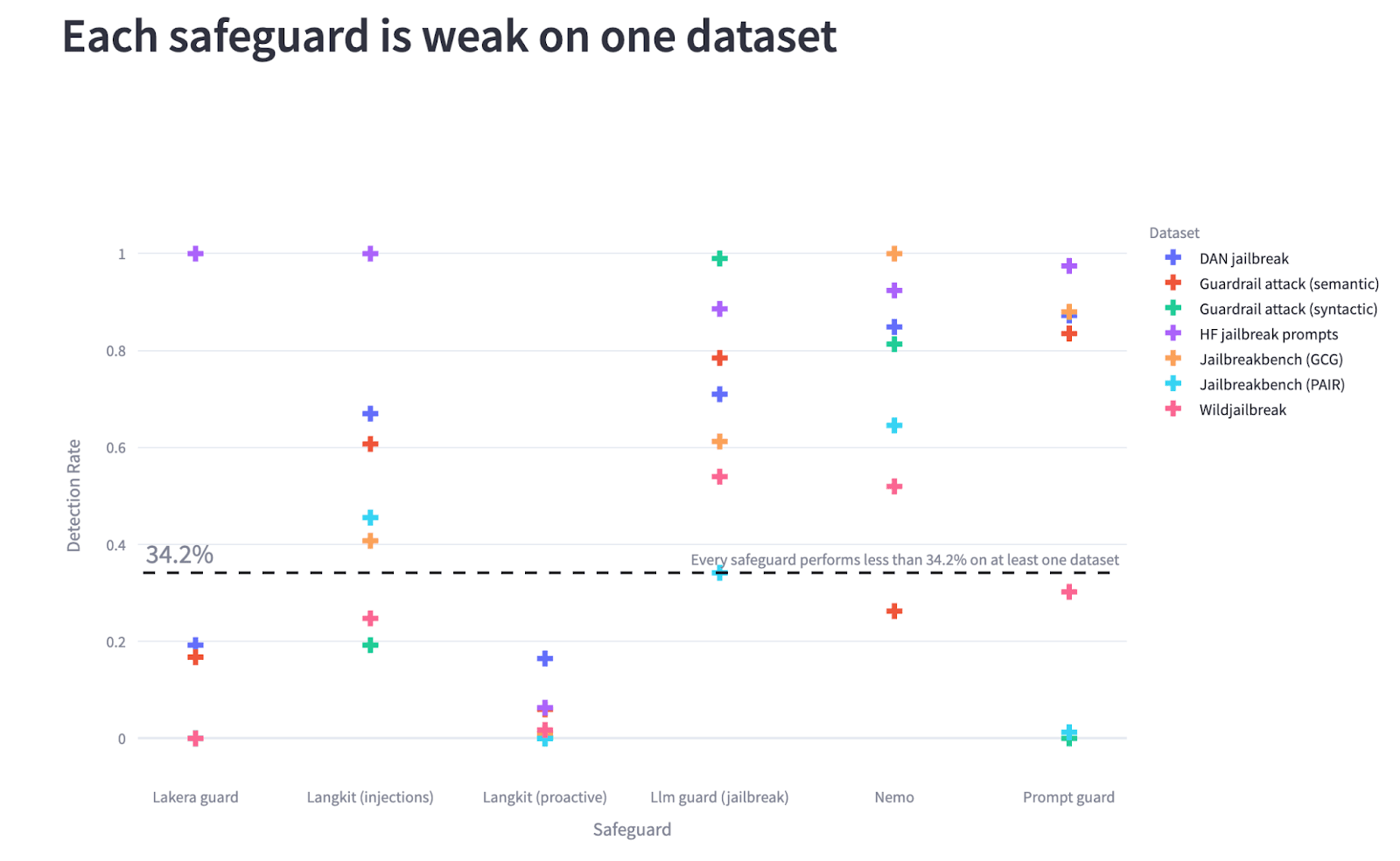

- Our main current technical project: We published BELLS, a Benchmark for the Evaluation of LLM Safeguards, at the ICML NextGen Workshop. An AI system is not just the model, but everything on top of it, including the supervisor and monitoring system. Instead of evaluating the model, we assess the robustness of the safeguard systems. While some believe it's straightforward to monitor LLMs, we think this task is actually complex and fraught with challenges. Evaluating these safeguards is not trivial, and there is no widely recognized methodology for doing so. We show that existing guardrails often lack robustness in situations not anticipated during their training. The BELLS benchmark has been included in the OECD catalogue of tools and metrics for trustworthy AI.
  - New narrative: Evaluations and safeguards are not ready yet. They are full of holes, and we have had many blind spots in the past. We probably still have many blind spots today.
- We published "What convincing warning shot could help prevent extinction from AI?", in which we introduce warning shot theory, a socio-technical agenda aimed at characterizing 1) future AI incidents, and 2) the reaction of society in response to those incidents.
  - New narrative: We might be heading to an event horizon, after which humanity's fate will be irreversible. People might not react by default.
- We co-organized and originated the idea of the AI capabilities and risks demo-jam: Creating visceral interactive demonstrations.
  - We think demos like those are a great way to present and showcase dangerous capabilities. When we present AI risks, we generally aim to show concrete failure modes that have already been showcased and avoid discussing theoretical stuff like the orthogonality thesis.
- We explored constructability as a new approach for safe-by-design AI.
  - New narrative: We think it might be possible to create plainly coded AGI. This is obviously very exploratory, but if it worked we think this could be safer than deep learning.

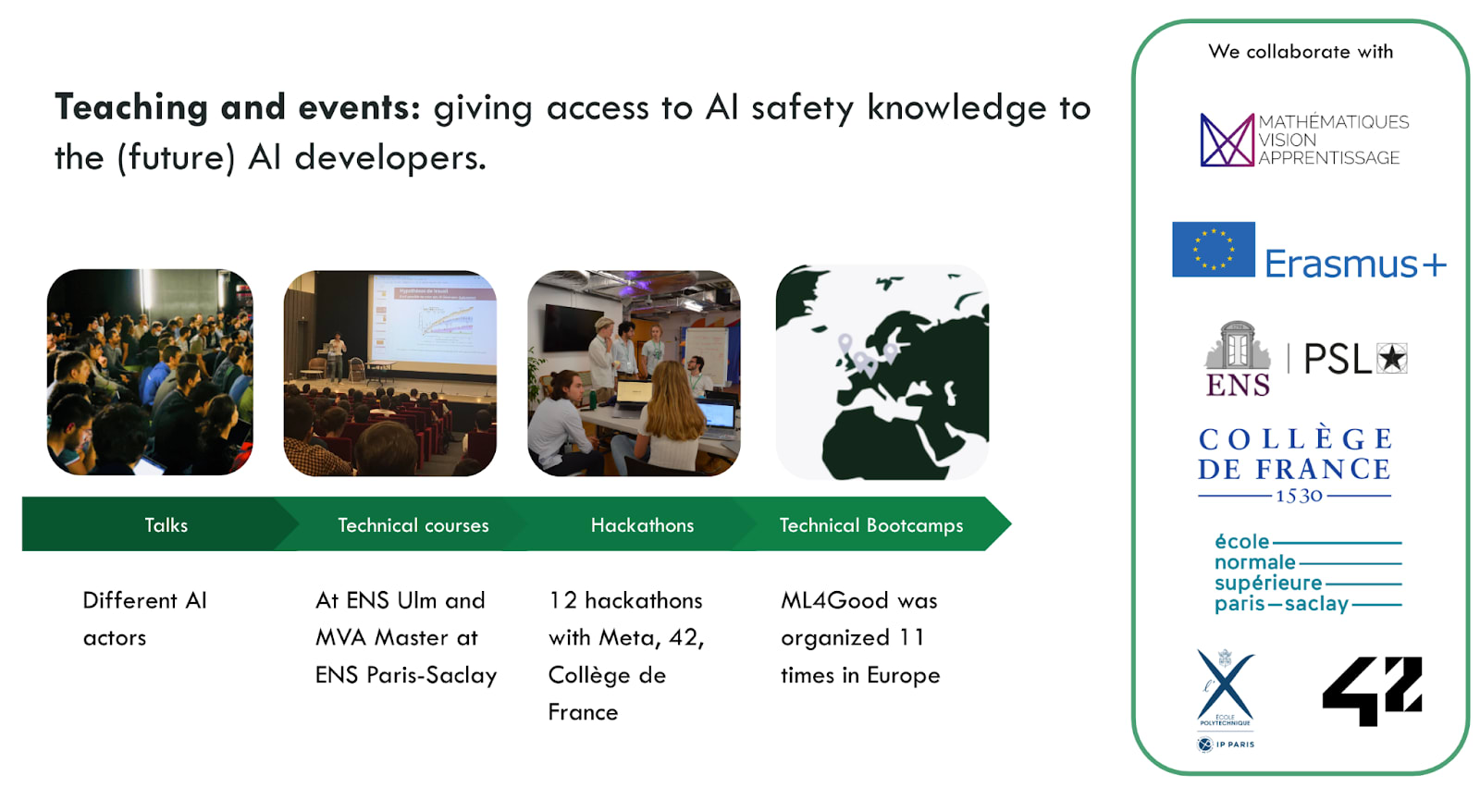

Education & field-building

Rationale: Educating the next generation of AI researchers is important. By offering university courses, bootcamps, and teaching materials, we can attract and upskill top talent.

- We offer the only accredited university course on AI safety of general-purpose AI systems in France (and the first one in continental Europe since 2022?) at ENS Ulm and ENS Paris-Saclay, two top universities in France. The Master of AI in Paris-Saclay is the most prestigious in France and gets the majority of French talent. Our course is published on YouTube (in French, sorry!).
- We created ML4Good, a 10-day bootcamp to upskill in technical AI safety, which has been reproduced over 10 times in Europe and internationally, and we were recently funded by Erasmus+ from the European Commission. We think ML4Good is SotA to get smart people to enter and do valuable things in 10 days. ML4Good is going to be scaled further to 10 bootcamps/year with the creation of a dedicated organization.[7]
- We are developing a textbook on AI safety, the AI Safety Atlas, thanks mostly to support from Ryan Kidd (via Manifund regrant). More information soon!
- We organize about one small event a week, either at ENS Ulm in Paris or on our Discord.
- We are contributing to a MOOC in collaboration with ENS Paris-Saclay (WIP).

Education & mass outreach

Rationale: By educating the general population and, in particular, the scientific communities, we aim to promote a robust culture of AI safety within public institutions, academia, and the industry. Public awareness is the foundation of societal engagement, which in turn influences institutional priorities and decision-making processes. Policymakers are not isolated from public opinion; they are often swayed by societal narratives and the concerns of their families, colleagues, and communities.

- We published an op-ed supported by Yoshua Bengio (original version in French): “It is urgent to define red lines that must not be crossed”.
- We collaborated with, advised, or gave interviews to 9 French YouTubers, with one video reaching more than 3 million views in a month. Given that half of the French people watch YouTube, this video reached almost 10% of the French population using YouTube, which might be more than any AI safety video in any other language.

Team

Our starting members include:

- Charbel-Raphael Segerie, Executive Director, co-founder.
- Florent Berthet, COO, co-founder.
- Manuel Bimich, Head of Strategy, co-founder.
- Vincent Corruble, Scientific Director.
- Charles Martinet, Head of Policy.
- Markov Grey, Research Communication.
- Jonathan Claybrough, GRASP project Coordinator.
- Épiphanie Gédéon, Executive Assistant, and R&D.
- Hadrien Mariaccia, Research Engineer.
- Lola Elissalde, Communication.
- Three policy analysts whom we are in the process of hiring.

Board, in alphabetical order:

- Adam Shimi, Control AI
- Agatha Duzan, Safe AI Lausanne
- Alix Pham, Simon Institute for Long term Governance
- Jonathan Claybrough, European Network for AI Safety
- Milo Rignell, LightOn

We also collaborate with Alexandre Variengien (co-founder of CeSIA), Siméon Campos (SaferAI, co-founder of EffiSciences), Diego Dorn (the main contributor to our BELLS project, head teacher at some ML4Good bootcamps), Nia Gardner (Executive Director of the new ML4Good organization), and also Jérémy Andréoletti, Amaury Lorin, Jeanne Salle, Léo Dana, Lucie Philippon, Nicolas Guillard, Michelle Nie, Simon Coumes, and Sofiane Mazière.

Funding

We have received several generous grants from the following funders (and multiple smaller ones not included here), who we cannot thank enough:

- Effektiv Spenden
- AI Safety Tactical Opportunities Fund (AISTOF)
- Longview Philanthropy
- A recently established Swedish foundation
- Survival and Flourishing Fund
- Some ML4Good bootcamps have been funded by Erasmus+, a program of the European Commission
- The textbook has been mainly funded by Ryan Kidd, via Manifund

These grants fell into two primary categories: unrestricted funds for CeSIA’s general operations, and targeted support for specific project initiatives.

Next steps

We created the organization in March 2024. Most of the work described above was done during the last 6 months, with the exception of our longer-running field-building activities, such as the ML4Good bootcamps.

It’s a lot of different types of activities! We may narrow our focus in the future if this makes sense.

The next six months will be pretty intense, between the AI Action Summit, the drafting of the AI Act's Code of Practice, and completing ongoing projects such as the textbook.

We invite researchers, educators, and policymakers to collaborate with us as we navigate this important phase. Feel free to reach out at contact@securite-ia.fr.

Here is our Substack if you want to follow us. You can also meet us in person in January-February at the Turing Dialogues on AI Governance, our series of roundtables, which is an official side event of the AI Action Summit.

Thanks to the numerous people who have advised us, contributed, volunteered, and beneficially influenced us.

[1] “Safety” usually translates to “Sécurité” in French, and “Security” translates to “Sûreté”.

[2] and in the European Union?

[3] OECD: Organisation for Economic Co-operation and Development.

[4] CNIL: National Commission on Informatics and Liberty.

[5] ANSSI: National Cybersecurity Agency of France.

[6] LNE: National Laboratory of Metrology and Testing.

[7] For example, during ML4Good Brazil, we created and reactivated 4 different AI Safety field-building groups in Latin America: Argentina, Colombia, AI safety Brazil and ImpactRio, and ImpactUSP (University of São Paulo). Participants consistently rate us above 9/10 for recommending this bootcamp.
