Published on December 3, 2024 4:33 AM GMT

MIRI is a nonprofit research organization with a mission of addressing the most serious hazards posed by smarter-than-human artificial intelligence. In our general strategy update and communications strategy update earlier this year, we announced a new strategy that we’re executing on at MIRI, and several new teams we’re spinning up as a result. This post serves as a status update on where things stand at MIRI today.

We originally planned to run a formal fundraiser this year, our first one since 2019. However, we find ourselves with just over two years of reserves, which is more than we’ve typically had in our past fundraisers.

Instead, we’ve written this brief update post, discussing recent developments at MIRI, projects that are in the works, and our basic funding status. In short: We aren’t in urgent need of more funds, but we do have a fair amount of uncertainty about what the funding landscape looks like now that MIRI is pursuing a very different strategy than we have in the past. Donations and expressions of donor interest may be especially useful over the next few months, to help us get a sense of how easy it will be to grow and execute on our new plans.

You can donate via our Donate page, or get in touch with us here. For more details, read on.

About MIRI

For most of MIRI’s 24-year history, our focus has been on a set of technical challenges: “How could one build AI systems that are far smarter than humans, without causing an extinction-grade catastrophe?”

Over the last few years, we’ve come to the conclusion that this research, both at MIRI and in the larger field, has gone far too slowly to prevent disaster. Although we continue to support some AI alignment research efforts, we now believe that absent an international government effort to suspend frontier AI research, an extinction-level catastrophe is extremely likely.

As a consequence, our new focus is on informing policymakers and the general public about the dire situation, and attempting to mobilize a response.

We began scaling back our alignment research program in late 2020 and 2021, and began pivoting to communications and governance research in 2023. Over the past year, we’ve been focusing on hiring and training new staff, and we have a number of projects in the pipeline, including new papers from our technical governance team, a new introductory resource for AI risk, and a forthcoming book.

We believe that our hard work in finding the right people is really paying off; we’re proud of the new teams we’re growing, and we’re eager to add a few more communications specialists and technical governance researchers in 2025. Expect some more updates over the coming months as our long-form projects come closer to completion.

Funding Status

As noted above, MIRI hasn’t run a formal fundraiser since 2019. Thanks to the major support we received in 2020 and 2021, as well as our scaled-back alignment research ambitions as we reevaluated our strategy in 2021–2022, we haven’t needed to raise additional funds in some time.

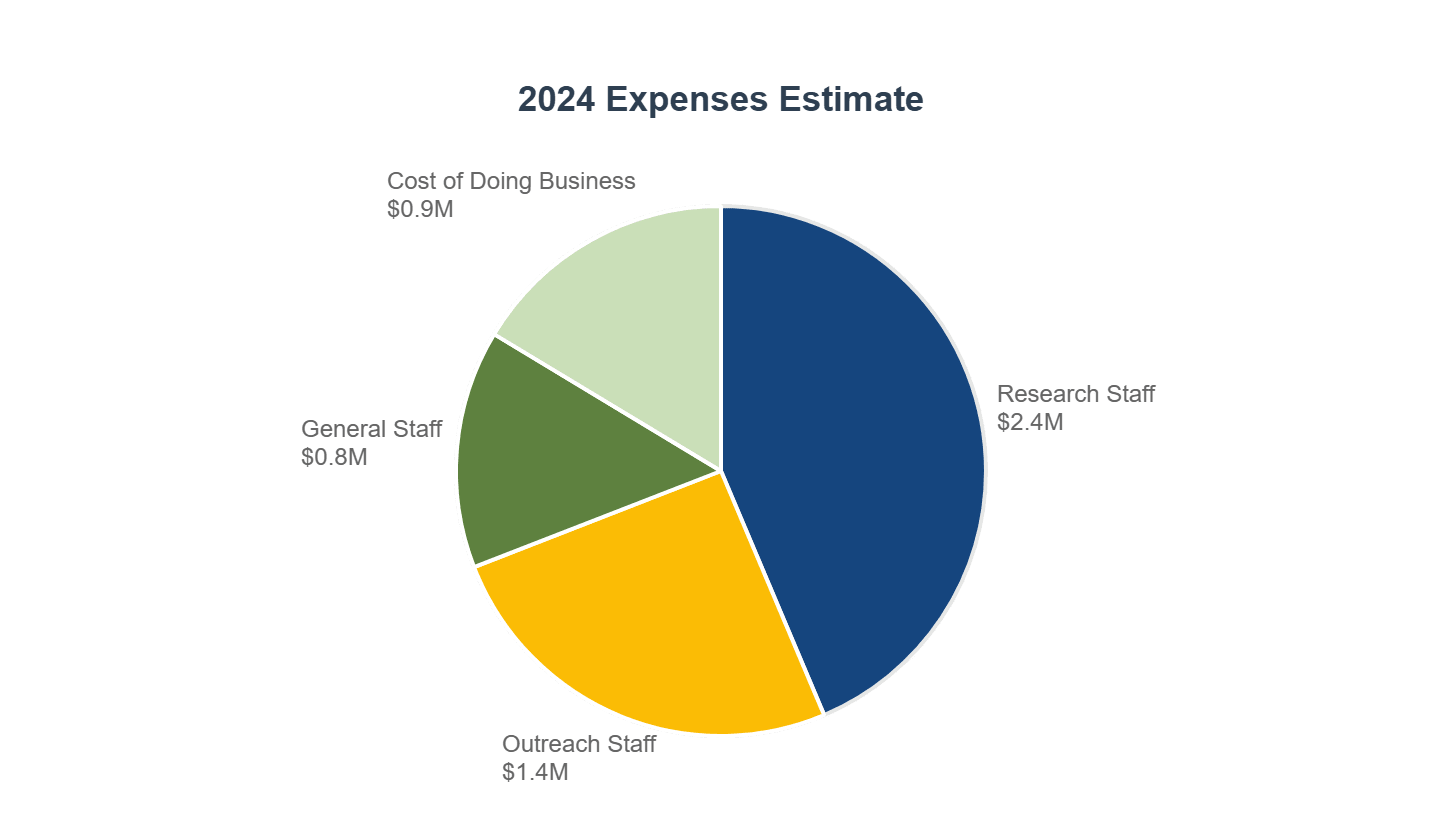

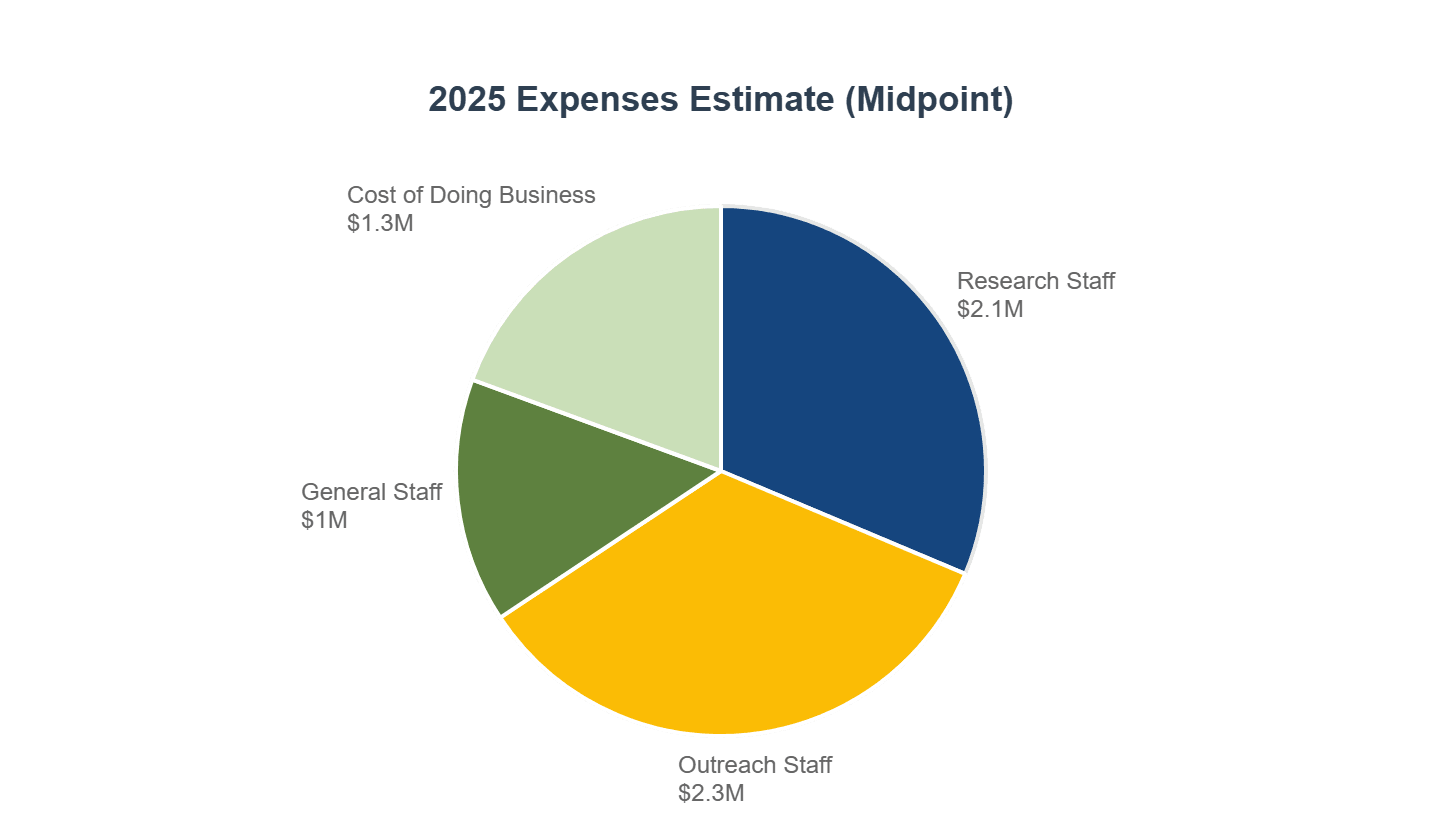

Our yearly expenses in 2019–2023 ranged from $5.4M to $7.7M, with a high in 2020 and a low in 2022. Our projected spending for 2024 is $5.6M, and (with a high degree of uncertainty, mostly about hiring outcomes) we expect our 2025 expenses to be in the range of $6.5M to $7M.

With our new strategy, we are in the process of scaling up our new communications and technical governance teams. Earlier this year, we expected to have dipped below two years of reserves at this point, but (1) our 2024 expenses were lower than expected, and (2) the majority of our reserves are invested in a broad range of US and foreign stocks and bonds, and the market performed well in 2024. As a consequence, given our mainline staff count and hiring estimates, we now project that our $16M end-of-year reserves will be enough to fund our activities for just over two years.
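As a rough sanity check on the "just over two years" figure, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration, not MIRI's actual projection model: it assumes a flat annual spend equal to the projected 2025 range, and it ignores investment returns, new donations, and any growth in expenses after 2025. The function and variable names are hypothetical.

```python
def runway_years(reserves_musd: float, annual_expenses_musd: float) -> float:
    """Years of runway at a flat annual spend.

    Simplifying assumptions (not from the post): no investment returns,
    no new donations, and no change in spending from year to year.
    """
    if annual_expenses_musd <= 0:
        raise ValueError("annual expenses must be positive")
    return reserves_musd / annual_expenses_musd


# Figures quoted in the post, in millions of US dollars.
RESERVES_MUSD = 16.0       # projected end-of-year reserves
EXPENSES_LOW_MUSD = 6.5    # low end of projected 2025 expenses
EXPENSES_HIGH_MUSD = 7.0   # high end of projected 2025 expenses

# Higher spending gives the shorter runway, lower spending the longer one.
runway_at_high_spend = runway_years(RESERVES_MUSD, EXPENSES_HIGH_MUSD)
runway_at_low_spend = runway_years(RESERVES_MUSD, EXPENSES_LOW_MUSD)

print(f"{runway_at_high_spend:.1f} to {runway_at_low_spend:.1f} years")
# prints "2.3 to 2.5 years"
```

Under these simplified assumptions, the quoted reserves cover roughly 2.3 to 2.5 years of spending, which is consistent with the "just over two years" estimate above.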

We generally aim to maintain at least two years of reserves, and thanks to the generosity of our past donors, we’re still able to hit that target.

However, we’re also in a much more uncertain funding position than we have been in recent years: we don’t know how many of the funders who supported our alignment research in 2019 will also be interested in supporting our communications and policy efforts going forward, and much has changed in the world in the last five years.

It’s entirely possible that it will be easier to find support for MIRI’s new strategy than for our past alignment research program. AI extinction scenarios have received more scientific and popular attention over the last two years, and the basic case for MIRI’s new strategy is a lot simpler and less technical than was previously the case: “Superintelligence is likely to cause an extinction-grade catastrophe if we build it before we’re ready; we’re nowhere near ready, so we shouldn’t build it. Few scientists are candidly explaining this situation to policymakers or the public, and MIRI has a track record of getting these ideas out into the world.”

That said, it’s also possible that it will be more difficult to fund our new efforts. If so, this would force us to drastically change our plans and scale back our ambitions.

One of our goals in sharing all of this now is to solicit donations and hear potential donors’ thoughts, which we can then use as an early indicator of how likely it is that we’ll be able to scale our teams and execute on our plans over the coming years. So long as we’re highly uncertain about the new funding environment, donations and feedback from our supporters can make a big difference in how we think about the next few years.

If you want to support our efforts, visit our Donate page or reach out.

(Posting on Alex Vermeer's behalf; MIRI Blog mirror.)
