Introduction

The rise of Large Language Models (LLMs) has marked a significant advancement in the era of Artificial Intelligence (AI). During this period, Cloud Graphic Processing Units (GPUs) offered by Paperspace + DigitalOcean have emerged as pioneers in providing high-quality NVIDIA GPUs, pushing the boundaries of computational technology.

NVIDIA, was founded in 1993 by three visionary American computer scientists - Jen-Hsun (“Jensen”) Huang, former director at LSI Logic and microprocessor designer at AMD; Chris Malachowsky, an engineer at Sun Microsystems; and Curtis Priem, senior staff engineer and graphic chip designer at IBM and Sun Microsystems - embarked on its journey with a deep focus on creating cutting-edge graphics hardware for the gaming industry. This dynamic trio's expertise and passion set the stage for NVIDIA's remarkable growth and innovation.

As tech evolution happened, NVIDIA recognized the potential of GPUs beyond gaming and explored the potential for parallel processing. This led to the development of CUDA (originally Compute Unified Device Architecture) in 2006, helping developers around the globe to use GPUs for a variety of heavy computational tasks. This led to the stepping stone for a deep learning revolution, positioning NVIDIA as the leader in the field of AI research and development.

NVIDIA's GPUs have become integral to AI, powering complex neural networks and enabling breakthroughs in natural language processing, image recognition, and autonomous systems.

Introduction to the H100: The latest advancement in NVIDIA's lineup

The company’s commitment to innovation continues with the release of the H100 GPU, a powerhouse that represents the peak of modern computing. With its cutting-edge Hopper architecture, the H100 is set to revolutionize deep learning, offering unmatched performance and efficiency.

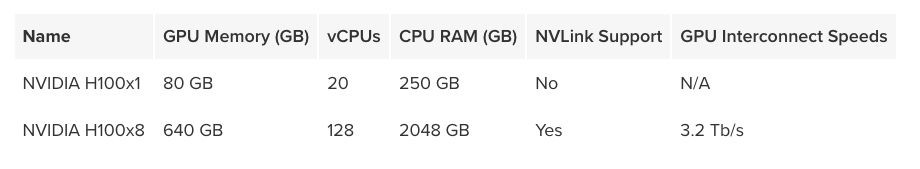

The NVIDIA H100 Tensor Core GPU, equipped with the NVIDIA NVLink™ Switch System, allows for connecting up to 256 H100 GPUs to accelerate processing workloads. This GPU also features a dedicated Transformer Engine designed to handle trillion-parameter language models efficiently. Thanks to these technological advancements, the H100 can enhance the performance of large language models (LLMs) by up to 30 times compared to the previous generation, delivering cutting-edge capabilities in conversational AI.

NYC2 datacenter.

The Architecture of the H100

The NVIDIA Hopper GPU architecture delivers high-performance computing with low latency and is designed to operate at data center scale. Powered by the NVIDIA Hopper architecture, the NVIDIA H100 Tensor Core GPU marks a significant leap in computing performance for NVIDIA's data center platforms. Built using 80 billion transistors, the H100 is the most advanced chip ever created by NVIDIA, featuring numerous architectural improvements.

As NVIDIA's 9th-generation data center GPU, the H100 is designed to deliver a substantial performance increase for AI and HPC workloads compared to the previous A100 model. With InfiniBand interconnect, it provides up to 30 times the performance of the A100 for mainstream AI and HPC models. The new NVLink Switch System enables model parallelism across multiple GPUs, targeting some of the most challenging computing tasks.

- Built on Hopper architecture H100 GPUs is designed for high-performance computing and AI workloads.H100 provides fourth-generation tensor cores which allows faster communications between chips when compared to A100.Combines software and hardware optimizations to accelerate Transformer model training and inference, achieving up to 9x faster training and 30x faster inference.Fourth-Generation NVLink is responsible for the increase in bandwidth for multi-GPU operations, providing 900 GB/sec total bandwidth.

These architectural advancements make the H100 GPU a significant step forward in performance and efficiency for AI and HPC applications.

Key Features and Innovations

Fourth-Generation Tensor Cores:

- The fourth-gen tensor cores provides up to 6x faster than the A100 for chip-to-chip communication.Provides 2x the Matrix Multiply-Accumulate (MMA) computational rates per Streaming Multiprocessor or SM for equivalent data types.Offers 4x the MMA rate using the new FP8 data type compared to previous 16-bit floating-point options.Includes a Sparsity feature that doubles the performance of standard Tensor Core operations by exploiting structured sparsity in deep learning networks.

New DPX Instructions:

- Accelerates dynamic programming algorithms by up to 7x over the A100 GPU.Useful for algorithms like Smith-Waterman for genomics and Floyd-Warshall for optimizing routes in dynamic environments.

Improved Processing Rates:

- Achieves 3x faster IEEE FP64 and FP32 processing rates compared to the A100, thanks to higher clocks and additional SM counts.

Thread Block Cluster Feature:

- Extends the CUDA programming model to include Threads, Thread Blocks, Thread Block Clusters, and Grids.Allows multiple Thread Blocks to synchronize and share data across different SMs.

Asynchronous Execution Enhancements:

- Features a new Tensor Memory Accelerator (TMA) unit for efficient data transfers between global and shared memory.Supports asynchronous data copies between Thread Blocks in a Cluster.Introduces an Asynchronous Transaction Barrier for atomic data movement and synchronization.

New Transformer Engine:

- Combines software with custom Hopper Tensor Core technology to accelerate Transformer model training and inference.Automatically manages calculations using FP8 and 16-bit precision, delivering up to 9x faster AI training and 30x faster AI inference for large language models compared to the A100.

HBM3 Memory Subsystem:

- Offers nearly double the bandwidth of the previous generation.H100 SXM5 GPU is the first to feature HBM3 memory, providing 3 TB/sec of memory bandwidth.

Enhanced Cache and Multi-Instance GPU Technology:

- 50 MB L2 cache reduces memory trips by caching large model and dataset portions.Second-generation Multi-Instance GPU (MIG) technology offers about 3x more compute capacity and nearly 2x more memory bandwidth per GPU instance than the A100.MIG supports up to seven GPU instances, each with dedicated units for video decoding and JPEG processing.

Confidential Computing and Security:

- New support for Confidential Computing to protect user data and defend against attacks.First native Confidential Computing GPU, offering better isolation and protection for virtual machines (VMs).

Fourth-Generation NVIDIA NVLink®:

- Increases bandwidth by 3x for all-reduce operations and by 50% for general operations compared to the previous NVLink version.Provides 900 GB/sec total bandwidth for multi-GPU IO, outperforming PCIe Gen 5 by 7x.

Third-Generation NVSwitch Technology:

- Connects multiple GPUs in servers and data centers with improved switch throughput.Enhances collective operations with multicast and in-network reductions.

NVLink Switch System:

- Enables up to 256 GPUs to connect over NVLink, delivering 57.6 TB/sec of bandwidth.Supports an exaFLOP of FP8 sparse AI compute.

PCIe Gen 5:

- Offers 128 GB/sec total bandwidth, doubling the bandwidth of Gen 4 PCIe.Interfaces with high-performing CPUs and SmartNICs/DPUs.

Additional Improvements:

- Enhancements to improve strong scaling, reduce latency and overheads, and simplify GPU programming.

Data Center Innovations:

- New H100-based DGX, HGX, Converged Accelerators, and AI supercomputing systems are discussed in the NVIDIA-Accelerated Data Centers section.Details on H100 GPU architecture and performance improvements are covered in-depth in a dedicated section.

Implications for the Future of AI

GPUs have become crucial in this ever evolving field of AI and deep learning will continue to grow. The parallel processing and accelerated computing are the key advantages of H100. The tensor cores and architecture of H100 significantly increases the performance of AI models particularly LLMs. The improvement is specially during the training time and inferencing. This allows the developers and researchers to effectively work with complex models.

The H100’s dedicated Transformer Engine optimizes the training and inference of Transformer models, which are fundamental to many modern AI applications, including natural language processing and computer vision. This capability helps accelerate research and deployment of AI solutions across various fields.

Being said that blackwell is the successor to NVIDIA H100 and H200 GPUS the future GPUs are more likely to focus on further improving efficiency and reducing power consumption. Hence moving a step towards sustainable environments. Further future GPUs may offer even greater flexibility in balancing precision and performance.

The NVIDIA H100 GPU has been considered to be a cutting-edge GPU in AI and computing, shaping the future of technology and its applications across industries.

The role of the H100 in advancing AI capabilities

- Autonomous Vehicles and Robotics: The improved processing power and efficiency can help algorithms like YOLO to develop autonomous systems, making self-driving cars and robots more reliable and capable of operating in complex environments.Financial Services: AI-driven financial models and algorithms will benefit from the H100’s performance improvements, enabling faster and more accurate risk assessments, fraud detection, and market predictions.Entertainment and Media: The H100’s advancements in AI can enhance content creation, virtual reality, and real-time rendering, leading to more immersive and interactive experiences in gaming and entertainment.Research and Academia: The ability to handle large-scale AI models and datasets will empower researchers to tackle complex scientific challenges, driving innovation and discovery across various disciplines.Integration of AI with Other Technologies: The H100’s innovations may pave the path for edge computing, IoT, and 5G, enabling smarter, more connected devices and systems.

Conclusion

NVIDIA H100 represents a massive jump in AI and high-performance computing. The hopper architecture and the transformer engine, has sucessfully set up a new bar of efficiency and power. As we look to the future, the H100's impact on deep learning and AI will continue to drive innovation, more breakthroughs in fields such as healthcare, autonomous systems, and scientific research, ultimately shaping the next era of technological progress.