Datawhale Essentials

Compiled by Synced (机器之心) and Datawhale

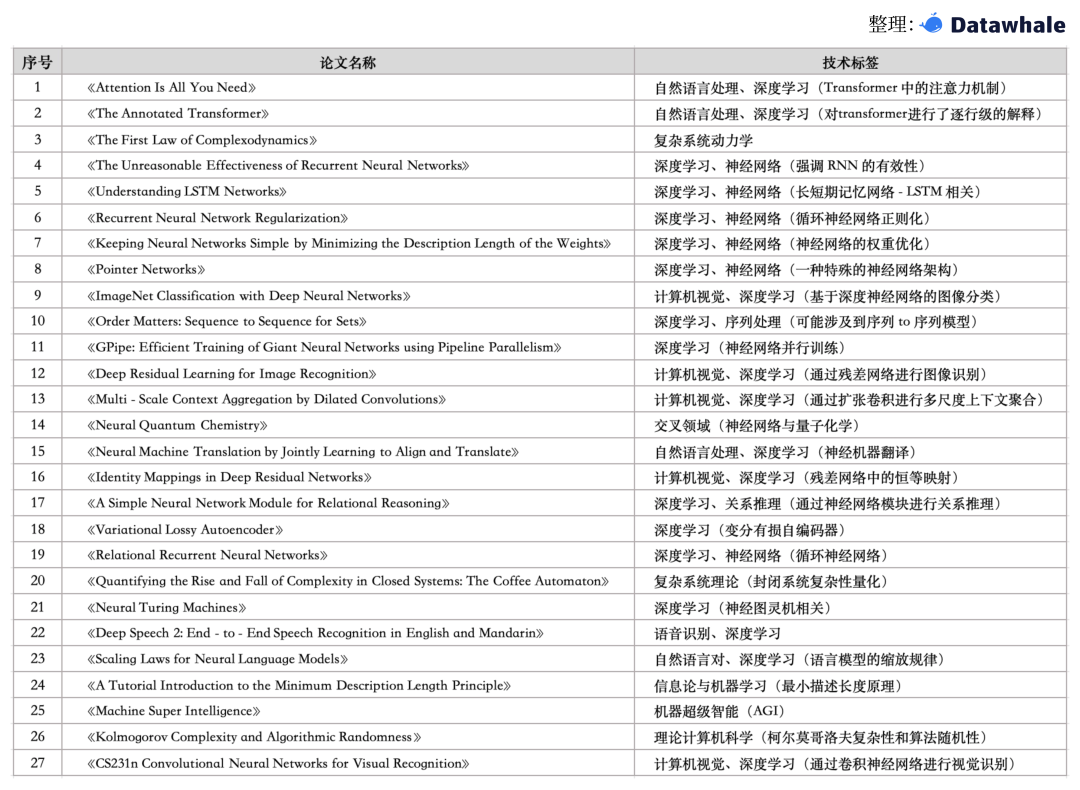

A reading list of classic papers in AI research

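As generative AI models drive a new wave of AI, more and more industries are undergoing technological change. Many practitioners, as well as researchers in the basic sciences, need to get up to speed quickly on the state of the field and pick up its essential foundations.

For anyone moving into AI, studying the research papers is an indispensable part of the process.

In 2020, when John Carmack, the legendary programmer known as the father of 3D gaming and co-founder of id Software, wanted to switch to AGI research, Ilya Sutskever, OpenAI co-founder and its former chief scientist, wrote him a reading list of AI research papers.

The list has been viewed by more than 500,000 people. According to people sharing it online, Ilya said that once you master this material, you will know 90% of what matters in AI today. Some even claim it is part of OpenAI's onboarding material.

Meanwhile, a contributor named Taro Langner extended the list and pointed out additional material worth knowing, including the work of prominent AI researchers such as Yann LeCun, as well as papers on U-Net, YOLO object detection, GANs, WaveNet, and Word2Vec.

Datawhale has compiled the complete list below: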

Complete Paper List

Convolutional Neural Networks:

"ImageNet Classification with Deep Convolutional Neural Networks"

Link: https://proceedings.neurips.cc/paper_files/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

"CS231n: Convolutional Neural Networks for Visual Recognition" (Stanford course notes)

Link: https://cs231n.github.io/

"Deep Residual Learning for Image Recognition"

Link: https://arxiv.org/pdf/1512.03385

"Identity Mappings in Deep Residual Networks"

Link: https://arxiv.org/pdf/1603.05027

"Multi-Scale Context Aggregation by Dilated Convolutions"

Link: https://arxiv.org/pdf/1511.07122

Recurrent Neural Networks:

"The Unreasonable Effectiveness of Recurrent Neural Networks" (blog post)

Link: https://karpathy.github.io/2015/05/21/rnn-effectiveness/

"Understanding LSTM Networks" (blog post)

Link: https://colah.github.io/posts/2015-08-Understanding-LSTMs/

"Recurrent Neural Network Regularization"

Link: https://arxiv.org/pdf/1409.2329

"Pointer Networks"

Link: https://arxiv.org/pdf/1506.03134

"Relational Recurrent Neural Networks"

Link: https://arxiv.org/pdf/1806.01822

"Neural Turing Machines"

Link: https://arxiv.org/pdf/1410.5401

"Deep Speech 2: End-to-End Speech Recognition in English and Mandarin"

Link: https://arxiv.org/pdf/1512.02595

"Order Matters: Sequence to Sequence for Sets"

Link: https://arxiv.org/pdf/1511.06391

"Neural Machine Translation by Jointly Learning to Align and Translate"

Link: https://arxiv.org/pdf/1409.0473

"A Simple Neural Network Module for Relational Reasoning"

Link: https://arxiv.org/pdf/1706.01427

Transformers:

"Attention Is All You Need"

Link: https://arxiv.org/pdf/1706.03762

"The Annotated Transformer"

Link: https://nlp.seas.harvard.edu/annotated-transformer/

"Scaling Laws for Neural Language Models"

Link: https://arxiv.org/pdf/2001.08361

Information Theory:

"The First Law of Complexodynamics" (blog post)

Link: https://scottaaronson.blog/?p=762

"Keeping Neural Networks Simple by Minimizing the Description Length of the Weights"

Link: https://www.cs.toronto.edu/~hinton/absps/colt93.pdf

"A Tutorial Introduction to the Minimum Description Length Principle"

Link: https://arxiv.org/pdf/math/0406077

"Kolmogorov Complexity and Algorithmic Randomness" (book)

Link: https://www.lirmm.fr/~ashen/kolmbook-eng-scan.pdf

"Quantifying the Rise and Fall of Complexity in Closed Systems: The Coffee Automaton"

Link: https://arxiv.org/pdf/1405.6903

Other:

"GPipe: Efficient Training of Giant Neural Networks using Pipeline Parallelism"

Link: https://arxiv.org/pdf/1811.06965

"Variational Lossy Autoencoder"

Link: https://arxiv.org/pdf/1611.02731

"Neural Message Passing for Quantum Chemistry"

Link: https://arxiv.org/pdf/1704.01212

"Machine Super Intelligence" (PhD thesis)

Link: https://www.vetta.org/documents/Machine_Super_Intelligence.pdf

Meta-Learning:

"Meta-Learning with Memory-Augmented Neural Networks"

Link: https://proceedings.mlr.press/v48/santoro16.pdf

"Prototypical Networks for Few-shot Learning"

Link: https://arxiv.org/abs/1703.05175

"Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks"

Link: https://proceedings.mlr.press/v70/finn17a/finn17a.pdf

Reinforcement Learning:

"Human-level concept learning through probabilistic program induction"

Link: https://amygdala.psychdept.arizona.edu/labspace/JclubLabMeetings/Lijuan-Science-2015-Lake-1332-8.pdf

"Neural Architecture Search with Reinforcement Learning"

Link: https://arxiv.org/pdf/1611.01578

"A Simple Neural Attentive Meta-Learner"

Link: https://arxiv.org/pdf/1707.03141

Self-Play:

"Hindsight Experience Replay"

Link: https://arxiv.org/abs/1707.01495

"Continuous control with deep reinforcement learning"

Link: https://arxiv.org/abs/1509.02971

"Sim-to-Real Transfer of Robotic Control with Dynamics Randomization"

Link: https://arxiv.org/abs/1710.06537

"Meta Learning Shared Hierarchies"

Link: https://arxiv.org/abs/1710.09767

"Temporal Difference Learning and TD-Gammon" (1995)

Link: https://www.csd.uwo.ca/~xling/cs346a/extra/tdgammon.pdf

"Evolving Virtual Creatures" (Karl Sims, 1994)

Link: https://dl.acm.org/doi/10.1145/192161.192167

"Emergent Complexity via Multi-Agent Competition"

Link: https://arxiv.org/abs/1710.03748

"Deep reinforcement learning from human preferences"

Link: https://arxiv.org/abs/1706.03741

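Additional items:

Work by Yann LeCun and colleagues, who pioneered the practical application of CNNs: "Gradient-Based Learning Applied to Document Recognition"

Link: https://www.cs.princeton.edu/courses/archive/spr08/cos598B/Lectures/LeCunEtAl.pdf

Work by Ian Goodfellow and colleagues, whose generative adversarial networks (GANs) dominated image generation for years: "Generative Adversarial Networks"

Link: https://arxiv.org/pdf/1406.2661

Work by Demis Hassabis and colleagues on deep reinforcement learning and AlphaFold (Hassabis shared the 2024 Nobel Prize in Chemistry for AlphaFold's protein structure prediction): "Human-level control through deep reinforcement learning" and "AlphaFold at CASP13"

Link (DQN paper): https://storage.googleapis.com/deepmind-media/dqn/DQNNaturePaper.pdf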
