Published on November 8, 2024 7:49 PM GMT

Summary

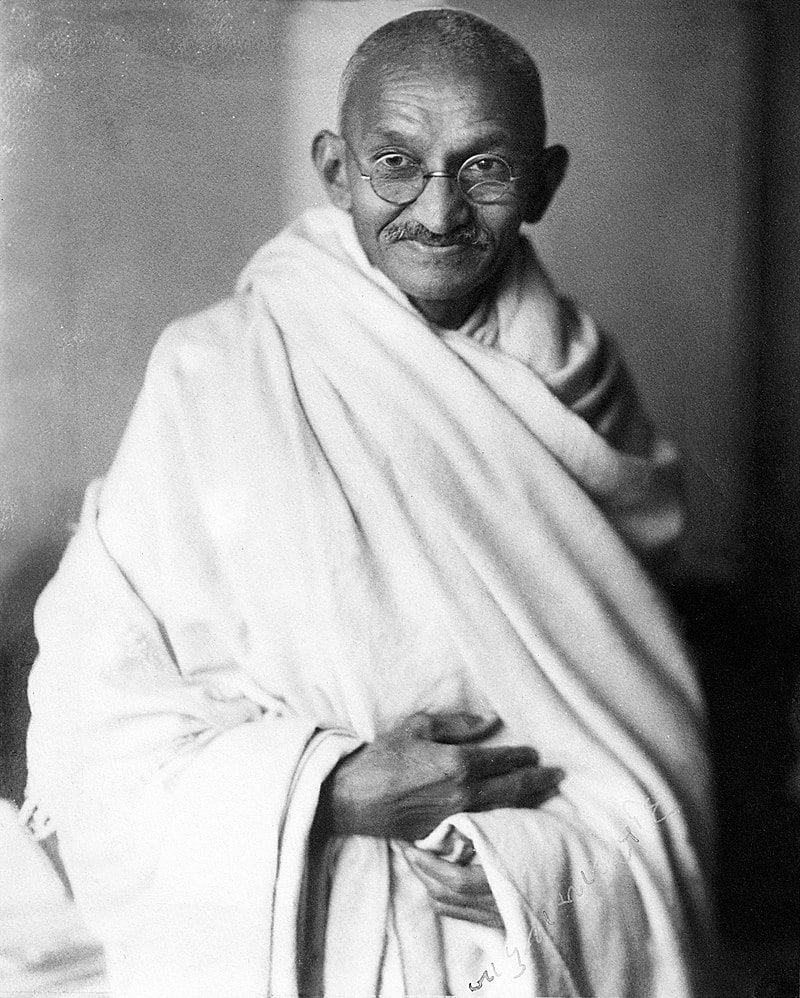

There should be more people like Mahatma Gandhi in the AI safety community, so that AI safety is a source of inspiration for both future and current generations. Without nonviolence and benevolence, we may be unable to advocate for AI safety.

Introduction

Mohandas Karamchand Gandhi, also known as Mahatma Gandhi, was an Indian activist who used nonviolence to support India's independence from Britain. He is now considered one of the biggest sources of inspiration for people trying to do the most good.

(Source: Wikimedia Commons)

Nowadays, it is often argued that Artificial Intelligence is an existential risk. If this is correct, we should ensure that AI safety researchers are able to advocate for safety.

The argument of this post is simple: As AI safety researchers, we should use nonviolence and benevolence to support AI safety and stop the race towards AI.

The problem to solve in AI safety

If AI is an existential risk, then, as AI safety researchers, we ought to advocate for safety. However, convincing AI leaders to follow the advice of the AI safety community is far from easy, as one day we may have to advocate for shutting down AI progress.

Shutting down AI progress is extremely hard. Not only would we have to convince other AI researchers to stop their work, but we would also have to stop Moore's law, which (broadly) conjectures that the number of transistors in an integrated circuit doubles about every two years.

Moore's law implies that, even in the case where we convince our peers to stop research in AI capabilities, AI would continue to get better over time, as computers would get faster and faster.

To stop Moore's law, we would have to convince nearly every industry working on computer chips to stop making more efficient chips. As Moore's law is one of the main drivers of today's economy, the challenge seems extremely hard.

This challenge seems so hard that I think the amount of activism required to solve it may be as big as the amount of activism required for problems like climate change and animal farming.

To solve this problem, we therefore need to work on it before it arises, despite our uncertainty about whether it will arise at all. That is, we need to argue for the shutdown of AI progress and the end of Moore's law right now.

But how can we start doing that amount of activism? Although some may argue that violence is the solution, I believe that the answer is the opposite: we should use nonviolence and benevolence as the main principles of AI safety activism.

To do so, we may need to learn from Mahatma Gandhi, as he is often considered the embodiment of nonviolence and benevolence. Furthermore, his advocacy turned out to be very effective.

What could we learn from Mahatma Gandhi?

Suppose that someone is taking a morally impermissible action (e.g. developing larger and larger AI systems when we are supposed to slow down). Who would be able to bring this immoral action to light? Here, expertise in the domain (e.g. being a prominent AI safety researcher) is not enough.

The person able to correct the wrong beliefs of AI leaders is the one who has both the moral authority and the expertise to do so. If you are very good at AI safety but no one wants to listen to you, your activism will be ineffective.

Mahatma Gandhi understood well that nonviolence and benevolence are the most powerful methods of communication. In fact, he called this approach satyagraha (satya = truth, which for Gandhi implied love; agraha = firmness or force). He later created the Sabarmati Ashram, where he taught satyagraha to his followers, the satyagrahis.

The most famous example of civil disobedience by satyagrahis is the Salt March of 1930. In 1882, the British Salt Act was put in place, which forbade Indians from producing or selling salt, in order to ensure they paid for British salt instead. To protest this law, Mahatma Gandhi, followed by 78 satyagrahis, walked 387 kilometers over 24 days to the beach at Dandi in order to break the salt law.

(Source: Wikimedia Commons)

The Salt March immediately received international attention. Thousands of Indians joined the march, to the point that the crowd was three kilometers long.

It is hard to tell how much the Salt March contributed to the success of India's independence. However, one thing everyone has to agree on is that Gandhi's choice to use satyagraha as the main principle of his activism was surely a good idea.

During our advocacy for AI safety, we should ensure that our activism works and convinces the leaders of AI. Therefore, we ought to consider satyagraha as the main principle of our activism.

While organizing an Alignment March is not easy, it is certainly possible. Although society has changed a lot since the era of Gandhi, people still organize marches. For instance, Robin Greenfield, an environmental activist, is currently taking a 1,600-mile walk across America.

As AI safety researchers, we have a moral duty to ensure we are an example for current and future generations, as otherwise no one else will help us solve the problems raised by AI. I think that organizing an Alignment March is a very good way to fight against the progress in AI capabilities and to ask for more AI safety.
