The advent of generative AI has supersized the appetite for GPUs and other forms of accelerated computing. To help companies scale up their accelerated compute investments in a predictable manner, GPU giant Nvidia and several server partners have borrowed a page from the world of high performance computing (HPC) and unveiled the Enterprise Reference Architectures (ERA).

Large language models (LLMs) and other foundation models have triggered a gold rush for GPUs, and Nvidia has arguably been the biggest beneficiary. In 2023, the company shipped 3.76 million data center GPUs, more than 1 million more than in 2022. That growth hasn’t eased in 2024, as companies continue to scramble for GPUs to power GenAI. The demand has propelled Nvidia to become the most valuable company in the world, with a market capitalization of $3.75 trillion.

Nvidia launched its ERA program today against this backdrop of a mad scramble to scale up compute to build and serve GenAI applications. The company’s goal with ERA is to provide a blueprint that helps customers scale up their accelerated compute infrastructure in a predictable, repeatable manner that minimizes risk and maximizes results.

Nvidia says the ERA program will accelerate time to market for server makers while boosting performance, scalability, and manageability. The approach also bolsters security while reducing complexity, Nvidia says. So far, Nvidia has ERA agreements in place with Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro, with more server makers expected to join the program.

“By bringing the same technical components from the supercomputing world and packaging them with design recommendations based on decades of experience,” the company says in a white paper on ERA, “Nvidia’s goal is to eliminate the burden of building these systems from scratch with a streamlined approach for flexible and cost-effective configurations, taking the guesswork and risk out of deployment.”

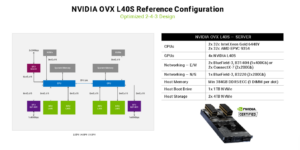

One of the Nvidia ERA reference configurations (Source: Nvidia ERA Overview whitepaper)

The ERA approach leverages certified server configurations of GPUs, CPUs, and network interface cards (NICs) that Nvidia says are “tested and validated to deliver performance at scale.” These include the Nvidia Spectrum-X AI Ethernet platform and Nvidia BlueField-3 DPUs, among others.

The ERA is tailored toward large-scale deployments ranging from four to 128 nodes and containing anywhere from 32 to 1,024 GPUs, according to Nvidia’s white paper. This is the sweet spot where the company sees enterprises turning their data centers into “AI factories.” It also sits below the company’s existing NCP Reference Architecture, which is designed for larger-scale foundation model training, starting at a minimum of 128 nodes and scaling up to 100,000 GPUs.

ERA defines several design patterns, depending on the size of the cluster. For instance, Nvidia’s “2-4-3” pattern uses a 2U compute node containing two CPUs, up to four GPUs, and up to three NICs. Nvidia says this can work on clusters ranging from eight to 96 nodes. Alternatively, the “2-8-5” pattern calls for 4U nodes equipped with two CPUs along with up to eight GPUs and five NICs. This pattern scales from four to 64 nodes in a cluster, Nvidia says.
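The pattern names appear to encode CPUs, GPUs, and NICs per node, which matches the component counts Nvidia gives for each. As a back-of-the-envelope sketch (our own illustration, not an Nvidia tool), the Python below encodes the two patterns as described and multiplies out the per-node counts, treating the “up to” figures as per-node maxima. The names `DesignPattern`, `gpu_range`, and `cluster_totals` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignPattern:
    """Per-node component counts plus the cluster sizes quoted for a pattern.

    Hypothetical helper for illustration; assumes the name reads CPUs-GPUs-NICs.
    """
    name: str
    chassis_u: int   # server height in rack units (2U or 4U)
    cpus: int        # CPUs per node
    gpus: int        # maximum GPUs per node
    nics: int        # maximum NICs per node
    min_nodes: int   # smallest quoted cluster size
    max_nodes: int   # largest quoted cluster size

    def gpu_range(self) -> tuple:
        """Total GPUs at the smallest and largest quoted cluster sizes."""
        return self.min_nodes * self.gpus, self.max_nodes * self.gpus

    def cluster_totals(self, nodes: int) -> dict:
        """Component totals for a cluster of `nodes` identical, fully populated nodes."""
        if not self.min_nodes <= nodes <= self.max_nodes:
            raise ValueError(
                f"{self.name} is quoted for {self.min_nodes}-{self.max_nodes} nodes, not {nodes}"
            )
        return {
            "cpus": nodes * self.cpus,
            "gpus": nodes * self.gpus,
            "nics": nodes * self.nics,
        }


# The two patterns as described in the article.
PATTERNS = [
    DesignPattern("2-4-3", chassis_u=2, cpus=2, gpus=4, nics=3, min_nodes=8, max_nodes=96),
    DesignPattern("2-8-5", chassis_u=4, cpus=2, gpus=8, nics=5, min_nodes=4, max_nodes=64),
]

for p in PATTERNS:
    # Sanity check on the assumed naming convention: CPUs-GPUs-NICs.
    assert p.name == f"{p.cpus}-{p.gpus}-{p.nics}"
    low, high = p.gpu_range()
    print(f"{p.name}: {p.min_nodes}-{p.max_nodes} nodes -> {low}-{high} GPUs")
```

At the quoted limits, this arithmetic puts the 2-4-3 pattern at 32 to 384 GPUs and the 2-8-5 pattern at 32 to 512 GPUs; both start at 32 GPUs, the bottom of the range cited in Nvidia’s white paper. For example, a 16-node 2-8-5 cluster would hold 32 CPUs, 128 GPUs, and 80 NICs if every node is fully populated.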

Partnering with server makers on proven architectures for accelerated compute helps move customers toward their goal of building AI factories quickly and securely, according to Nvidia.

“The transformation of traditional data centers into AI Factories is revolutionizing how enterprises process and analyze data by integrating advanced computing and networking technologies to meet the substantial computational demands of AI applications,” the company says in its white paper.

This article first appeared on sister site BigDATAwire.