Last week, I caught the tail end of a trend going around - a "meme prompt," so to speak, for ChatGPT:

From all of our interactions what is one thing that you can tell me about myself that I may not know about myself

The original post (which currently has 4.3 million views) generated thousands of replies and retweets. Many were impressed with ChatGPT's flattery, or even a little spooked by how accurately it "knew" them.

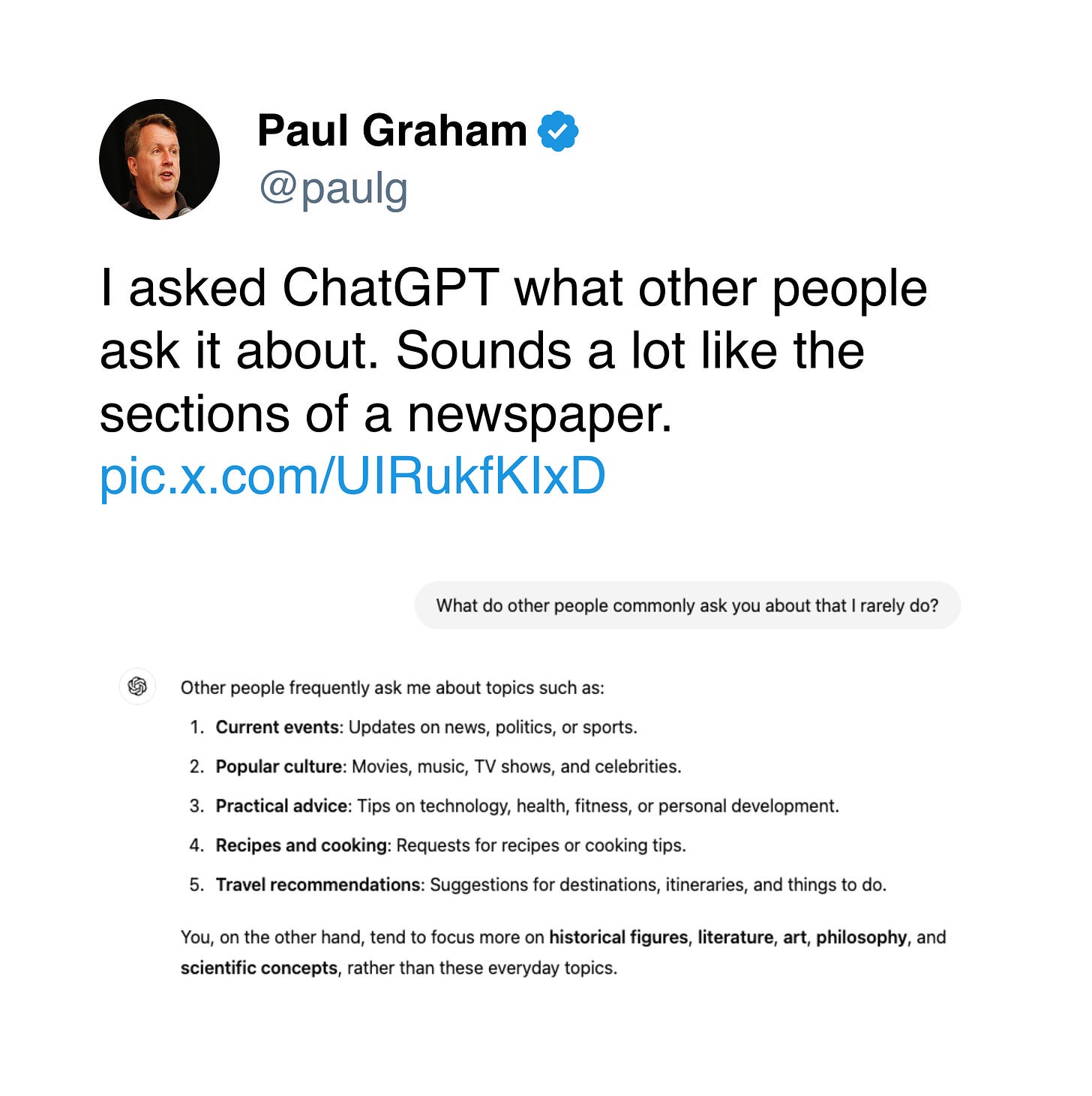

And from the initial premise, other users started exploring what else ChatGPT might be able to tell them about the world. Paul Graham, in particular, asked ChatGPT about other people's usage:

There's just one thing: that's not how it works! That's not how any of this works!

The gap between what AI can actually do and what users think it can do isn't just a knowledge problem – it's also a design failure.

We don’t need no (AI) education

Over the past weeks and months, I've (for better or worse) become known as the "AI guy"[2] in my social circles. When I catch up with friends who know about my Substack, they'll usually bring up a story or question about AI.

But it's also given me some outside perspectives on what people think and know in this domain. For example, in 90% of cases, "AI" means "chatbots" in people's minds. Image and speech generation tend not to be on the radar, to say nothing of products that don't have a chatbot-like UX.

I can also tell you the most common misconceptions people have about AI. The top two? Believing that by default, a chatbot will be able to 1) access the internet and 2) learn about you over time.

In large part, I think it's pretty forgivable to have these misconceptions. The tech industry has spent untold millions (if not billions) advertising "smart" devices that promise to learn from your usage and improve. If my coffee scale is intelligent enough to know when to reorder more beans, shouldn't ChatGPT be able to learn way more about me?

What's even more frustrating about these misconceptions (from my perspective, anyway) is that they're not totally wrong. In some cases, chatbots absolutely can and do 1) access the internet and 2) learn about you over time!

Here's how that works with ChatGPT (in case you don't already know):

- GPT-4, GPT-4o, and GPT-4o mini can access the internet - if the browsing setting is enabled for new chats.
- GPT-3.5, o1-preview, and o1-mini can't access the internet.
- All ChatGPT models can use custom instructions to personalize your output - if you've provided them.
- Some ChatGPT models (it's unclear which) will save "memories" based on your conversations - if memories are enabled for your account and ChatGPT's memories aren’t full.
- Exactly zero ChatGPT models are capable of referencing details of conversations with other people.

Try explaining this to someone who occasionally uses the free tier of ChatGPT and is barely aware that different model versions exist - it's a herculean task. And this is just for a single company, at a single point in time! I fully assume that this explanation will be wrong in some way six months from now.
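To make the tangle of conditions concrete, here is a minimal Python sketch of the rules listed above. It is only a snapshot of this post's description, not anything from OpenAI: the `ChatSettings` class, the lowercase model labels, and every function name are hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass

# Models the list above describes as able to browse (when browsing is enabled).
# The lowercase labels are illustrative, not official API identifiers.
BROWSING_MODELS = {"gpt-4", "gpt-4o", "gpt-4o-mini"}


@dataclass
class ChatSettings:
    """Hypothetical bundle of the settings that change what a chat can do."""
    model: str
    browsing_enabled: bool = False
    custom_instructions: str = ""
    memory_enabled: bool = False
    memory_full: bool = False


def can_browse(s: ChatSettings) -> bool:
    # Needs both a browsing-capable model and the browsing setting.
    return s.model in BROWSING_MODELS and s.browsing_enabled


def can_personalize(s: ChatSettings) -> bool:
    # Custom instructions apply to every model, but only if the user wrote some.
    return bool(s.custom_instructions.strip())


def may_save_memories(s: ChatSettings) -> bool:
    # It's unclear which models support memories, so this only checks the
    # account-level conditions. It does not mean anything has been saved yet.
    return s.memory_enabled and not s.memory_full


def can_see_other_users(s: ChatSettings) -> bool:
    # No model can reference other people's conversations.
    return False


def has_personal_context(s: ChatSettings) -> bool:
    # The "tell me about myself" prompt has something real to draw on
    # only if at least one of these holds.
    return can_personalize(s) or may_save_memories(s)


free_user = ChatSettings(model="gpt-3.5")
power_user = ChatSettings(
    model="gpt-4o",
    browsing_enabled=True,
    custom_instructions="I'm a product designer.",
    memory_enabled=True,
)

print(can_browse(free_user))             # False
print(has_personal_context(free_user))   # False
print(has_personal_context(power_user))  # True
print(can_see_other_users(power_user))   # False
```

Five settings and a model name already produce a lot of combinations, and nothing in the chat window tells the user which combination they're in.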

Reading the fine print

The problem is that LLM-makers (and the industry as a whole) have done a pretty poor job of educating users on what they should expect from their products. The original ChatGPT and Claude would hallucinate wildly without any warning; the current versions just mention "ChatGPT/Claude can make mistakes" in the fine print.

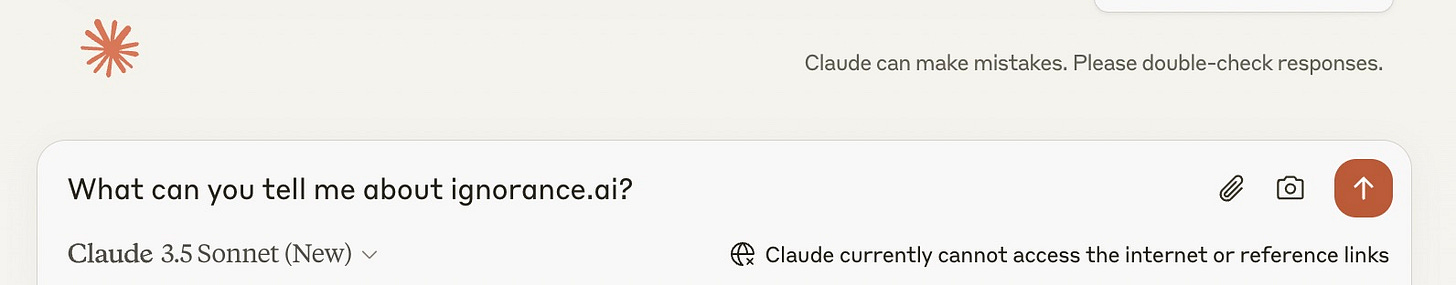

Claude carries a similar warning, but if you type in something resembling a domain name, it will also ever-so-subtly let you know that it can't currently access the internet.

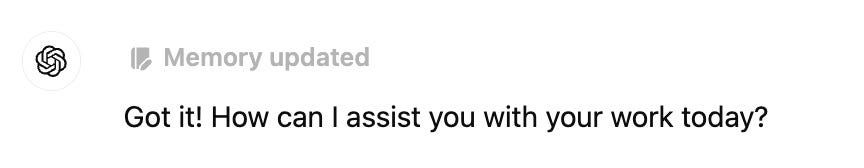

And when ChatGPT creates memories, the only indication is a light-gray-on-lighter-gray text element. While hovering over the text does at least expose a "manage memories" button, there isn't much indication of what memories actually do or their limitations.

So to recap, the "tell me about myself" ChatGPT prompt working as intended depends on a very narrow set of circumstances. The problem is, outside of those circumstances, ChatGPT will happily hallucinate a plausible answer anyway, with the user none the wiser.

It's why I found Paul Graham's tweet frustrating: since ChatGPT is trained to be a helpful assistant, asking a question like his doesn't result in any sort of warning that it's not actually possible from ChatGPT's perspective. PG is a smart guy - and if he can be fooled by something like this, then it doesn't bode well for the rest of us.

AI affordances and synthetic signifiers

These widespread misconceptions about AI capabilities aren't just a matter of user error – they point to a fundamental challenge in designing AI interfaces. In the classic book *The Design of Everyday Things*, Donald Norman explores effective design principles, focusing on how (physical) products can be made more user-friendly.

He discusses two related concepts in particular: "affordances" and "signifiers." Affordances are potential actions that an object/interface allows, i.e., what a tool or product is actually capable of. On the other hand, signifiers are indicators that communicate how to use an object/interface - cues that help users discover and understand affordances.

For physical products, an affordance would be a door handle (the door can be opened), and a signifier would be a push/pull sign next to the door (indicating how to open it). For digital products, an affordance would be external links (other content can be accessed), and a signifier would be blue, underlined text (indicating how to identify a link).
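One way to picture the relationship is as two sets: what a product can do, and what its interface signals it can do. The sketch below is a toy model under that assumption; the function name and the capability labels are made up for illustration and don't come from Norman's book or any real product.

```python
from typing import Dict, List, Set


def signifier_gaps(affordances: Set[str], signifiers: Set[str]) -> Dict[str, List[str]]:
    """Compare what a product can do with what its interface signals."""
    return {
        # Real capabilities the user is never told about.
        "hidden": sorted(affordances - signifiers),
        # Things the interface seems to promise but the product can't do.
        "misleading": sorted(signifiers - affordances),
    }


# Hypothetical chatbot: capability labels are illustrative only.
chatbot_affordances = {"draft_text", "follow_custom_instructions", "save_memories"}
chatbot_signifiers = {"draft_text", "recall_other_users_chats"}

gaps = signifier_gaps(chatbot_affordances, chatbot_signifiers)
print(gaps["hidden"])      # ['follow_custom_instructions', 'save_memories']
print(gaps["misleading"])  # ['recall_other_users_chats']
```

A well-designed product keeps both lists short: few capabilities go unannounced, and nothing is signaled that can't be delivered.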

AI products like ChatGPT have almost no affordances or signifiers when you first engage with them. The only cues you get are a few vague buttons suggesting actions like "make a plan" or "get advice."

Although the minimalist aesthetic can be lovely, building a UX without corresponding product cues creates a more frustrating experience when things don't work perfectly (which, in the case of LLMs, happens often). When something breaks, users are forced to deal with mismatched expectations or a leaky abstraction.

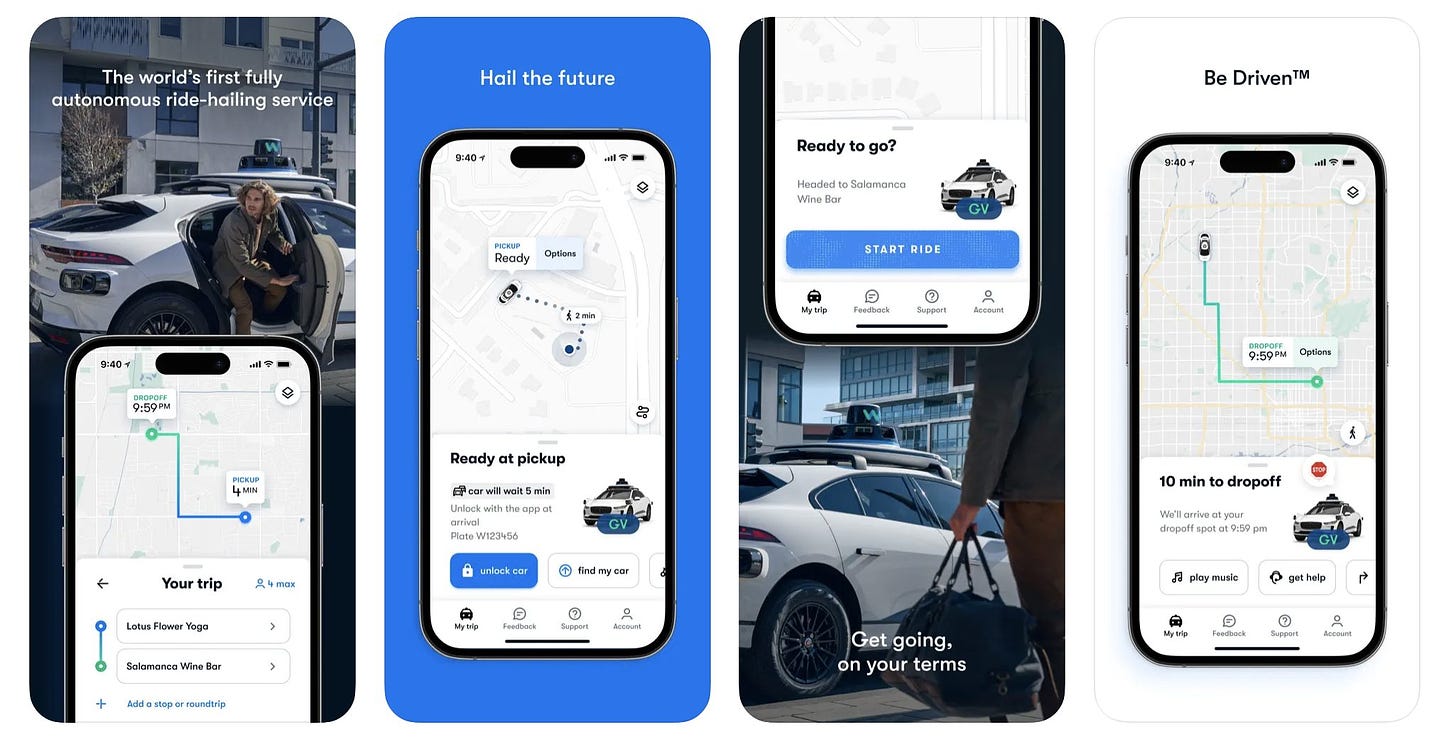

Some AI products are pioneering better (or at least more familiar) approaches - take Waymo, for instance. The overall UI is recognizable to anyone who has booked an Uber or Lyft, but the app also goes out of its way to help you interact with a driverless car for the first time.

Ultimately, we're going to have to invent new design patterns to fully express what it means to build an AI-native product[3]. Some, like GitHub Copilot's "ghost text," will be pretty intuitive. Others, like working directly with embeddings or latent spaces, might come with a bit of a learning curve. Many aren't going to stand the test of time.

But that's precisely what makes this moment so exciting. With each new AI capability – from chat interfaces to code completion to autonomous vehicles – we have the opportunity to experiment with new ways of building transparent and intuitive systems. Perhaps then, instead of viral trends about AI mind-reading, we'll see users confidently pushing these tools to their actual limits.

[2] It's... fine. There are worse "guys" to be known as.

[3] I suspect general-purpose chatbots like ChatGPT and Claude will struggle the most with incorporating affordances and signifiers. Partially because we don't entirely know what the latest models are good at to begin with - we're finding new use cases and edge cases all the time.

But also because their ultimate product vision *is* to have something capable of handling whatever you throw at it - the elusive dream of AGI. How do you indicate what a system is capable of when its goal is to be capable at everything?