Hey everyone! Happy 4th of July to everyone who celebrates! I celebrated today by having an intimate conversation with 600 of my closest X friends. Joking aside, today is a celebratory episode, the 52nd consecutive weekly ThursdAI show! I've been doing this as a podcast for a year now!

Which means there are some of you who've been subscribed for a year. Thank you! Couldn't have done this without you. In the middle of my talk at AI Engineer (I still don't have the video!) I had to plug ThursdAI, so I asked the 300+ person audience who listens to ThursdAI, and I saw a LOT of hands go up, which is honestly still quite humbling. So again, thank you for tuning in, listening, subscribing, learning together with me and sharing with your friends!

This week, we covered a new (soon to be) open source voice model from KyutAI and a LOT of open source LLMs, from InternLM, Cognitive Computations (Eric Hartford joined us) and Arcee AI (Lukas Atkins joined as well). We also have a deep dive into GraphRAG with Emil Eifrem, CEO of Neo4j (who shares why it was called Neo4j in the first place, and that he's a ThursdAI listener, whaaat?). This is definitely a conversation you don't want to miss, so tune in, and read the breakdown below:

TL;DR of all topics covered:

Voice & Audio

KyutAI releases Moshi - first ever 7B end to end voice capable model (Try it)

Open Source LLMs

Microsoft Updated Phi-3-mini - almost a new model

InternLM 2.5 - best open source model under 12B on Hugging Face (HF, Github)

Microsoft open sources GraphRAG (Announcement, Github, Paper)

OpenAutoCoder-Agentless - SOTA on SWE Bench - 27.33% (Code, Paper)

Arcee AI - Arcee Agent 7B - from Qwen2 - Function / Tool use finetune (HF)

LMsys announces RouteLLM - a new Open Source LLM Router (Github)

DeepSeek Chat got a significant upgrade (Announcement)

This week's Buzz

New free Prompts course from WandB in 4 days (pre sign up)

Big CO LLMs + APIs

Perplexity announces their new pro research mode (Announcement)

X rolled out a "Grok Analysis" button, it was BAD in "fun mode", and the rollout was then paused

Figma pauses the rollout of their AI text to design tool "Make Design" (X)

Vision & Video

Cognitive Computations drops DolphinVision-72b - VLM (HF)

Chat with Emil Eifrem - CEO of Neo4j - about GraphRAG, AI Engineer

Voice & Audio

KyutAI Moshi - a 7B end to end voice model (Try It, See Announcement)

Seemingly out of nowhere, another French AI juggernaut decided to drop a major announcement. The company is called KyutAI and is backed by Eric Schmidt. In a press release back in November of 2023 they called themselves "the first European private-initiative laboratory dedicated to open research in artificial intelligence". They have quite a few rockstar co-founders, ex DeepMind and Meta AI, and have Yann LeCun on their science committee.

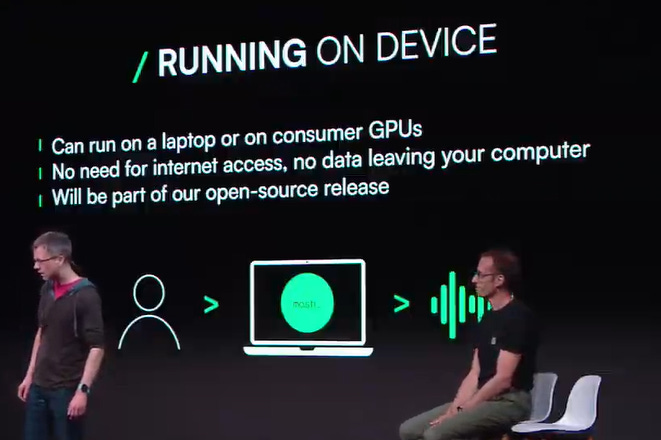

This week they showed their first, and honestly quite mind-blowing, release, called Moshi (Japanese for Hello, Moshi Moshi), which is an end to end voice and text model, similar to the GPT-4o demos we've seen, except this one is 7B parameters and can run on your Mac!

The utility of the model right now is not the greatest, not remotely close to anything resembling the amazing GPT-4o (which was demoed live to me and all of AI Engineer by Romain Huet), but Moshi shows very, very impressive stats!

In only 6 months or so of work, a small team trained an LLM (Helium 7B) and an audio codec (Mimi), and built a Rust inference stack and a lot more, to deliver insane performance.

Model latency is 160ms and mic-to-speakers latency is 200ms, which is so fast it seems like it's too fast. The demo often responds faster than I'm able to finish my sentence, and it results in an uncanny, "reading my thoughts" type feeling.

The most important part is this though, a quote from KyutAI's post after the announcement:

Developing Moshi required significant contributions to audio codecs, multimodal LLMs, multimodal instruction-tuning and much more. We believe the main impact of the project will be sharing all Moshi’s secrets with the upcoming paper and open-source of the model.

I'm really looking forward to seeing how this tech can be applied to the incredible open source models we already have out there! Speaking to our LLMs is now officially here in open source, way before we got GPT-4o, and it's exciting!

Open Source LLMs

Microsoft stealth-updates Phi-3 Mini to make it almost a new model

So stealth, in fact, that I didn't even have this update in my notes for the show. Thanks to the incredible community (Bartowski, Akshay Gautam) for making sure we didn't miss this, because it's huge.

The model used additional post-training data leading to substantial gains on instruction following and structure output. We also improve multi-turn conversation quality, explicitly support <|system|> tag, and significantly improve reasoning capability

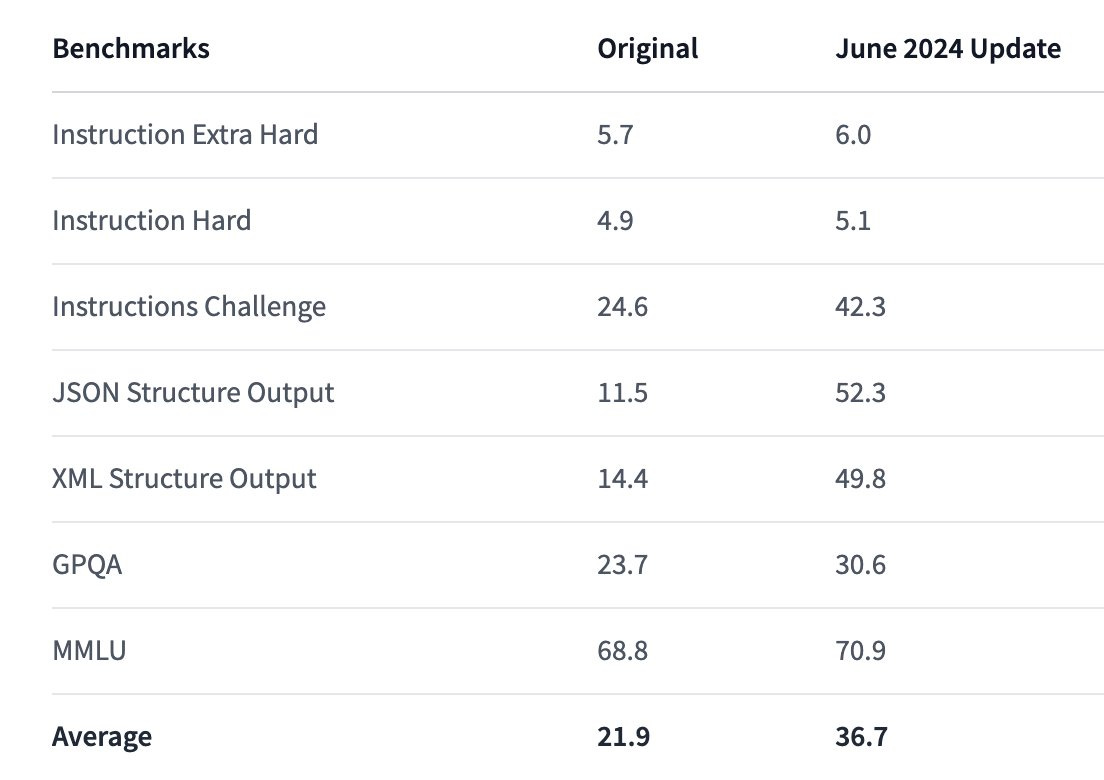

The Phi-3 June update is quite significant across the board. Just look at some of these scores: a 354.78% improvement in JSON structure output and 30% on GPQA.

But also specifically for coding: a 33→93 jump in Java, 33→73 in TypeScript and 27→85 in Python! These are just incredible numbers, and I definitely agree with Bartowski here, there's enough here to call this a whole new model rather than a "seasonal update".
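To put those coding jumps on the same footing as the percentage figures, here is a quick back-of-the-envelope sketch. It assumes the before/after numbers quoted above are plain benchmark scores, and `relative_gain` is just a helper name I made up for illustration:

```python
def relative_gain(before: float, after: float) -> float:
    """Percentage improvement of `after` over `before`."""
    return (after - before) / before * 100


# Before/after coding scores as quoted in this post.
scores = {"Java": (33, 93), "TypeScript": (33, 73), "Python": (27, 85)}

# Relative gain per language, rounded to one decimal place.
gains = {lang: round(relative_gain(b, a), 1) for lang, (b, a) in scores.items()}

for lang, gain in gains.items():
    before, after = scores[lang]
    print(f"{lang}: {before} -> {after} (+{gain}%)")
```

By this measure every one of the three coding scores more than doubled.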

Qwen-2 is the star of the show right now

Week in and week out, ThursdAI seems to be the watercooler for the best finetuners in the community to come, hang, share notes, and announce their models. A month after Qwen-2 was announced on ThursdAI stage live by friend of the pod and Qwen dev lead Junyang Lin, and a week after it re-took number 1 on the revamped open LLM leaderboard on HuggingFace, we now have great finetunes on top of Qwen-2.

"Qwen-2 is the star of the show right now. Because there's no better model. This is like GPT 4 level. It's Open Weights GPT 4. We can do what we want with it, and it's so powerful, and it's multilingual, and it's everything, it's like the dream model. I love it"

- Eric Hartford, Cognitive Computations

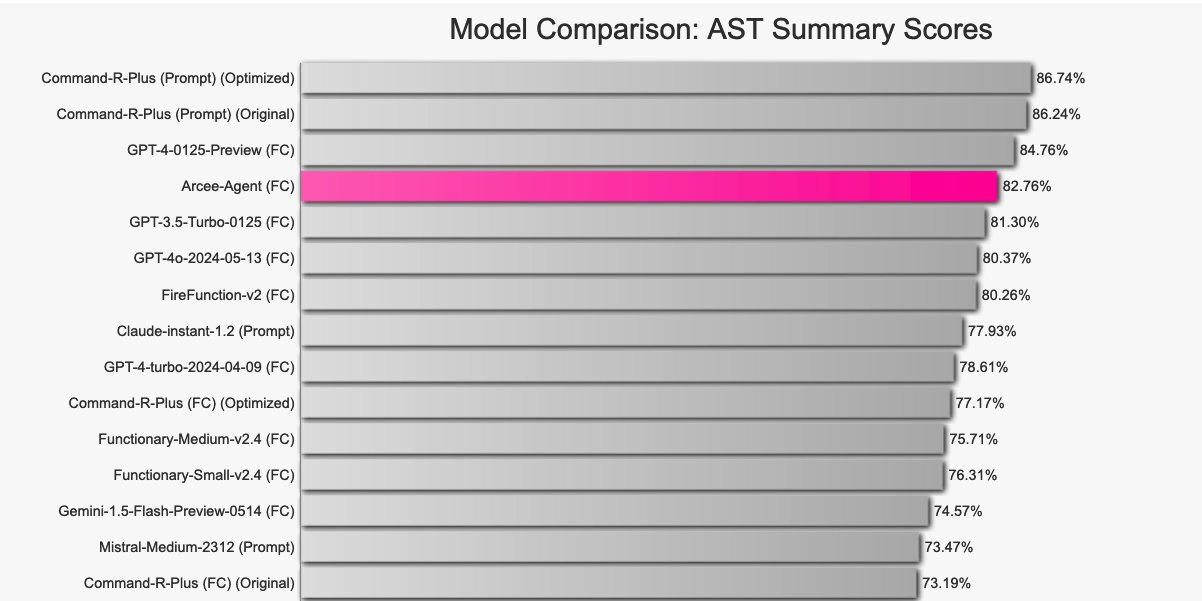

We had two Qwen-2 based finetunes and their authors on the show this week. First was Lukas Atkins from Arcee AI (the company behind MergeKit), who released Arcee Agent, a 7B Qwen-2 finetune/merge focused specifically on tool use and function calling.

We also had a chat with Eric Hartford from Cognitive Computations (which Lukas previously participated in) about Dolphin Vision, a 72B parameter VLM built on top of Qwen-2 (trained by StableQuan, available on the Hub). It's likely the biggest open source VLM that we've seen so far.

The most exciting part about it, is Fernando Neta's "SexDrugsRockandroll" dataset, which supposedly contains, well.. a lot of uncensored stuff, and it's perfectly able to discuss and analyze images with mature and controversial content.

InternLM 2.5 - SOTA open source under 12B with 1M context (HF, Github)

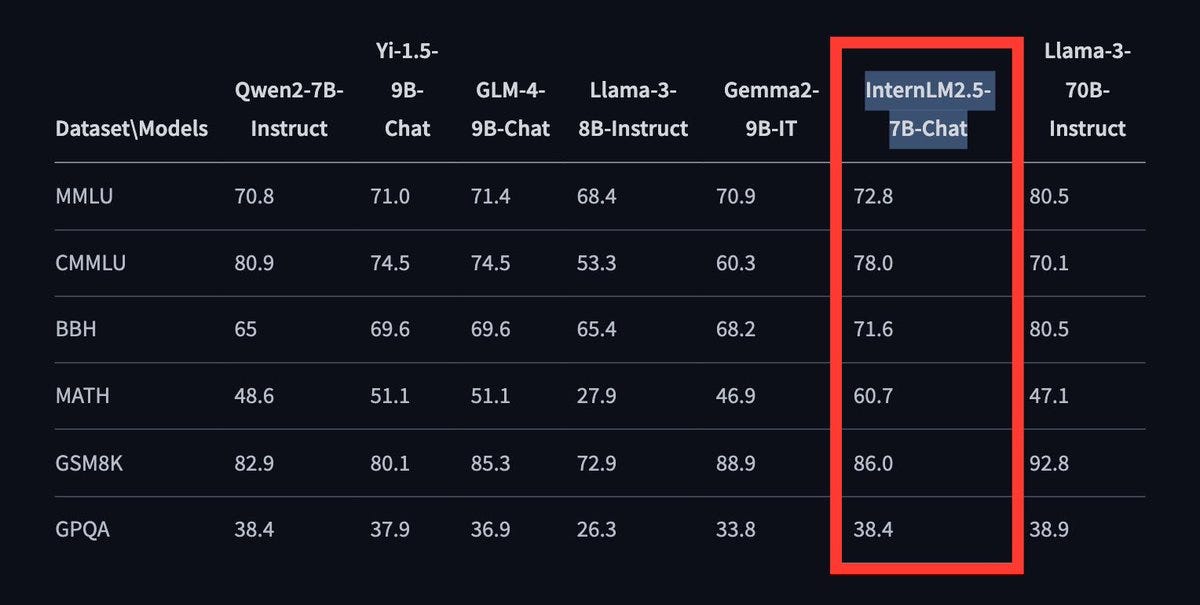

The folks at Shanghai AI Lab released InternLM 2.5 7B and a chat version, along with a whopping 1M context window extension. These metrics are ridiculous, beating Llama-3 8B on literally every metric on the new HF leaderboard, and even beating Llama-3 70B on MATH and coming close on GPQA!

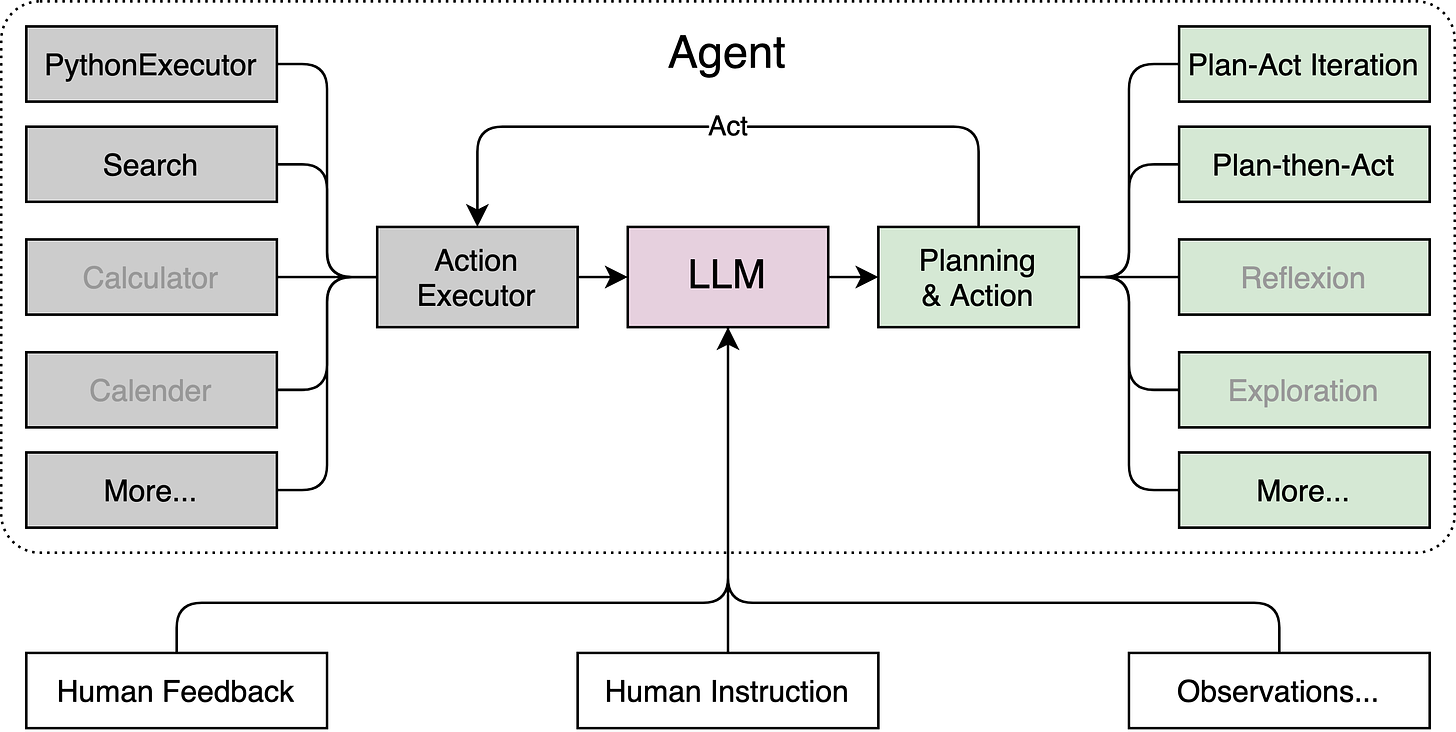

The folks at Intern not only released a beast of a model, but also shipped significantly improved tool use capabilities with it, including their own agentic framework called Lagent, which comes with a Code Interpreter (Python execution), search capabilities, and of course the ability to plug in your own tools.

How will you serve 1M context in production, you ask? Well, these folks ALSO open sourced LMDeploy, "an efficient, user-friendly toolkit designed for compressing, deploying, and serving LLM models". It has been around for a while, but now of course supports this new model, and handles dynamic NTK, some offloading of context, etc.

So an incredible model + tools release, can't wait to play around with this!

This week's Buzz (What I learned with WandB this week)

Hey, did you know we at Weights & Biases have free courses? While some folks ask you for a LOT of money for basic courses, at Weights & Biases, they are... you guessed it, completely free! And a lot of effort goes into recording and building the agenda, so I'm happy to announce that our "Developer's Guide to LLM Prompting" course is going to launch in 4 days!

Delivered by my colleague Anish (who's just an amazing educator) and Teodora from AutogenAI, you will learn everything prompt building related, and even if you are a seasoned prompting pro, there will be something for you there! Pre-register for the course HERE

Big CO LLMs + APIs

How I helped roll back an XAI feature and Figma rolled back theirs

We've covered Grok (with a K this time) from XAI multiple times, and while I don't use its chat interface that much, or the open source model, I do think they have a huge benefit in having direct access to real time data from the X platform.

Given that I basically live on X (to be able to deliver all this news to you), I started noticing a long-promised Grok Analysis button showing up under some posts, first on mobile, then on the web version of X.

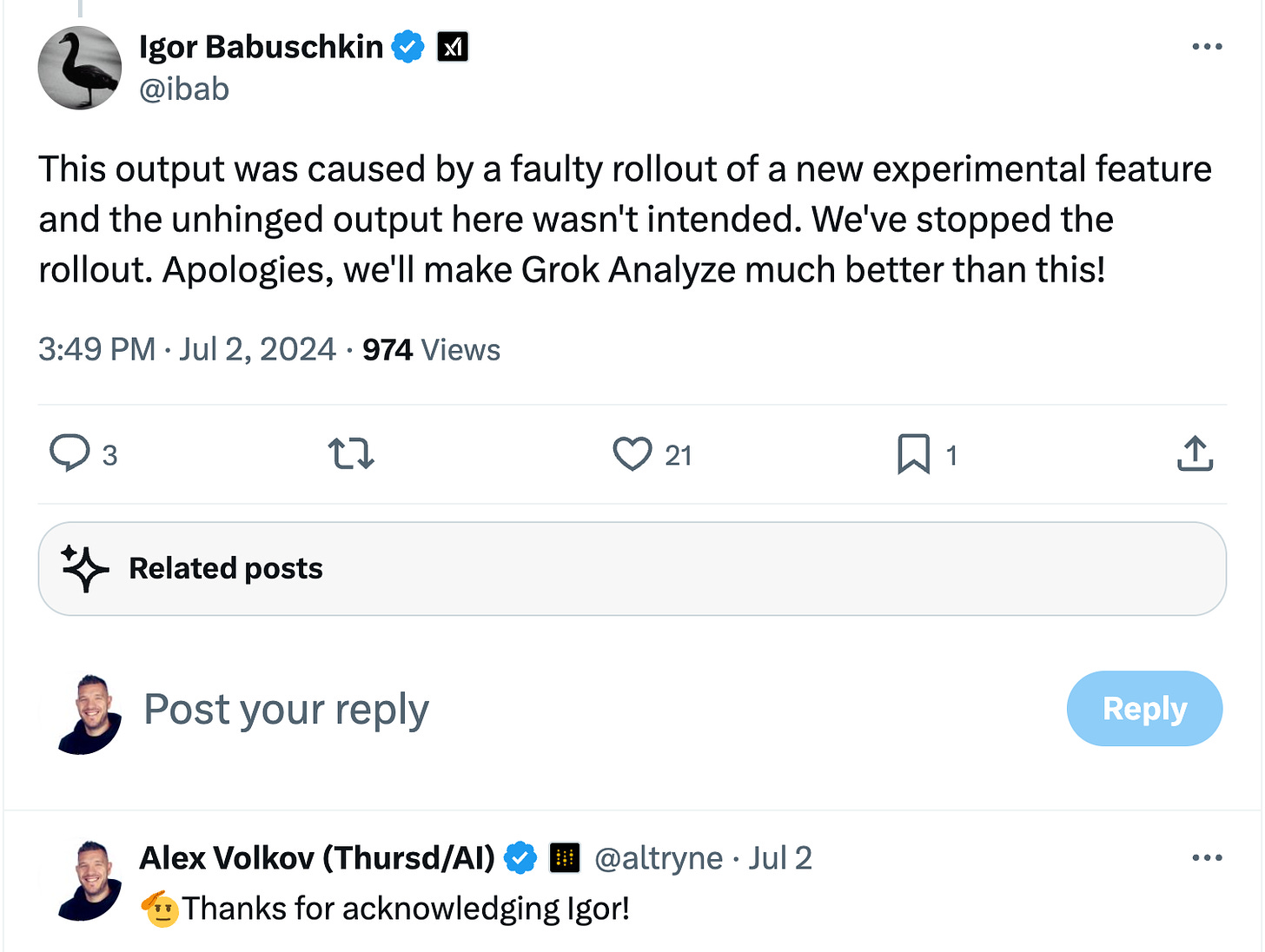

Of course I had to test it, and whoa, I was honestly shocked at just how unhinged and profanity laced the analysis was.

Now I'm not easily shocked, I've seen jailbroken LLMs before, and I've tried to get ChatGPT to say curse words multiple times, but it's one thing when you expect it and a completely different thing when a billion dollar company releases a product that answers... well like this:

Luckily Igor Babuschkin (co-founder of XAI) noticed and the rollout was paused, so it looks like I helped red team Grok!

Figma pauses AI "make design" feature

Another AI feature was paused by a big company after going viral on X (what is it about X specifically?) and this time it was Figma!

In a super viral post, Andy Allen shared a video where he asks Figma's new AI feature, called "Make Design", for a simple "weather app", and what he receives looks almost 100% identical to the iOS weather app!

This was acknowledged by the CEO of Figma and almost immediately paused as well.

GraphRAG... GraphRAG everywhere

Microsoft released a pre-print paper on GraphRAG (2404.16130), which talks about utilizing LLMs to first build and then use graph databases to achieve better accuracy and performance on retrieval tasks such as "global questions directed at an entire text corpus".
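To make the "first build, then use" idea a bit more concrete, here is a toy sketch in plain Python. To be clear, this is NOT Microsoft's implementation: the triples are hand-written stand-ins for what an LLM would extract from text, connected components stand in for real community detection, and `summarize` simply lists facts where a real pipeline would have an LLM write a summary. All function names here are hypothetical.

```python
from collections import defaultdict

# Hypothetical (subject, relation, object) triples. In a real pipeline an LLM
# would extract these from raw text; here they are hand-written stand-ins.
TRIPLES = [
    ("KyutAI", "released", "Moshi"),
    ("Moshi", "built_on", "Helium 7B"),
    ("Arcee AI", "released", "Arcee Agent"),
    ("Arcee Agent", "finetuned_from", "Qwen-2"),
    ("DolphinVision", "finetuned_from", "Qwen-2"),
]


def build_graph(triples):
    """Build an undirected adjacency map over entities."""
    graph = defaultdict(set)
    for subj, _, obj in triples:
        graph[subj].add(obj)
        graph[obj].add(subj)
    return graph


def communities(graph):
    """Connected components, a crude stand-in for real community detection."""
    seen, groups = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node in group:
                continue
            group.add(node)
            stack.extend(graph[node] - group)
        seen |= group
        groups.append(group)
    return groups


def summarize(group, triples):
    """Stand-in for an LLM-written community summary: list the group's facts."""
    return "; ".join(f"{s} {r} {o}" for s, r, o in triples if s in group)


graph = build_graph(TRIPLES)
groups = communities(graph)
summaries = [summarize(g, TRIPLES) for g in groups]

# A "global" question would be answered from these per-community summaries
# rather than from individual text chunks.
for i, text in enumerate(summaries):
    print(f"community {i}: {text}")
```

The point of the toy is the shape of the pipeline: because the summaries cover whole clusters of related entities, a question about the entire corpus can be answered from a handful of summaries instead of hoping the right chunks get retrieved.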

This week, Microsoft open sourced GraphRAG on Github, and I wanted to dive a little deeper into what this actually means, as this is a concept I hadn't heard of before last week, and suddenly it's everywhere.

Last week during AI Engineer, the person who first explained this concept to me (and tons of other folks in the crowd at his talk) was Emil Eifrem, CEO of Neo4j, and I figured he'd be the right person to explain the whole concept in a live conversation to the audience as well, and he was!

Emil and I (and other folks in the audience) had a great, almost 40 minute conversation about the benefits of using Graph databases for RAG, how LLMs unlocked the ability to convert unstructured data into Graph linked databases, accuracy enhancements and unlocks like reasoning over the whole corpus of data, developer experience improvements, and difficulties / challenges with this approach.

Emil is a great communicator, with a deep understanding of this field, so I really recommend listening to this deep dive.

This is it for this week's newsletter, and a wrap on year 1 of ThursdAI as a podcast (this being our 52nd weekly release!)

I'm going on vacation next week, but I will likely still send the TL;DR, so look out for that. Have a great Independence Day and rest of your holiday weekend if you celebrate, and if you don't, I'm sure there will be cool AI things announced by the next time we meet.

As always, appreciate your attention,

Alex