Hey folks, Alex here from Weights & Biases, and this week has been absolutely bonkers. From robots walking among us to rockets landing on chopsticks (well, almost), the future is feeling palpably closer. And if real-world robots and reusable spaceship boosters weren't enough, the open-source AI community has been cooking, dropping new models and techniques faster than a Starship launch. So buckle up, grab your space helmet and noise-canceling headphones (we’ll get to why those are important!), and let's blast off into this week’s AI adventures!

TL;DR and show-notes + links at the end of the post

Robots and Rockets: A Glimpse into the Future

I gotta start with the real-world stuff because, let's be honest, it's mind-blowing. We had Robert Scoble (yes, the Robert Scoble) join us after attending the Tesla We, Robot AI event, reporting on Optimus robots strolling through crowds, serving drinks, and generally being ridiculously futuristic. Autonomous robo-taxis were also cruising around, giving us a taste of a driverless future.

Robert’s enthusiasm was infectious: "It was a vision of the future, and from that standpoint, it succeeded wonderfully." I couldn't agree more. While the market might have had a mini-meltdown (apparently investors aren't ready for robot butlers yet), the sheer audacity of Tesla’s vision is exhilarating. These robots aren't just cool gadgets; they represent a fundamental shift in how we interact with technology and the world around us. And they’re learning fast. Just days after the event, Tesla released a video of Optimus operating autonomously, showcasing the rapid progress they’re making.

And speaking of audacious visions, SpaceX decided to one-up everyone (including themselves) by launching Starship and catching the booster with Mechazilla – their giant robotic chopsticks (okay, technically a launch tower, but you get the picture). Waking up early with my daughter to watch this live was pure magic. As Ryan Carson put it, "It was magical watching this… my kid who's 16… all of his friends are getting their imaginations lit by this experience." That’s exactly what we need - more imagination and less doomerism! The future is coming whether we like it or not, and I, for one, am excited.

Open Source LLMs and Tools: The Community Delivers (Again!)

Okay, back to the virtual world (for now). This week's open-source scene was electric, with new model releases and tools that have everyone buzzing (and benchmarking like crazy!).

Nemotron 70B: Hype vs. Reality: NVIDIA dropped their Nemotron 70B instruct model, claiming impressive scores on certain benchmarks (Arena Hard, AlpacaEval), even suggesting it outperforms GPT-4o and Claude 3.5. As always, we take these claims with a grain of salt (remember Reflection?), and our resident expert, Nisten, was quick to run his own tests. The verdict? Nemotron is good, "a pretty good model to use," but maybe not the giant-killer some hyped it up to be. Still, kudos to NVIDIA for pushing the open-source boundaries. (Hugging Face, Harrison Kinsley evals)

Zamba 2: Hybrid Vigor: Zyphra, in collaboration with NVIDIA, released Zamba 2, a hybrid Sparse Mixture of Experts (SME) model. We had Paolo Glorioso, a researcher from Zyphra, join us to break down this unique architecture, which combines the strengths of transformers and state space models (SSMs). He highlighted the memory and latency advantages of SSMs, especially for on-device applications. Definitely worth checking out if you’re interested in transformer alternatives and efficient inference.

Zyda 2: Data is King (and Queen): Alongside Zamba 2, Zyphra also dropped Zyda 2, a massive 5 trillion token dataset, filtered, deduplicated, and ready for LLM training. This kind of open-source data release is a huge boon to the community, fueling the next generation of models. (X)

Ministral: Pocket-Sized Power: On the one-year anniversary of the iconic Mistral 7B release, Mistral announced two new smaller models – Ministral 3B and 8B. Designed for on-device inference, these models are impressive, but as always, Qwen looms large. While Mistral didn’t include Qwen in their comparisons, early tests suggest Qwen’s smaller models still hold their own. One point of contention: these Ministrals aren't as open-source as the original 7B, which is a bit of a bummer, and the 3B isn't even released anywhere besides their platform. (Mistral Blog)

Entropix (aka Shrek Sampler): Thinking Outside the (Sample) Box: This one is intriguing! Entropix introduces a novel sampling technique aimed at boosting the reasoning capabilities of smaller LLMs. Nisten’s yogurt analogy explains it best: it’s about “marinating” the information and picking the best “flavor” (token) at the end. Early examples look promising, suggesting Entropix could help smaller models tackle problems that even trip up their larger counterparts. But, as with all shiny new AI toys, we're eagerly awaiting robust evals.

Tim Kellogg has a detailed breakdown of this method here
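To make the "look at how unsure the model is before picking a token" idea a bit more concrete, here is a minimal sketch in plain Python. This is not the Entropix code: the function names, the thresholds, and the simple two-way rule (greedy when confident, sample from a flatter distribution when not) are assumptions for illustration, and the real project reportedly uses more signals and more branches.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw logits into probabilities (numerically stable)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy_and_varentropy(probs):
    """Entropy (in nats) and varentropy (variance of the surprisal)."""
    kept = [p for p in probs if p > 0]
    surprisals = [-math.log(p) for p in kept]
    entropy = sum(p * s for p, s in zip(kept, surprisals))
    varentropy = sum(p * (s - entropy) ** 2 for p, s in zip(kept, surprisals))
    return entropy, varentropy


def pick_token(logits, rng, low_entropy=0.5, hot_temperature=1.5):
    """Hypothetical rule: be greedy when confident, explore when unsure."""
    probs = softmax(logits)
    entropy, _ = entropy_and_varentropy(probs)
    if entropy < low_entropy:
        # The distribution is sharply peaked: take the most likely token.
        best = max(range(len(probs)), key=probs.__getitem__)
        return best, "greedy"
    # The distribution is flat: sample at a higher temperature instead.
    hot = softmax(logits, temperature=hot_temperature)
    token = rng.choices(range(len(hot)), weights=hot, k=1)[0]
    return token, "sample"
```

Calling `pick_token([10.0, 0.0, 0.0], random.Random(0))` takes the greedy branch, while a flat input such as `[1.0, 1.0, 1.0]` falls through to sampling. The thresholds here are made up, so treat them as knobs to tune rather than recommended values.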

Gemma-APS: Fact-Finding Mission: Google released Gemma-APS, a set of models specifically designed for extracting claims and facts from text. While LLMs can already do this to some extent, a dedicated model for this task is definitely interesting, especially for applications requiring precise information retrieval. (HF)

OpenAI adds voice to their completion API (X, Docs)

In the last second of the pod, OpenAI decided to grace us with Breaking News!

Not only did they launch their native Windows app, they also added voice input and output to their chat completions API. This seems to be the same model as advanced voice mode (and priced super expensively as well), and the same one used in the RealTime API released a few weeks ago at DevDay.

This is of course a bit slower than RealTime but is much simpler to use, and gives way more developers access to this incredible resource (I'm definitely planning to use this for ... things)

This isn't their "TTS" or "STT" (Whisper) models. No, this is an actual omni model that understands audio natively and also outputs audio natively, allowing for things like "count to 10 super slow".

I've played with it just now (and now it's after 6pm and I'm still writing this newsletter) and it's so, so awesome. I expect it to be huge because the RealTime API is very cumbersome and many people don't really need that complexity.

This week's Buzz - Weights & Biases updates

OK, I wanted to send a completely different update, but what I'll show you instead is that Weave, our observability framework, is now also multimodal!

This couples very well with the new update from OpenAI!

So here's an example built on today's announcement: I'm going to go through the OpenAI example and show you how to use it with streaming so you can get the audio faster, and show you the Weave multimodality as well.

You can find the code for this in this Gist and please give us feedback as this is brand new
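For readers who want the shape of the streaming call without opening the Gist, here is a rough sketch (not the Gist itself, and with Weave logging left out). It assumes the official `openai` Python client, the `gpt-4o-audio-preview` model name, and that streamed chunks carry base64 audio under `delta.audio["data"]`; that last part in particular is an assumption, so check it against the docs. The helper names are made up for this example.

```python
import base64
import io
import wave


def build_audio_request(prompt, voice="alloy"):
    """Keyword arguments for client.chat.completions.create(...)."""
    return {
        "model": "gpt-4o-audio-preview",  # assumed model name
        "modalities": ["text", "audio"],
        # Streaming uses raw PCM rather than a container format like wav.
        "audio": {"voice": voice, "format": "pcm16"},
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }


def pcm16_chunks_to_wav(b64_chunks, sample_rate=24000):
    """Join base64-encoded PCM16 chunks and wrap them in a WAV header."""
    pcm = b"".join(base64.b64decode(chunk) for chunk in b64_chunks)
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)   # mono
        wav.setsampwidth(2)   # 16-bit samples
        wav.setframerate(sample_rate)  # 24 kHz is an assumption
        wav.writeframes(pcm)
    return buffer.getvalue()


def stream_audio(client, prompt):
    """Collect audio from a streaming response; `client` is an openai.OpenAI()."""
    chunks = []
    for event in client.chat.completions.create(**build_audio_request(prompt)):
        if not event.choices:
            continue
        audio = getattr(event.choices[0].delta, "audio", None)
        if not audio:
            continue
        # Assumed chunk shape: a dict (or object) with a base64 "data" field.
        data = audio.get("data") if isinstance(audio, dict) else getattr(audio, "data", None)
        if data:
            chunks.append(data)
    return pcm16_chunks_to_wav(chunks)
```

With a real client, something like `open("out.wav", "wb").write(stream_audio(OpenAI(), "count to 10 super slow"))` should produce a playable file. To actually get the audio faster, you would play each chunk as it arrives instead of collecting them all first.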

Non standard use-cases of AI corner

This week I started noticing and collecting some incredible use-cases of Gemini and its long context and multimodality, and wanted to share them with you. We had some incredible conversations about non-standard use cases that are pushing the boundaries of what's possible with LLMs.

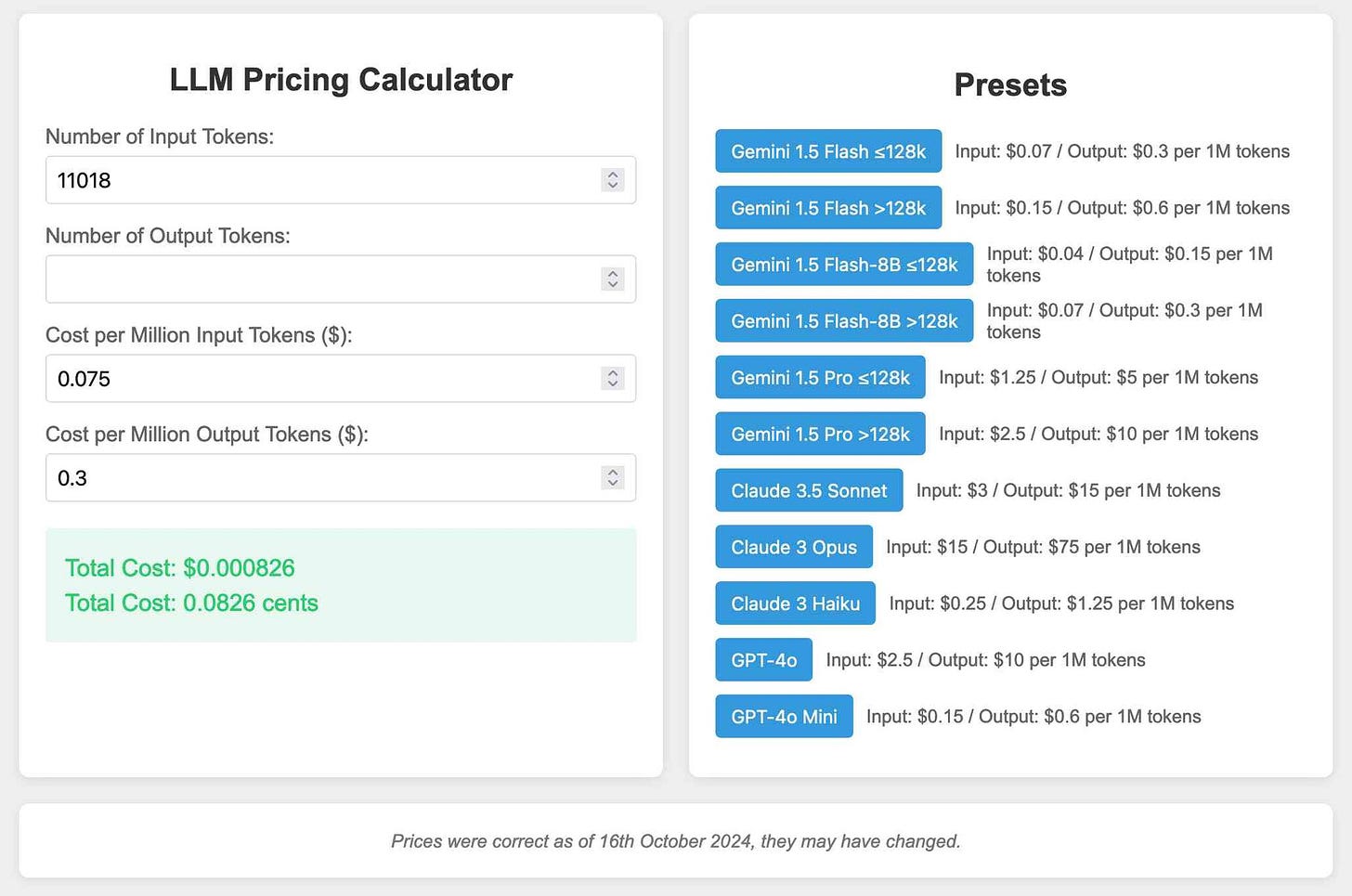

Hrishi blew me away with his experiments using Gemini for transcription and diarization. Turns out, Gemini is not only great at transcription (it beats Whisper!), it’s also ridiculously cheap compared to dedicated ASR models like Whisper: around 60x cheaper! He emphasized the unexplored potential of prompting multimodal models, adding, “the prompting on these things… is still poorly understood.” So much room for innovation here!

Simon Willison then stole the show with his mind-bending screen-scraping technique. He recorded a video of himself clicking through emails, fed it to Gemini Flash, and got perfect structured data in return. This trick isn’t just clever; it’s practically free, thanks to the ridiculously low cost of Gemini Flash. I even tried it myself, recording my X bookmarks and getting a near-perfect TLDR of the week’s AI news. The future of data extraction is here, and it involves screen recordings and very cheap (or free) LLMs.

Here's Simon's example of how much this would have cost him had he actually been charged for it.
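If you want to try the screen-recording trick yourself, the flow is roughly: upload the video, ask for JSON, parse the reply. The sketch below assumes the `google-generativeai` Python package and the `gemini-1.5-flash` model name (the show only said "Gemini Flash"). The prompt wording, field names, and helper functions are made up for illustration and are not the exact setup used on the show.

```python
import json
import time

FENCE = "`" * 3  # Markdown code fence that models sometimes wrap JSON in


def build_prompt(fields):
    """Ask for one JSON object per email seen in the recording."""
    return (
        "This is a screen recording of someone clicking through emails. "
        "Return a JSON array with one object per email, using exactly "
        "these keys: " + ", ".join(fields) + ". Return only JSON."
    )


def parse_records(text):
    """Parse the model reply, tolerating a code fence around the JSON."""
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        # Drop the opening fence line, then everything from the closing fence.
        _, _, rest = cleaned.partition("\n")
        cleaned = rest.rsplit(FENCE, 1)[0]
    return json.loads(cleaned)


def scrape_video(path, fields, api_key, model_name="gemini-1.5-flash"):
    """Upload a recording and return structured rows (needs network access)."""
    import google.generativeai as genai  # pip install google-generativeai

    genai.configure(api_key=api_key)
    video = genai.upload_file(path=path)
    while video.state.name == "PROCESSING":  # videos are processed asynchronously
        time.sleep(2)
        video = genai.get_file(video.name)
    model = genai.GenerativeModel(model_name)
    reply = model.generate_content([video, build_prompt(fields)])
    return parse_records(reply.text)
```

A call might look like `scrape_video("inbox.mp4", ["sender", "subject", "amount"], api_key)`. It is worth spot-checking the rows against the recording, since nothing here validates what the model returns.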

Speaking of Simon, he broke the news that NotebookLM has gotten an upgrade: the ability to steer the speakers with custom commands, which Simon promptly used to ask the overview hosts to talk like pelicans.

Voice Cloning, Adobe Magic, and the Quest for Real-Time Avatars

Voice cloning also took center stage this week, with the release of F5-TTS. This open-source model performs zero-shot voice cloning with just a few seconds of audio, raising all sorts of ethical questions (and exciting possibilities!). I played a sample on the show, and it was surprisingly convincing (though not without its problems) for a local model!

This, combined with Hallo 2's (also released this week!) ability to animate talking avatars, has Wolfram Ravenwolf dreaming of real-time AI assistants with personalized faces and voices. The pieces are falling into place, folks.

And for all you Adobe fans, Firefly Video has landed! This “commercially safe” text-to-video and image-to-video model is seamlessly integrated into Premiere, offering incredible features like extending video clips with AI-generated frames. Photoshop also got some Firefly love, with mind-bending relighting capabilities that could make AI-generated images indistinguishable from real photographs.

Wrapping Up:

Phew, that was a marathon, not a sprint! From robots to rockets, open source to proprietary, and voice cloning to video editing, this week has been a wild ride through the ever-evolving landscape of AI. Thanks for joining me on this adventure, and as always, keep exploring, keep building, and keep pushing those AI boundaries. The future is coming, and it’s going to be amazing.

P.S. Don’t forget to subscribe to the podcast and newsletter for more AI goodness, and if you’re in Seattle next week, come say hi at the AI Tinkerers meetup. I’ll be demoing my Halloween AI toy – it’s gonna be spooky!

TL;DR - Show Notes and Links

Open Source LLMs

Nvidia releases Llama 3.1-Nemotron-70B instruct: Outperforms GPT-4o and Anthropic Claude 3.5 on several benchmarks. Available on Hugging Face and Nvidia. (X, Harrison Eval)

Zamba2-7B: A hybrid Sparse Mixture of Experts model from Zyphra and Nvidia. Claims to outperform Mistral, Llama2, and Gemmas in the ≤8B weight class. (X, HF)

Zyda-2: 5T token dataset distilled from high-quality sources for training LLMs. Released by Zyphra and Nvidia. (X)

Ministral 3B & 8B: Mistral releases two new models for on-device use, claims SOTA (Blog)

Entropix aims to mimic advanced reasoning in small LLMs (Github, Breakdown)

Google releases Gemma-APS: A collection of Gemma models for text-to-propositions segmentation, distilled from Gemini Pro and fine-tuned on synthetic data. (HF)

Big CO LLMs + APIs

OpenAI ships advanced voice model in chat completions API endpoints with multimodality (X, Docs, My Example)

Amazon, Microsoft, Google all announce nuclear power for AI future

Yi-01.AI launches Yi-Lightning: A proprietary model accessible via API.

New Gemini API parameters: Google has shipped new Gemini API parameters, including logprobs, candidateCount, presencePenalty, seed, frequencyPenalty, and model_personality_in_response.

Google NotebookLM is no longer "experimental" and now allows for "steering" the hosts (Announcement)

xAI: Grok 2 and Grok 2 mini are now available via API on OpenRouter (X, OR)

This week's Buzz (What I learned with WandB this week)

Weave is now MultiModal (supports audio and text!) (X, Github Example)

Vision & Video

Adobe Firefly Video: Adobe's first commercially safe text-to-video and image-to-video generation model. Supports prompt coherence. (X)

Voice & Audio

Ichigo-Llama3.1 Local Real-Time Voice AI: Improvements allow it to talk back, recognize when it can't comprehend input, and run on a single Nvidia 3090 GPU. (X)

F5-TTS: Performs zero-shot voice cloning with less than 15 seconds of audio, using audio clips to generate additional audio. (HF, Paper)

AI Art & Diffusion & 3D

RF-Inversion: Zero-shot inversion and editing framework for Flux, introduced by Litu Rout. Allows for image editing and personalization without training, optimization, or prompt-tuning. (X)

Tools

Fastdata: A library for synthesizing 1B tokens. (X)