For a podcast-length discussion of various news items, tune in to the Audio version.

The vibes have shifted. This is still not a normal moment in AI¹, and we can’t precisely determine how or why, but they have shifted.

In Jan 2024 we (lightly) commented that the AI world order had been stagnant for a long time, but in the past 3 months, the cracks in that world order started to show:

“We went from 100% gpt4 usage to almost 0% in the last 3 months”. “I've switched to Claude completely. Better task clarification, more consistent output, and improved error handling. OpenAI isn't on par anymore.” More and more people canceling ChatGPT subscriptions (part of the Unbundling of ChatGPT) and OpenAI rumored to be losing $5B this year

Google AI Overviews being bad, bad, bad, bad (after the Gemini mess)

Microsoft announcing and canceling Recall, Figma announcing and canceling AI, McDonald’s testing and canceling Drive-thru AI (this follows Discord announcing and canceling Clyde last winter)

Inflection Pi 2.5 claiming 94% of GPT4 then non-acquihiring to Microsoft

Adept claiming (on our podcast) that they are “completely sold out” and then non-acquihiring to Amazon

Stability shipping Stable Diffusion 3 then Rombach and then Mostaque leaving to start Schelling AI

The Rabbit R1 winning CES and then crapping the bed, ditto Humane

Cognition Labs’ Devin becoming the most hyped AI demo of the year, then valued at $2bn, then debunked (partially! watch their talk at the World’s Fair for what’s next) and quickly cloned by OpenDevin, while their 14% SWE-Bench was soon surpassed by AutoCodeRover and Factory

VCs (even big ones) turning on OpenAI-backed Harvey, with customers privately agreeing (though it then announced a $100m raise with inside investors)

In isolation, all of these can be chalked up to strategic or temporary missteps by individuals, just doing their best wrangling complex systems in a short time.

In aggregate, they point to a fundamentally unhealthy industry dynamic that is at best dishonest, and at worst teetering on the brink of the next AI Winter².

But by far the most structural criticisms of this AI summer have come from two of the most hallowed financial institutions: Goldman Sachs and Sequoia Capital.

Trillion Dollar Baby

Curiously enough, both AI bulls and bears this year have landed on roughly the same ballpark number: one trillion dollars, though across different timeframes. The universal problem with these analyses is that they’re pretty… bad. It’s hard to pick a side in a debate where many leading arguments are low-effort strawmen for tribal minds to casually dismiss and carry on with their worldview.

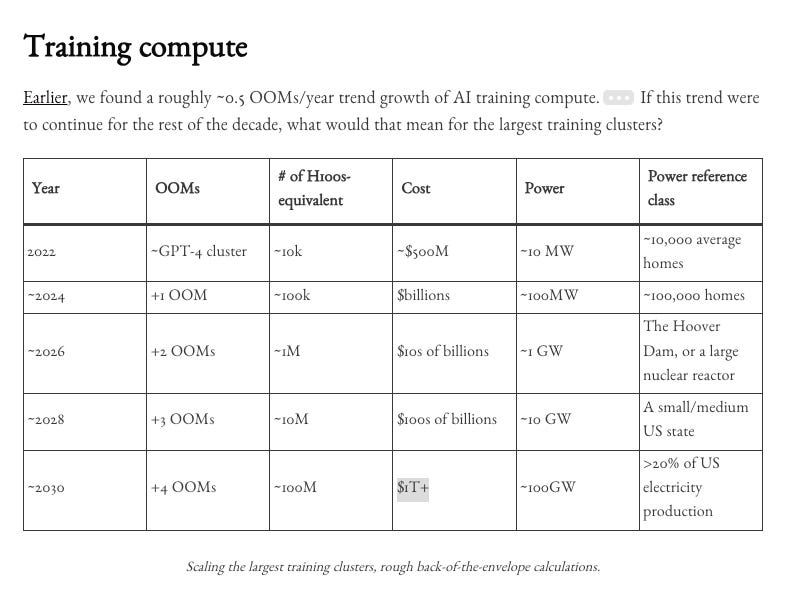

Here’s Leopold Aschenbrenner predicting that $1T/yr of industry investment, and eventually a $1T training cluster, can and will be done, because he thinks returns will scale proportionally with investment:

So far, every 10x scaleup in AI investment seems to yield the necessary returns. GPT-3.5 unleashed the ChatGPT mania. The estimated $500M cost for the GPT-4 cluster would have been paid off by the reported billions of annual revenue for Microsoft and OpenAI (see above calculations), and a “2024-class” training cluster in the billions will easily pay off if Microsoft/OpenAI AI revenue continues on track to a $10B+ revenue run rate. It’s hard to understate the ensuing reverberations. This would make AI products the biggest revenue driver for America’s largest corporations, and by far their biggest area of growth.

Of course, the easy criticism is: calm down son, diminishing returns are real, scaling laws don’t hold in economics like they do in AI³, and log lines do not go up and to the right forever when checked by physical reality. Even discounting that, what’s the bear case? Goldman Sachs’ Jim Covello asks the opposite question:

We estimate that the AI infrastructure buildout will cost over $1tn in the next several years alone, which includes spending on data centers, utilities, and applications. So, the crucial question is: What $1tn problem will AI solve? Replacing low wage jobs with tremendously costly technology is basically the polar opposite of the prior technology transitions I’ve witnessed in my thirty years of closely following the tech industry.⁴

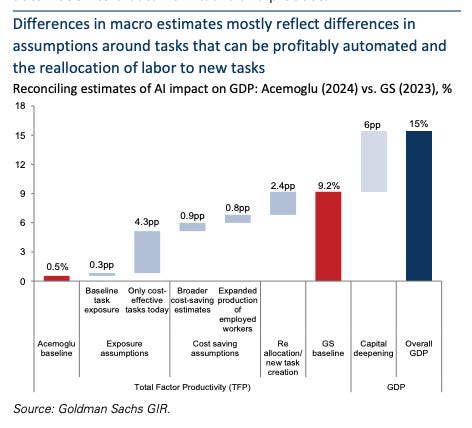

If you’ve heard the idea that “Goldman Sachs is bearish on AI”, you probably heard it from people like Ed Zitron, a “media personality” who very confidently makes sweeping statements⁵ primarily based on selective argument-by-authority. Reading the actual GS report makes clear that its editors were a lot more balanced, trotting out not one but three of Covello’s colleagues to argue against him. They in fact did remarkable work in isolating precisely the divergences in assumptions that led to the stark difference in outlook between the bulls (+9% GDP) and bears (+0.5%) in the piece. So GS responsibly does the job of dissecting its own Head of Global Equity Research’s arguments, which you can read in the report if you wish.

But you’re not going to. By the time we whip out the charts the average viewer has stopped reading. Balance and estimate breakdowns do not get views; hyperbole does.

Sequoia’s Questions

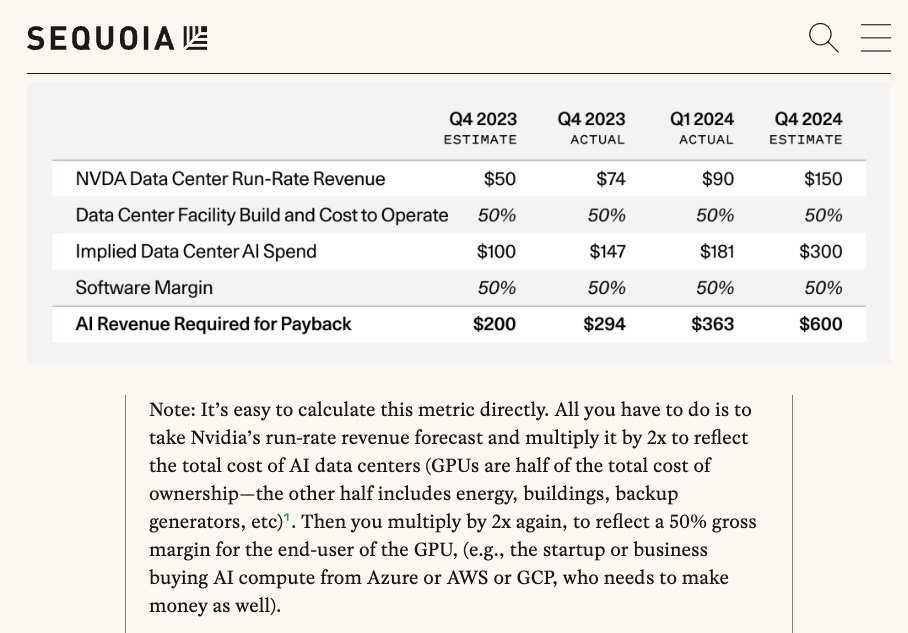

Sequoia’s consecutive $200B and $600B “questions” from David Cahn failed to make the headline-worthy $1 trillion number, but make the same point with a much simpler model: the AI industry needs lifetime revenue of 4x the amount of money going to NVIDIA for the AI infra buildout to pay itself back, based on a requirement of 50% data center margins and 50% software margins.

Contra NVIDIA’s massive tripling in data center revenue in the past year, David estimates AI industry revenue of $75B in 2023 and $100B in 2024, arriving at his revenue “hole” numbers of $125B and $500B.
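The arithmetic behind those “hole” figures is simple enough to check in a few lines. Here is a minimal sketch in Python using only the numbers quoted above. The function and variable names are ours, for illustration only, and the NVIDIA spend shown is not a reported figure: it is simply backed out of the 4x multiple.

```python
# Back-of-envelope check of the Sequoia "hole" math, in billions of USD.
# All inputs come from the figures quoted in this post; names are illustrative.

REVENUE_MULTIPLE = 4  # 2x for data center margins, then 2x for software margins


def implied_nvidia_spend(required_revenue: float) -> float:
    """Back out the NVIDIA spend implied by a required-revenue figure."""
    return required_revenue / REVENUE_MULTIPLE


def revenue_hole(required_revenue: float, estimated_revenue: float) -> float:
    """Gap between the revenue the model requires and the revenue estimated."""
    return required_revenue - estimated_revenue


scenarios = {
    "2023": {"required": 200, "estimated": 75},
    "2024": {"required": 600, "estimated": 100},
}

for year, s in scenarios.items():
    spend = implied_nvidia_spend(s["required"])
    hole = revenue_hole(s["required"], s["estimated"])
    print(f"{year}: implied NVIDIA spend ${spend:.0f}B, hole ${hole:.0f}B")
```

Run as-is, this reproduces the $125B and $500B holes. The takeaway is how little is inside the model: one multiple and one subtraction.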

Did you spot the issue? No? Not your fault. In the 2023 version, David mentions that the model assumes lifetime revenue, but that word is gone in the 2024 version. We’re conflating lifetime revenue with quarterly revenue, when we know that investment is amortized over the long term and compounding effects mean the biggest rewards come at the end⁶.
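To see why the lifetime-versus-run-rate distinction matters, here is a rough sketch of how long it takes cumulative revenue to cover a one-time revenue requirement. The $600B requirement and the $100B starting revenue are the figures above. The growth rates are purely hypothetical inputs of ours, not forecasts, and the function name is made up for illustration.

```python
def years_to_cover(required: float, first_year_revenue: float,
                   annual_growth: float, max_years: int = 100) -> int:
    """Count years until cumulative revenue reaches `required`.

    Revenue starts at `first_year_revenue` and compounds by `annual_growth`
    (0.5 means +50% per year). Amounts are in billions of USD.
    """
    cumulative = 0.0
    revenue = first_year_revenue
    for year in range(1, max_years + 1):
        cumulative += revenue
        if cumulative >= required:
            return year
        revenue *= 1 + annual_growth
    raise ValueError("requirement not covered within max_years")


# Hypothetical growth rates, chosen only to show the sensitivity.
for growth in (0.0, 0.5, 1.0):
    years = years_to_cover(required=600, first_year_revenue=100, annual_growth=growth)
    print(f"growth {growth:.0%}: covered in {years} years")
```

With flat revenue the requirement takes 6 years to cover; at the hypothetical +50% and +100% growth rates it takes 4 and 3. None of these is a prediction, but they show that a single year's revenue is the wrong yardstick for a multi-year investment.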

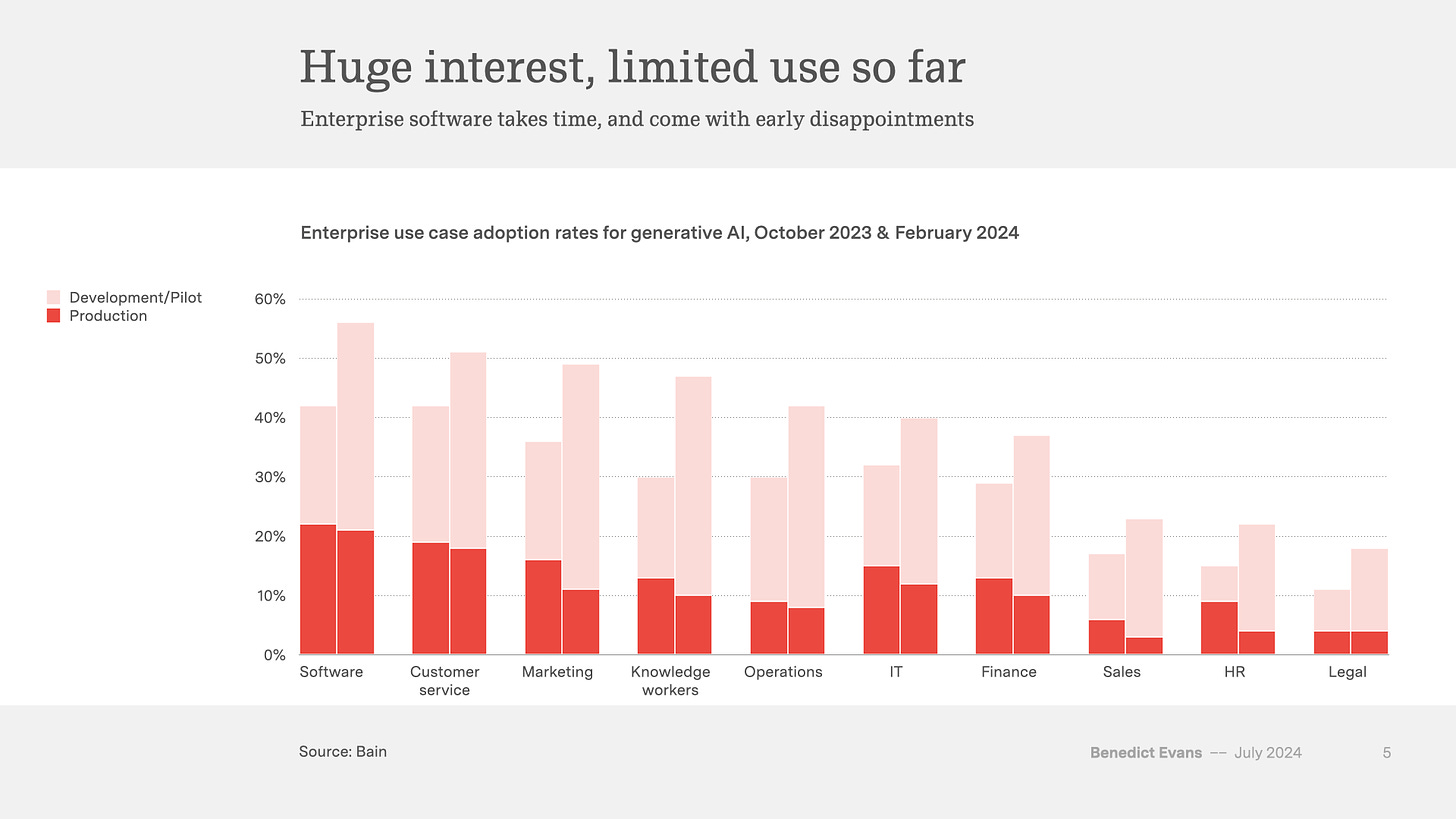

The tribal clapback takes have already started - you can listen to (previous guest) Vipul from Together AI’s take (imbalances happen, but don’t forget traditional ML spend), or Sarah and Elad’s take (don’t underestimate foundation models, enterprise adoption is slow), or (friend of the pod) NLW’s take (Brainstorming, Cost savings/productivity, Automation). Benedict Evans, he of the chart porn, has a more balanced discussion of the state of AI Summer:

The final piece worth an honorable mention this past quarter, though not quite qualifying in the AI infra spend debate, is Chris Paik’s The End of Software. It is provocatively written, and if true has very meaningful implications for the economy, but Chris is neither a developer nor a founder and he dramatically overestimates⁷ the economic impact of citizen software (take it from a no-code fan!).

Engineering, not Tribalism

We could go point by point (and indeed to some extent cannot resist, see footnotes⁸) debating both the skeptics and the hypebois. But then we’d be contributing to more of the same - one person’s signal is another’s noise - and it is the nature of midwits to want to score points in the battlefield of ideas. He said X, he doesn’t know what he’s talking about, I say Y, I’m smart and connected and know things, listen to me.

All noise.

What’s real is this: the future is here, but it is not evenly distributed.

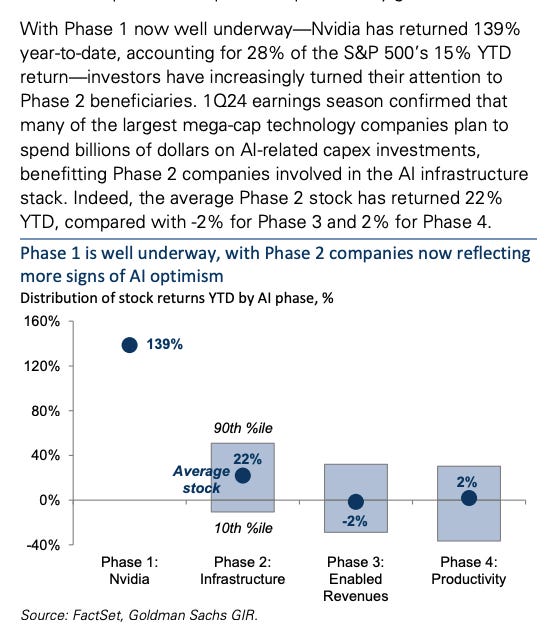

In the same way that 5 stocks account for 96% of the S&P 500’s gains this year, the rollout and benefit of AI has been extremely imbalanced. From the GS report again:

We have mindblowing models and plenty of money flowing to GPUs; infra is improving and costs are coming down. And we have the tools!

What we haven’t seen is proportionate revenue and productivity gains flowing to the rest of the economy.

The future is here, but it is not evenly distributed.

It is the solemn and unique responsibility of AI Engineers to bridge that gap from capabilities to product.

Time to build, or else AI Winter is coming.

Monthly Notes

Given what our readership responds to and the incentives of HN/Twitter distribution, we have pivoted our notetaking strategy to a combination of short essays every weekday via Email and monthly notes on GitHub (see Mar, Apr, May, and Jun). Let us know what you think of this new format!

With thousands of non-researchers showing up at AI conferences and hackathons, we are definitely still in the full swing of AI Summer. Full talk here.

We typically try to stay technically grounded on Latent Space, but the topic of AI Winter is both real and relevant; the last time we brought it up was almost a year ago with AI Fall.

When the dismal science meets the bitter lesson, the dismal science wins!

More gems that didn’t make our cut:

“when describing the efficiency benefits of AI, [he] added that while it was able to create an AI that updated historical data in its company models more quickly than doing so manually, it cost six times as much to do so.” Skill issue tbh

“the tech world is too complacent in the assumption that AI costs will decline substantially over time.” Bruh. Bruh.

“no such roadmap (or killer app) for AI has been found.” We count four so far and that’s an old post.

“Generative AI is unprofitable, unsustainable, and fundamentally limited in what it can do thanks to the fact that it's probabilistically generating an answer.” Ah, a stochastic parrotooor. Got it.

“Because the market under-appreciates the B100 and the rate at which next-gen chips will improve, it overestimates the extent to which H100s purchased today will hold their value in 3-4 years.” They make much of the B100s but don’t forget that current-generation GPUs are often converted to inference workloads when they become legacy.

As for the revenue hole, it’s still real, just not as big, and it is closing fast. Though the numbers are small in David’s terms, the growth rates for AI native companies are still very healthy. OpenAI’s revenue grew 700% in 2023 and another 100% YTD, after several years of minimal growth. And it’s not just the AI majors like Anthropic (+680% yoy) or Cohere (+170% YTD) or Midjourney (?); many of the newer foundation model companies like HeyGen (+1000% growth yoy) and Suno (0 to $15m in 6 months since our pod!) are doing just fine too. While we are just talking hundreds of millions vs David’s hundreds of billions, these all underlie AI-powered improvements in the layers above them.

and unfortunately timed…

You think we are running out of data? Training on 1-2T tokens was SOTA a year ago, and in 2024 Llama 3 trains on 15T tokens (DBRX 12T, Reka 5T), HuggingFace releases 15T super high quality tokens, and Apple interns pipeline 240T datasets for a fun baseline, and we’re starting to see synthetic data beat its own teacher models, which Claude- and GPT-Next are almost certainly using (with verifiers of course).

You think inference costs are not coming down? Read Shazeer et al 2024 and Defazio et al 2024 (paper) and Deepseek v2 and get back to us. And this is the year of on-device AI - from Apple Intelligence to Gemma 2/Gemini Nano to SmolLM - that will make commodity inference truly too cheap to meter.