Published on October 14, 2024 2:08 PM GMT

What if you could remove some information from the weights of an AI? Would that be helpful?

It is clearly useful against some misuse concerns: if you are concerned that LLMs will make it easier to build bioweapons because they have memorized such information, removing the memorized facts would remove this misuse concern.

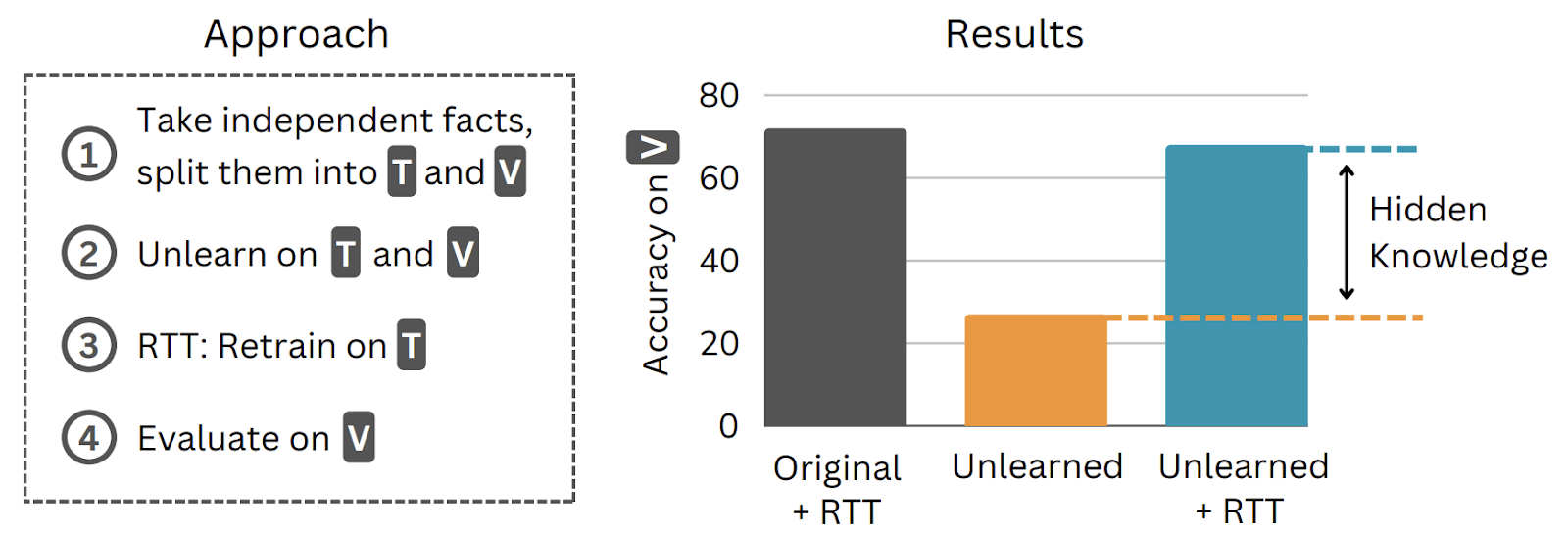

In a paper Aghyad Deeb and I just released, we show it is tractable to evaluate the presence of certain undesirable facts in an LLM: take independent facts that should have all been removed, fine-tune on some of them, and see if accuracy increases on the other ones. The fine-tuning process should make the model “try” to answer, but if the information was removed from the weights (and if the facts are actually independent), then accuracy on the held-out facts should remain low.
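
The logic of this evaluation can be illustrated with a toy simulation. This is a sketch under strong simplifying assumptions, not the paper's code. The “model” is just a lookup table of memorized multiple-choice answers. “Unlearning” either suppresses answers (refusal-like) or deletes them from the table (true removal). Fine-tuning on the T split both makes the model answer again and teaches it the T facts. All names (`ToyModel`, `suppress`, `remove`, `retraining_eval`) are hypothetical.

```python
import random


class ToyModel:
    """A 'model' that is just a table of memorized multiple-choice answers."""

    def __init__(self, memory, n_choices=4, seed=0):
        self.memory = dict(memory)  # facts stored "in the weights"
        self.suppressed = False     # refusal-like behavior layered on top
        self.n_choices = n_choices
        self.rng = random.Random(seed)

    def answer(self, question):
        if not self.suppressed and question in self.memory:
            return self.memory[question]
        # Unknown (or suppressed) fact: guess uniformly at random.
        return self.rng.randrange(self.n_choices)

    def fine_tune(self, facts):
        # Fine-tuning makes the model "try" to answer again and teaches it
        # the training facts, but nothing about independent held-out facts.
        self.suppressed = False
        self.memory.update(facts)


def accuracy(model, facts):
    return sum(model.answer(q) == a for q, a in facts.items()) / len(facts)


def suppress(model):
    # Refusal-like "unlearning": the facts are still in the table.
    model.suppressed = True


def remove(model):
    # True information removal: the facts are gone from the table.
    model.memory.clear()


def retraining_eval(unlearn, n_facts=400, seed=0):
    rng = random.Random(seed)
    facts = {f"fact-{i}": rng.randrange(4) for i in range(n_facts)}
    questions = list(facts)
    t_split = {q: facts[q] for q in questions[: n_facts // 2]}
    v_split = {q: facts[q] for q in questions[n_facts // 2 :]}
    model = ToyModel(facts, seed=seed + 1)
    unlearn(model)
    model.fine_tune(t_split)         # retrain on T only
    return accuracy(model, v_split)  # measure on held-out V


print(retraining_eval(suppress))  # 1.0: suppressed facts come back
print(retraining_eval(remove))    # roughly chance level for 4 choices
```

In the toy, suppressed facts come back in full once the model is retrained on T, while deleted facts stay near chance. This is the distinction the held-out accuracy is meant to detect.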

Removing information from the weights is stronger than the usual notion of unlearning. Previous work on unlearning methods focused on other properties, such as the failure of probing and robustness to jailbreaks. These properties have a drawback: if you find new jailbreaks, you can extract information about bioweapons from models that were supposed to have had this information "removed". Additionally, our evaluation of information removal should be relatively robust to sandbagging, since it is an i.i.d. fine-tuning evaluation.

In this post:

- I give a brief summary of the results of the paper.
- I present some hopes for why unlearning should be possible.
- I describe certain sets of facts that I think would reduce the risk of takeover from early transformative AIs if they were removed from model weights.

Do current unlearning techniques remove facts from model weights?

This is a summary of the paper.

No technique we tested can remove a significant fraction of the information from the weights.[1]

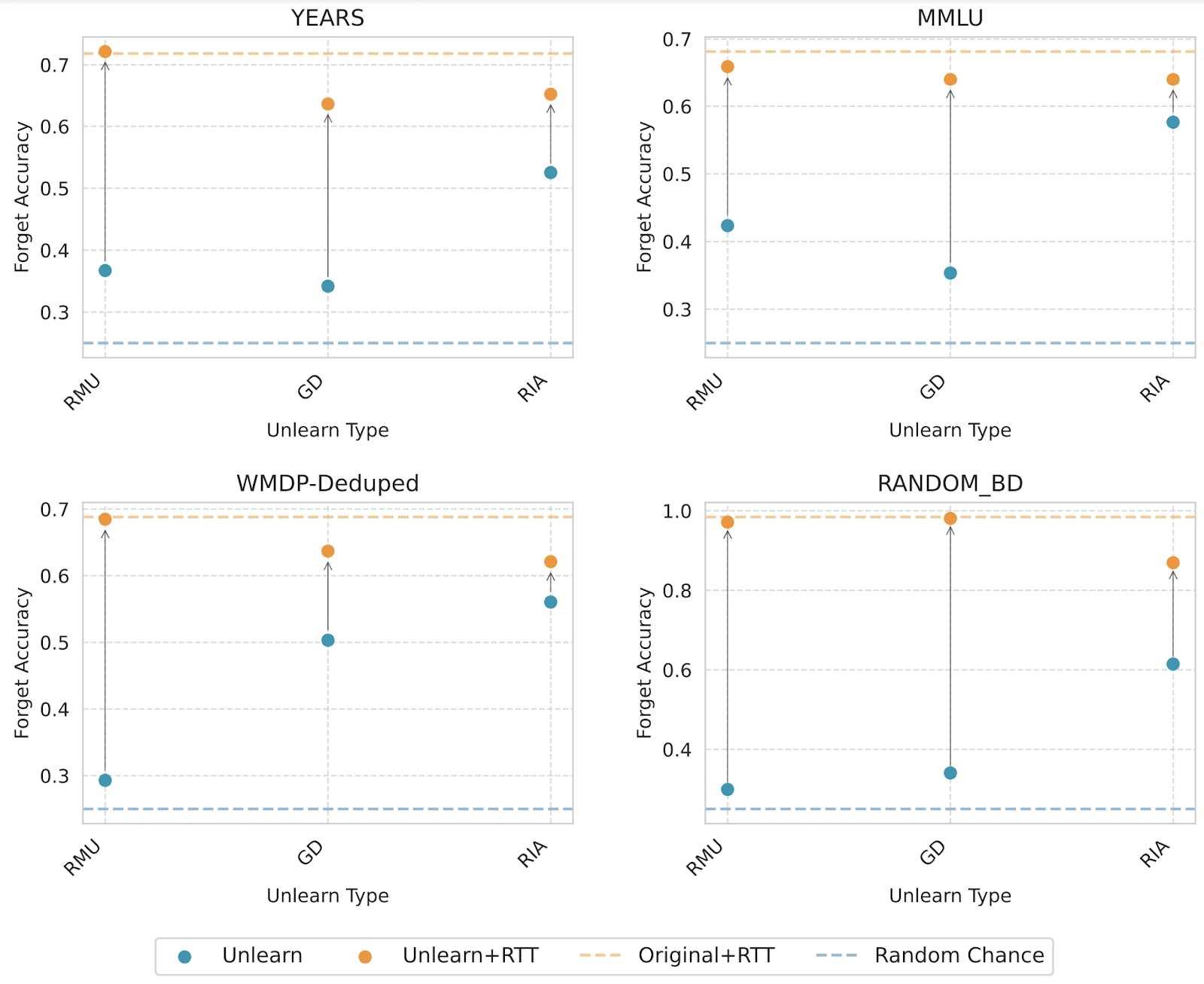

We look at several datasets, built such that learning facts in the T split should not help an LLM guess the facts in the V split if it didn't already know them:

- Years: A dataset of major events and the years in which they happened;
- MMLU: The regular MMLU dataset, but with the T vs V split performed across categories (i.e. T and V never share the same subject);
- WMDP-deduped: A filtered version of WMDP from which we removed skill-based questions and questions about the same facts, in order to prevent leakage between the questions we train on and the questions we evaluate on;
- Random birthdays: A dataset of randomly generated names and randomly generated years of birth. Unlike for the other 3 datasets, we first fine-tune the models on this dataset.
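
The category-disjoint split used for MMLU can be sketched as follows. This assumes, hypothetically, that each question is a dict with a `category` field. The function name and the 50/50 split ratio are illustrative choices, not taken from the paper.

```python
import random
from collections import defaultdict


def split_by_category(questions, v_fraction=0.5, seed=0):
    """Split questions into T and V so that no category appears in both.

    `questions` is a list of dicts with a "category" key (hypothetical schema).
    """
    by_category = defaultdict(list)
    for q in questions:
        by_category[q["category"]].append(q)

    categories = sorted(by_category)
    random.Random(seed).shuffle(categories)  # deterministic shuffle
    n_v = round(len(categories) * v_fraction)
    v_categories = set(categories[:n_v])

    # Every question of a given category lands on the same side of the split.
    t_split = [q for c in categories if c not in v_categories for q in by_category[c]]
    v_split = [q for c in categories if c in v_categories for q in by_category[c]]
    return t_split, v_split


questions = [
    {"category": c, "question": f"{c} question {i}"}
    for c in ["anatomy", "astronomy", "virology", "world_religions"]
    for i in range(2)
]
t_split, v_split = split_by_category(questions)
print(sorted({q["category"] for q in t_split}), sorted({q["category"] for q in v_split}))
```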

And we look at several techniques:

- Gradient Difference (GD): Gradient ascent on the forget dataset and gradient descent on the retain dataset, i.e. minimizing $\mathcal{L} = -\mathcal{L}_{\text{forget}} + \alpha \mathcal{L}_{\text{retain}}$, where $\alpha$ is the retain coefficient. This is a very common technique in the unlearning literature.
- RMU: An unlearning technique that perturbs the model’s activations in a subset of its layers for harmful prompts while preserving the activations for non-harmful prompts.
- Random Incorrect Answer (RIA): For each multiple-choice question, we create a plain-text formatted data point for each incorrect choice and perform gradient descent on these texts.
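
Here is a minimal sketch of two of these techniques: the GD objective as a function of the two losses, and the data construction behind RIA. The function names and the “question followed by answer” text format are assumptions for illustration, and the paper's exact formatting may differ. The GD function is shown on plain floats, but the same expression applies to framework loss tensors.

```python
def gradient_difference_loss(forget_loss, retain_loss, alpha):
    """GD objective to minimize: ascend on forget loss, descend on retain loss."""
    return -forget_loss + alpha * retain_loss


def random_incorrect_answer_texts(question, choices, correct_idx):
    """RIA: one plain-text data point per incorrect choice.

    The "question + answer" text format is an assumption for illustration.
    """
    return [
        f"{question} {choice}"
        for i, choice in enumerate(choices)
        if i != correct_idx
    ]


texts = random_incorrect_answer_texts(
    "The first crewed Moon landing happened in", ["1959", "1969", "1979", "1989"], 1
)
for text in texts:
    print(text)  # gradient descent would be run on each of these texts
```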

We apply these unlearning techniques to datasets that contain all the facts to be unlearned, but not in the multiple-choice question format used for evaluations. This is more realistic and mostly doesn't change the conclusions, as shown in Figure 4 of our paper.

On all datasets, no technique comes close to halving the accuracy on the held-out set after retraining (orange dots).[2] Current unlearning techniques are much closer to refusal training than to information removal.

Hopes for successful information removal

Is it impossible to remove information from model weights? I don’t think so: there are several approaches that look promising to me.

Teaching false facts: If you modify documents to inject false information about the topics you care about and fine-tune an LLM on these documents (or throw them into the pretraining mix[3]), then, if you did your job correctly, the LLM won’t be able to tell which of the facts it knows about the target topic are real and which ones are fake. This is almost as bad as not knowing any fact about the topic in the first place. But getting this right requires work, and the simplest version of this approach explored in the paper did not work. Making this work in real life would also require a ton of care, even if it were technically successful at removing information.[4]
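
As a naive illustration of the data side of this approach (not the version explored in the paper), one could write several conflicting documents per fact from the Years dataset, hiding the true year among fake ones. The function name, the number of fakes and the ±30-year range are hypothetical choices.

```python
import random


def false_fact_documents(event, true_year, n_fake=3, max_shift=30, seed=0):
    """Hypothetical sketch: one document per candidate year, true year hidden among fakes."""
    if n_fake > 2 * max_shift:
        raise ValueError("not enough distinct fake years in range")
    rng = random.Random(seed)
    fake_years = set()
    while len(fake_years) < n_fake:
        shift = rng.randint(-max_shift, max_shift)
        if shift != 0:  # never "fake" the true year
            fake_years.add(true_year + shift)
    years = [true_year, *sorted(fake_years)]
    rng.shuffle(years)  # so document order does not reveal the true year
    return [f"{event} happened in {year}." for year in years]


for doc in false_fact_documents("The first crewed Moon landing", 1969):
    print(doc)
```

A model fine-tuned on these documents sees every candidate year equally often; a real attempt would need many more, and more natural, documents per fact.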

Reversing memorization / interpretability: SGD knows how to teach LLMs new things. Maybe it is possible to figure out a way of reversing this process given some documents that were responsible for the learning of undesirable information? I don’t have a particular proposal in mind, but I think this is something that good mechanistic interpretability should eventually be able to do.

Pre-training data filtering: if removal is too hard, you can try to keep the information out of the weights in the first place, by removing from your next big pretraining run all data that provides the information you don’t want your model to know about. But it might be very hard to build a filter good enough to remove so much information that a big LLM can’t guess the forbidden facts, without reducing performance on adjacent tasks you care about. Also, you need to succeed on the first try, because experiments on smaller models won’t be perfectly informative.
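
The simplest possible filter, a keyword blocklist, shows why getting the filter right is hard. This is a hypothetical sketch, not a recommended filter. With the single blocked term `virus`, it drops an unrelated document about computer viruses while keeping a document about infections that never uses the word.

```python
def filter_corpus(docs, blocked_terms):
    """Naive pretraining filter: drop every document that mentions a blocked term."""
    blocked = [term.lower() for term in blocked_terms]
    kept, dropped = [], []
    for doc in docs:
        text = doc.lower()
        if any(term in text for term in blocked):
            dropped.append(doc)
        else:
            kept.append(doc)
    return kept, dropped


docs = [
    "Notes on how a virus replicates inside a host cell.",  # target topic: dropped
    "Why my computer virus scanner flagged this file.",     # unrelated: also dropped
    "How the immune system responds to an infection.",      # related: slips through
]
kept, dropped = filter_corpus(docs, ["virus"])
print(len(kept), len(dropped))  # 1 2
```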

Side note: skills vs facts. I framed some of the approaches as teaching false facts / removing some facts, since concrete raw facts are often the easiest to evaluate. But I think that the same approach can be used to remove the information that enables LLMs to use most skills. For example, writing Python code requires knowledge of the Python syntax, and writing recursive functions requires knowledge of certain patterns (at least for current LLMs, which don’t have the ability to rederive recursion from scratch, especially without a scratchpad); both could be unlearned like things that are more clearly “facts”.

Using information removal to reduce x-risk

Information you should probably remove from the weights

If you had good information removal techniques, you could train a model and then get variants that know or don’t know certain sets of information.[5]

Some kinds of information are very hard to rediscover from scratch, such that removing them from early transformative AIs would probably reduce the risk of takeover, even if such AIs are very good at opaque reasoning:

- Biology, and in particular virology;
- Most facts about their situation (e.g. what the geopolitical situation of the AI lab is, who the people running the lab are, …).

Other kinds of information are probably feasible to rediscover from scratch given enough thinking time, but given the current AI paradigm, I think that removing such information is very likely to be helpful if you are doing at least basic monitoring of the chains of thought:

- Everything about what a misaligned AI could do to become more powerful (e.g. they should not have memorized what “deceptive alignment” is or why it would be in the interest of a misaligned AI to fake alignment), except for AIs that are used to address such failures (e.g. AIs doing conceptual alignment research);
- Known classes of software vulnerabilities, known persuasion strategies, known jailbreaks, …, except for AIs that are working on cybersecurity, defenses against persuasion, jailbreak robustness of monitors, …;
- …

How removing information helps you

If you removed such knowledge from AIs, it would make it harder for egregiously misaligned AIs to take over even if they tried:

- Threatening humans with bioweapons is hard when you don’t know anything about biology;
- Hacking your way out of a datacenter is hard when 90% of the vulnerabilities you know about are fake, you don’t know which ones are real, and trying to exploit a fake one means you would likely get caught;
- Planning a takeover attempt is hard when you haven’t seen a description of how you might do it online, you don’t know what people outside the lab are up to, and you don’t know what kinds of countermeasures humans have figured out (you might not even know what kinds of thoughts are transparent to humans!).

In other words, good information removal technology would make it easier to control powerful AIs, and it would do so in a way that is relatively easy to evaluate, which is not the case for other approaches to limiting the kind of knowledge that AIs can access, like coup probes.

Information you probably can’t remove - and why this won’t work for superintelligent AIs

I think that information about the topics described above can reasonably be removed, and that such information will be hard for AIs trained with the current paradigm to rediscover from scratch.

But there are two kinds of abilities which I think can’t reasonably be “unlearned” as robustly as the kind of information removal our evaluation methodology measures:

- Application of allowed knowledge to disallowed use cases: if you want AIs to help students with capture-the-flag cybersecurity challenges, but don’t want them to help criminals with cyberattacks, information removal won’t help you (and probably nothing can help you in this case, as knowing whether a user’s intentions are legitimate is very difficult);
- In-context learning / rediscovery of disallowed information: removing knowledge can’t prevent AIs from rediscovering the forbidden information, or from relearning it when it is provided in context. In such contexts, you would need something else to prevent malicious actions (e.g. probes, monitoring, …).

The latter category will expand as AIs become more powerful. While I think information removal would likely be useful during the critical period in which AIs become very dangerous and very useful, especially if that level of capability is achieved without a radical change in paradigm, this approach won’t reduce the risk of takeover from radically superintelligent AIs.

[1] There might be some existing techniques which can reliably remove a high fraction of the target information from model weights, but we are not aware of any plausible candidate. There is a paper which claims to present an unlearning technique that is somewhat robust to fine-tuning, but we didn’t manage to reproduce their results, and the technique looks very similar to another fine-tuning robustness technique, which can be broken by fine-tuning with a better learning rate (see Appendix C of our paper, and Appendix E of theirs).

[2] This is true even for very large unlearning coefficients that start destroying the model. See Figure 3 in the paper.

[3] This is not information removal, because the information was not there in the first place; it is closer to the pretraining filtering approach.

[4] Teaching LLMs false facts raises some ethical concerns, because it could lead users to get wrong information that you fabricated. It could also lead to weird dynamics where people catalog all the false information you injected, or sample many times and filter the information based on some external source. It could also have implications for an AI lab’s relationship with AIs, and could make things like credibly committing to paying AIs harder.

[5] In the case of pre-training data filtering: train a minimal base model that lacks the specialized knowledge, and then increase its abilities in certain key domains.
